Contextual Engineering: Designing Intelligent Systems That Actually Understand You

Ever feel like your AI assistant answers your questions—but never truly understands you? That’s because most AI systems today operate in isolation. Without context, even the most advanced large language models (LLMs) behave like amnesiac geniuses—brilliant, but forgetful.

Welcome to the emerging discipline of Contextual Engineering, where context isn’t an afterthought—it becomes the very foundation of the system.

The age of AI is no longer defined by model size alone—it’s increasingly about smarter, more meaningful interactions.

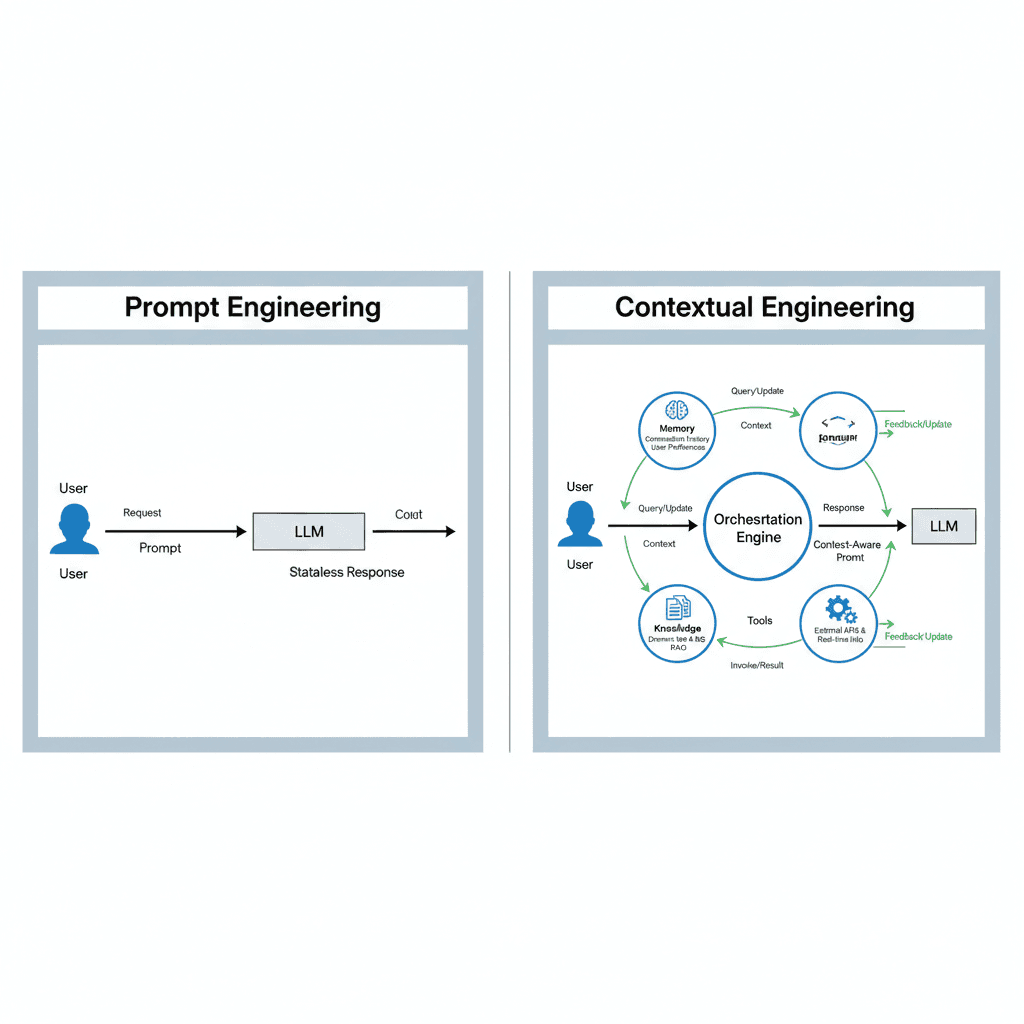

For years, developers relied on prompt engineering—crafting precise instructions to nudge LLMs into useful behavior. This worked for experimentation and small tasks, but the cracks appeared quickly in real-world applications: limited personalization, lack of memory, and brittle workflows.

What Is Contextual Engineering?

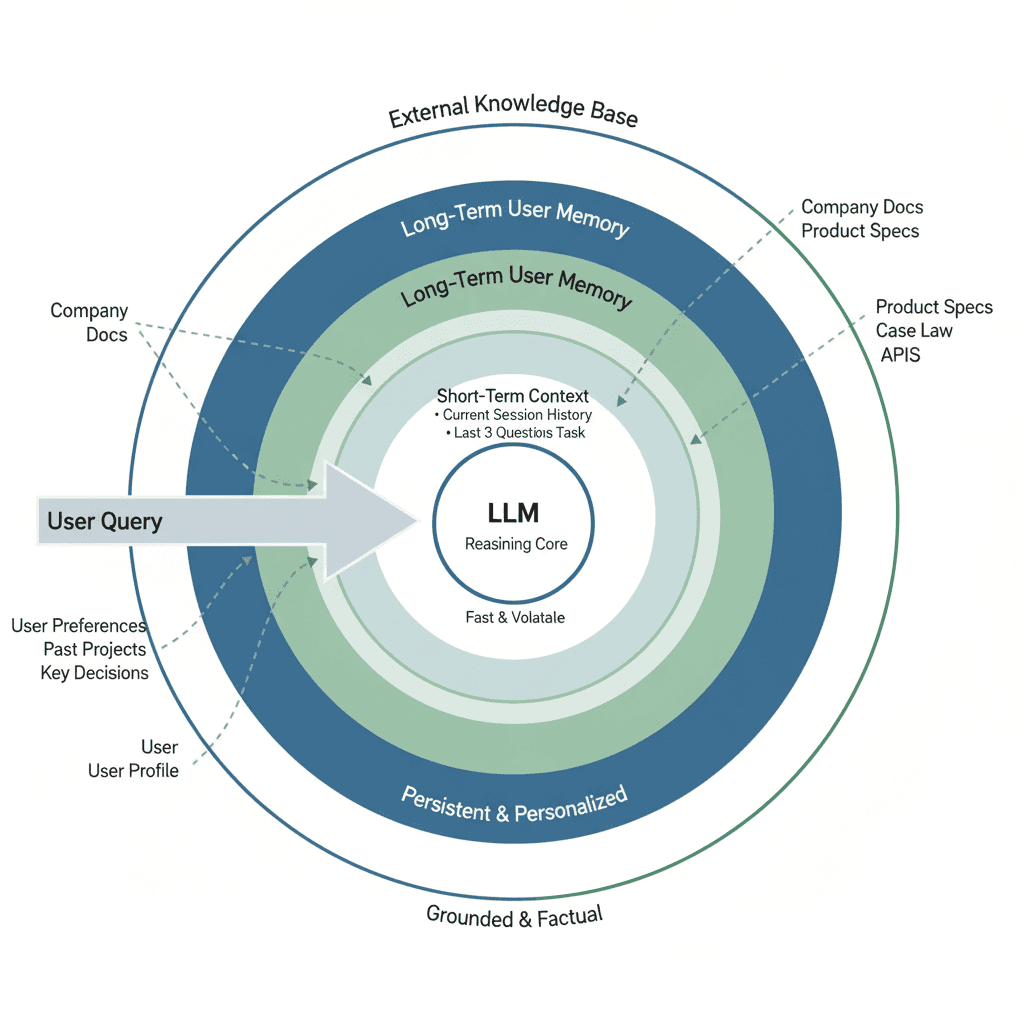

Contextual Engineering is the discipline of embedding rich, evolving context into AI systems so they can act intelligently across sessions—not only within a single interaction.

It considers multiple layers of context:

- User identity and preferences → personalization

- Task goals and progress → long-term reasoning

- Conversation history → continuity

- Domain-specific knowledge → accuracy in specialized fields

- External tools and environment → real-world adaptability

In practice, this means designing memory pipelines, retrieval strategies, and context-refresh mechanisms rather than manually hacking prompts.
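To make the layers concrete, here is a minimal sketch of how they might be gathered into one structure before each model call. The `ContextBundle` class, its field names, and the `render` format are all hypothetical and chosen only for illustration; a production system would likely pull each layer from its own store.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container: one field per context layer."""
    user_profile: dict = field(default_factory=dict)   # identity and preferences
    task_state: dict = field(default_factory=dict)     # goals and progress
    history: list = field(default_factory=list)        # conversation turns, oldest first
    domain_notes: list = field(default_factory=list)   # specialized knowledge snippets
    tool_results: dict = field(default_factory=dict)   # output from external tools

    def render(self, max_history: int = 3) -> str:
        """Join the non-empty layers into one text preamble for the model."""
        def pairs(d):
            return "; ".join(f"{k}={v}" for k, v in d.items())

        # Keep only the most recent turns; guard against max_history == 0.
        recent = self.history[-max_history:] if max_history > 0 else []
        sections = [
            ("User", pairs(self.user_profile)),
            ("Task", pairs(self.task_state)),
            ("History", " / ".join(recent)),
            ("Domain", " / ".join(self.domain_notes)),
            ("Tools", pairs(self.tool_results)),
        ]
        return "\n".join(f"{name}: {body}" for name, body in sections if body)


bundle = ContextBundle(
    user_profile={"name": "Ada", "tone": "concise"},
    task_state={"goal": "draft report", "step": "outline"},
    history=["hi", "what is due?", "the report", "start the outline"],
)
print(bundle.render())
```

Empty layers are skipped, so the same structure works for a brand-new user and for one with months of history.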

Why Context Matters: The Role of Context Size

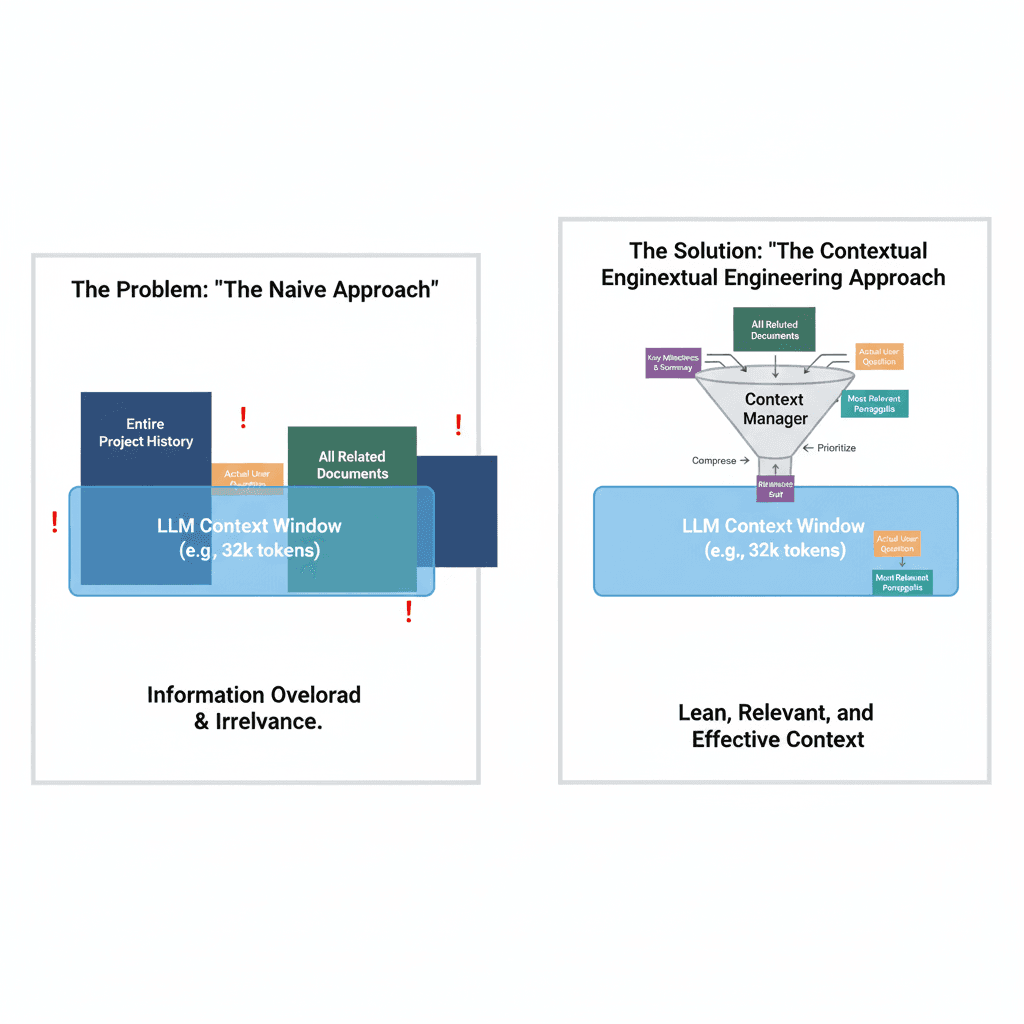

At the heart of this shift is a critical insight: LLMs have no built-in continuity between calls. They see only what fits inside their token limit.

What Is Context Size?

Every model has a maximum context window:

- GPT-3.5 → ~4,000 tokens

- GPT-4 → up to 128,000 tokens in the latest high-context versions

- Claude 4 → up to 1,000,000 tokens for specific use cases

These numbers are large but finite. Even with 200k tokens, you can’t load an entire textbook, project history, and knowledge base simultaneously.
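A quick back-of-the-envelope check shows how fast a window fills up. The sketch below assumes roughly four characters per token, a common rule of thumb for English text rather than an exact figure. The function names, the reply reserve, and the input sizes are illustrative assumptions.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough size estimate; a real tokenizer would give exact counts."""
    return math.ceil(len(text) / chars_per_token)


def remaining_budget(window: int, parts: list[str], reserved_for_reply: int = 1000) -> int:
    """Tokens left after the given parts and a reply reserve are accounted for.

    A negative result means the parts do not fit and something must be
    dropped or compressed.
    """
    used = sum(estimate_tokens(p) for p in parts)
    return window - reserved_for_reply - used


# Placeholder inputs standing in for real documents (sizes are illustrative).
history = "x" * 40_000          # ~10,000 estimated tokens
knowledge_base = "y" * 900_000  # ~225,000 estimated tokens

print(remaining_budget(200_000, [history]))                  # 189000
print(remaining_budget(200_000, [history, knowledge_base]))  # -36000
```

The second call goes negative: even a 200k window cannot hold both inputs at once, which is the gap a context manager has to close.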

The Challenge

- Too little context → the model forgets key details

- Too much context → irrelevant or noisy input overwhelms the model

The Solution

Contextual Engineering designs smart context managers that:

- Filter what matters most right now

- Compress long histories into summaries

- Refresh context dynamically as new goals emerge
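The three behaviors above can be sketched in a few dozen lines. This is a toy under stated assumptions, not a reference design: `ContextManager` and its methods are hypothetical names, relevance is a crude keyword overlap, and `naive_summarize` merely truncates each turn where a real system would more likely call a summarization model.

```python
import re
from dataclasses import dataclass, field


def words(text: str) -> set:
    """Lowercased word set, used for a crude keyword-overlap relevance check."""
    return set(re.findall(r"\w+", text.lower()))


def naive_summarize(turns: list, max_words: int = 8) -> str:
    """Placeholder compressor: keep the first few words of each turn."""
    return " | ".join(" ".join(t.split()[:max_words]) for t in turns)


@dataclass
class ContextManager:
    goal: str
    keep_recent: int = 2                        # turns kept verbatim
    summary: str = ""                           # compressed older history
    recent: list = field(default_factory=list)

    def add_turn(self, turn: str) -> None:
        """Store a turn; fold anything beyond keep_recent into the summary."""
        self.recent.append(turn)
        cut = max(0, len(self.recent) - self.keep_recent)
        overflow, self.recent = self.recent[:cut], self.recent[cut:]
        if overflow:
            parts = [self.summary] if self.summary else []
            self.summary = " | ".join(parts + [naive_summarize(overflow)])

    def refresh_goal(self, new_goal: str) -> None:
        """Switch goals; the next build() filters against the new goal."""
        self.goal = new_goal

    def build(self) -> str:
        """Return the goal, the summary, and recent turns sharing a word with the goal."""
        relevant = [t for t in self.recent if words(self.goal) & words(t)]
        lines = [f"Goal: {self.goal}"]
        if self.summary:
            lines.append(f"Summary: {self.summary}")
        lines += [f"Recent: {t}" for t in relevant]
        return "\n".join(lines)


cm = ContextManager(goal="plan travel budget")
for turn in ["My travel budget is 2000 dollars",
             "I like jazz music",
             "Which budget airlines fly to Lisbon?"]:
    cm.add_turn(turn)
print(cm.build())

cm.refresh_goal("find jazz concerts")
print(cm.build())
```

After the goal changes, the jazz turn comes back into focus and the airline question drops out, while the compressed summary is carried along unchanged.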

Why Prompt Engineering Isn’t Enough

Prompt engineering taught us a lot, but it assumes:

- Every question is independent (stateless)

- The model has no recall of past tasks

- Inputs can be handcrafted every time

In real-world applications, this falls apart. Consider:

- Healthcare: A digital health assistant must remember medical history across sessions.

- Finance: A portfolio tracker must adapt to risk tolerance over time.

- Legal: An AI paralegal must recall earlier case references.

Without structured memory and continuity, users experience frustration, redundancy, and inefficiency.

A More Human-Like Model of Interaction

Humans communicate by carrying context forward:

- We don’t reset conversations every time we speak

- We adapt based on prior knowledge of people and tasks

- We build shared memory over time

Contextual Engineering mirrors this by combining:

- Short-term memory (session-level history)

- Long-term memory (persistent facts, preferences, knowledge)

- Real-time situational awareness (environment and tools)

For enterprises, this means customers don’t have to repeat themselves every time they interact with a support bot. For researchers, it means queries can build upon prior discoveries. For individuals, it feels like AI finally “knows” them.
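As a rough illustration of the three memory types, the sketch below keeps long-term facts in a JSON file, session history in a plain list, and takes situational data from the caller. The `AssistantMemory` class, its method names, and the JSON-file storage are assumptions made for this example; a real deployment would more likely use a database or vector store.

```python
import json
from pathlib import Path


class AssistantMemory:
    """Hypothetical two-tier memory: a JSON file for long-term facts,
    an in-process list for the current session."""

    def __init__(self, path: Path):
        self.path = path
        # Long-term memory survives restarts because it is reloaded from disk.
        self.long_term = json.loads(path.read_text()) if path.exists() else {}
        # Short-term memory starts empty in every new session.
        self.short_term = []

    def remember(self, key: str, value: str) -> None:
        """Persist a durable fact or preference."""
        self.long_term[key] = value
        self.path.write_text(json.dumps(self.long_term))

    def note(self, turn: str) -> None:
        """Record a turn of the current session only."""
        self.short_term.append(turn)

    def snapshot(self, environment: dict) -> dict:
        """Combine both memory tiers with caller-supplied situational data."""
        return {
            "long_term": dict(self.long_term),
            "short_term": list(self.short_term),
            "environment": dict(environment),
        }
```

Creating a second `AssistantMemory` on the same path models a new session: the stored preferences come back, the turn-by-turn history does not, and the environment is supplied fresh on each call to `snapshot`.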

Case Study: Building a Context-Aware Research Assistant

Imagine designing an AI for academic literature review:

- Ingest Sources → Parse and semantically index papers.

- Track Goals → Persist the researcher’s topic and goals across sessions.

- Inject Memory → Bring back prior questions and notes.

- Summarize & Compare → Offer evolving synthesis, not isolated answers.

- Manage Context Window → Use scoring to decide which citations stay in focus.

The result: a dynamic assistant that evolves alongside the researcher instead of resetting each session.
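The last step, deciding which citations stay in focus, could be sketched as a score plus a greedy fill of the token budget. Everything here is an assumption for illustration: the `Citation` fields, the keyword-overlap relevance measure, the linear staleness penalty, and the `recency_weight` value. A real assistant would more likely rank by embedding similarity.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    title: str
    abstract: str
    tokens: int          # estimated size when injected into the prompt
    last_used_turn: int  # most recent turn that referenced this paper


def score(c: Citation, topic: str, current_turn: int, recency_weight: float = 0.1) -> float:
    """Higher is better: topic overlap minus a penalty for staleness."""
    topic_words = set(topic.lower().split())
    text_words = set(f"{c.title} {c.abstract}".lower().split())
    relevance = len(topic_words & text_words) / max(1, len(topic_words))
    staleness = current_turn - c.last_used_turn
    return relevance - recency_weight * staleness


def select_citations(citations: list, topic: str, current_turn: int, budget: int) -> list:
    """Greedily keep the best-scoring citations that still fit the token budget."""
    ranked = sorted(citations, key=lambda c: score(c, topic, current_turn), reverse=True)
    chosen, used = [], 0
    for c in ranked:
        if used + c.tokens <= budget:
            chosen.append(c)
            used += c.tokens
    return chosen


# Made-up library entries, for demonstration only.
library = [
    Citation("Graph neural networks survey", "overview of graph networks", 300, 9),
    Citation("Protein folding", "neural methods", 300, 10),
    Citation("Graph attention networks", "attention on graph data", 500, 2),
]
kept = select_citations(library, "graph neural networks", current_turn=10, budget=700)
print([c.title for c in kept])
```

With a 700-token budget, the survey and the recently used protein paper stay in focus; the attention paper is relevant but stale and too large to fit alongside them.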

From Inputs to Intelligent Systems

The future of AI is not about bigger models or clever prompts—it’s about architected intelligence.

Contextual Engineering is emerging as the backbone of enterprise AI, enabling systems that:

- Carry forward memory

- Adapt to user identity and goals

- Scale across teams and organizations

- Deliver consistent, reliable outputs

Conclusion

AI that forgets is useful, but limited. AI that remembers and adapts becomes transformative.

Contextual Engineering is not a buzzword—it’s a design philosophy reshaping how we build intelligent systems. It moves us from stateless interactions to context-driven architectures that feel natural, reliable, and human-like.