K3s in Action: Why We Chose It First, and How It Scales with Us

Low Learning Curve for Devs and Infra Teams

- Bundles the core Kubernetes components into a single binary under 100 MB.

- Uses SQLite as the default datastore, with embedded etcd available for HA setups.

- Designed to run on minimal hardware (512MB RAM is sufficient for simple clusters).

- Built-in load balancer, local storage, and simplified TLS handling.

- Developers can run the same orchestrator locally as in staging or production.

- Teams can quickly boot up environments on edge VMs or low-cost cloud instances.

- The DevOps team can focus engineering effort on security, CI/CD, and system design instead of debugging the Kubernetes control plane.

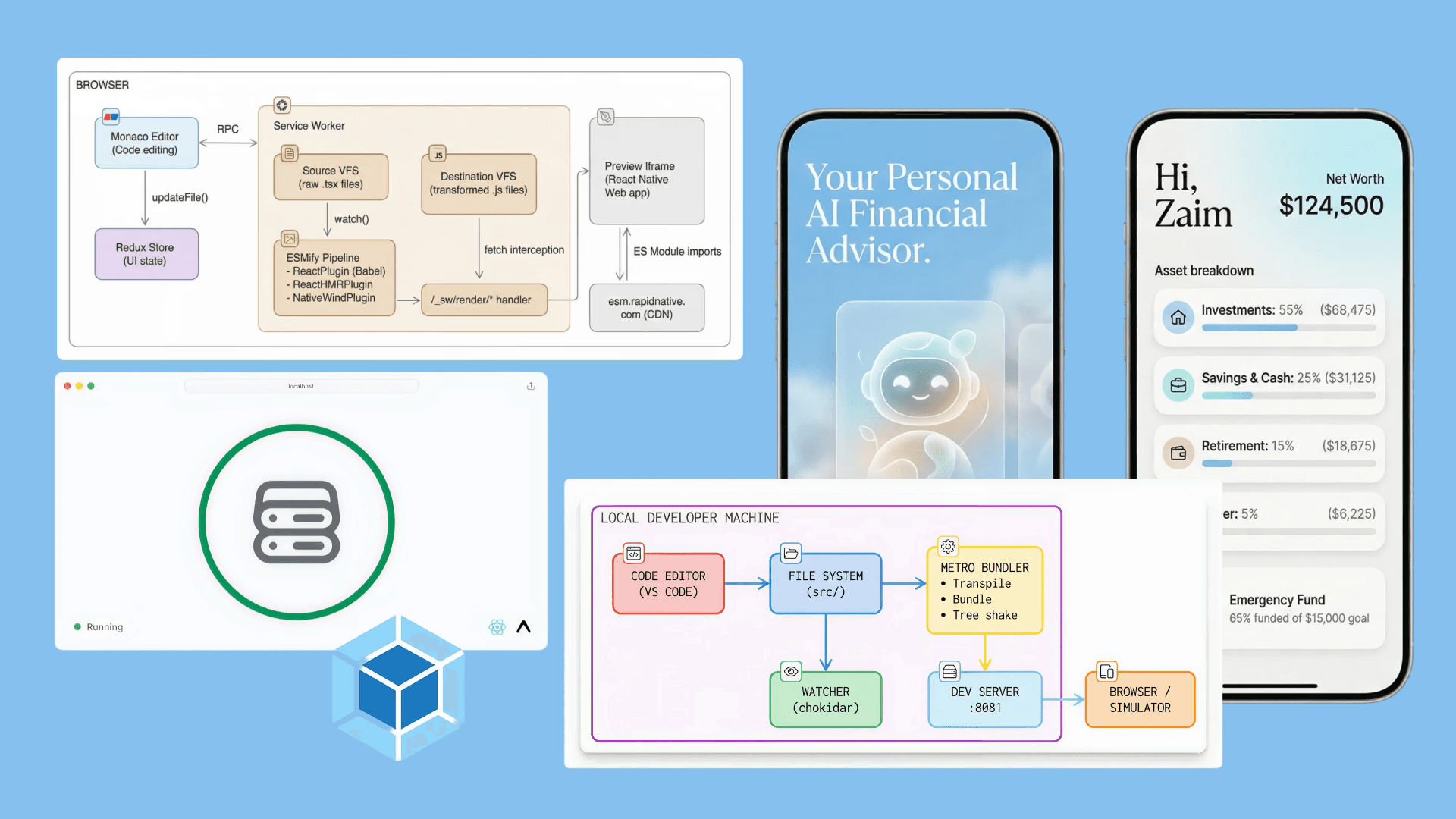

How We Use K3s Technically

Bootstrapping a Cluster

- We use Traefik (bundled) or nginx-ingress depending on team preference.

- cert-manager issues TLS certs via Let’s Encrypt.

- Internal DNS handled by CoreDNS.
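
A bootstrap along these lines is easy to script. The sketch below is illustrative rather than our exact tooling: it assembles a K3s server install command, assuming the upstream install script at `https://get.k3s.io` and the `--cluster-init`, `--disable`, and `--tls-san` server flags. The function name and its parameters are hypothetical.

```python
import shlex

INSTALL_URL = "https://get.k3s.io"  # upstream K3s install script


def k3s_server_install_command(use_bundled_traefik=True, ha=False, tls_sans=()):
    """Return a shell command string that installs a K3s server."""
    args = ["server"]
    if ha:
        # Start with embedded etcd so more servers can join later.
        args.append("--cluster-init")
    if not use_bundled_traefik:
        # Skip the packaged Traefik when a team prefers nginx-ingress.
        args += ["--disable", "traefik"]
    for san in tls_sans:
        # Extra hostnames or IPs to include on the API server certificate.
        args += ["--tls-san", san]
    quoted = " ".join(shlex.quote(a) for a in args)
    return f"curl -sfL {INSTALL_URL} | sh -s - {quoted}"


if __name__ == "__main__":
    print(k3s_server_install_command(use_bundled_traefik=False, ha=True))
```

Keeping the ingress choice as a single boolean means the Traefik and nginx-ingress variants differ only in one flag, which makes the two setups easy to compare.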

CI/CD Integration

CI/CD Flow Overview

- Step 1: Build & Push Artifacts

- Step 2: Helm/Manifest Deployment

- Step 4: Health Checks & Rollbacks
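
The build, deploy, and health-check-with-rollback steps can be sketched as a small driver. This is a minimal sketch under several assumptions that are not from our actual pipeline: Docker is the image builder, the chart exposes an `image.tag` value, and the Deployment is named after the Helm release. The function names are hypothetical; the `helm` and `kubectl` subcommands and flags used are standard ones.

```python
import subprocess


def plan_deploy(image, tag, release, chart, namespace):
    """Return the commands for build/push, Helm deploy, health check, rollback."""
    ref = f"{image}:{tag}"
    return {
        "build": ["docker", "build", "-t", ref, "."],
        "push": ["docker", "push", ref],
        "deploy": [
            "helm", "upgrade", "--install", release, chart,
            "--namespace", namespace,
            "--set", f"image.tag={tag}",  # assumes the chart has image.tag
            "--wait", "--timeout", "5m",
        ],
        "health": [
            # Assumes the Deployment shares the release name.
            "kubectl", "rollout", "status", f"deployment/{release}",
            "--namespace", namespace, "--timeout=120s",
        ],
        "rollback": ["helm", "rollback", release, "--namespace", namespace, "--wait"],
    }


def run_pipeline(plan, run=subprocess.call):
    """Run the steps in order; roll back if the deploy or health check fails.

    `run` takes a command list and returns an exit code, so it can be
    replaced with a fake in tests or dry runs.
    """
    for step in ("build", "push"):
        if run(plan[step]) != 0:
            return f"failed:{step}"
    for step in ("deploy", "health"):
        if run(plan[step]) != 0:
            run(plan["rollback"])
            return f"rolled-back:{step}"
    return "ok"
```

Without a revision argument, `helm rollback` returns to the previous release, so this sketch does not cover a failed first-ever install, where there is nothing to roll back to.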

Security and Observability

- K3s bootstraps TLS and renews its certificates automatically; encryption of Secrets at rest is enabled through the `--secrets-encryption` server flag.

- We ship logs via Fluent Bit to a central Loki/Grafana stack.

- Prometheus scrapes metrics from pods and node exporters.
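
To make the scraping side concrete: what Prometheus pulls from a pod is plain text in its exposition format. The sketch below renders a single counter in that format using only the standard library. The metric name, labels, and helper function are hypothetical, label-value escaping is deliberately left out, and a real service would more likely use a Prometheus client library.

```python
def render_counter(name, help_text, samples):
    """Render one counter in the Prometheus text exposition format.

    `samples` maps a tuple of (label, value) pairs to the counter value.
    Label values are assumed to need no escaping.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        label_str = ",".join(f'{k}="{v}"' for k, v in labels)
        suffix = f"{{{label_str}}}" if label_str else ""
        lines.append(f"{name}{suffix} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_counter(
        "http_requests_total",
        "Total HTTP requests served.",
        {(("method", "GET"), ("code", "200")): 1027},
    ))
```

Serving this text on a `/metrics` path is what lets a scrape job collect it alongside the node exporter data.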

What Worked Well

Dev-Prod Parity

Speed + Simplicity

Lower Operational Burden

- No kubeadm complexities.

- Easier node recovery (just re-run the agent install).

- Control plane restarts or config reloads take seconds—not minutes.
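
Re-running the agent install is a one-liner, which is why node recovery stays simple. A minimal sketch, assuming the upstream install script and its `K3S_URL` and `K3S_TOKEN` environment variables; the helper name, server URL, and token below are placeholders, and a real setup would read the token from a secret store rather than pass it around as a string.

```python
import shlex


def k3s_agent_install_command(server_url, token):
    """Return a shell command that (re)installs a K3s agent and joins it."""
    env = f"K3S_URL={shlex.quote(server_url)} K3S_TOKEN={shlex.quote(token)}"
    return f"curl -sfL https://get.k3s.io | {env} sh -"


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(k3s_agent_install_command("https://k3s.internal.example:6443", "placeholder-token"))
```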

Planning to Scale

We plan to revisit the cluster design when:

- We move into multiple regions or AZs.

- RPS rises above expected thresholds.

- We need tighter integrations with AWS-native services (like ALB Ingress or IRSA for IAM roles).

| Phase | Cluster Design | Notes |
|---|---|---|
| Now | K3s (HA clusters) | Lightweight, fast iterations, internal + some external services |
| Mid | K3s + EFS/EBS + External DB | Add managed storage, move DBs out of cluster |
| Scale | Migrate to EKS | Keep manifest compatibility, adopt autoscaling, ALB, IAM roles, etc. |

We’re designing our Helm charts, manifests, and secrets management to be cloud-agnostic, so EKS migration is mostly about bootstrapping infra—not rewriting workloads.
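
One way to picture the cloud-agnostic approach is a shared base of values with a thin per-cluster overlay. The sketch below is an assumption-laden illustration, not our chart: the keys, the registry name, and the `gp3` storage class are hypothetical, while `traefik` and `local-path` reflect K3s defaults and `alb` is the ingress class used by the AWS Load Balancer Controller.

```python
import copy


def deep_merge(base, override):
    """Return base with override applied recursively; inputs are not mutated."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical base values, matching what a K3s cluster offers out of the box.
BASE = {
    "image": {"repository": "registry.example.com/api", "tag": "latest"},
    "ingress": {"enabled": True, "className": "traefik"},
    "persistence": {"storageClass": "local-path", "size": "5Gi"},
}

# Hypothetical EKS overlay: only the cluster-facing settings change.
EKS = {
    "ingress": {"className": "alb"},
    "persistence": {"storageClass": "gp3"},
}

if __name__ == "__main__":
    print(deep_merge(BASE, EKS))
```

Helm performs this kind of merge itself when given several `-f` values files, with later files taking precedence, so the workload templates can stay identical across both clusters.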

Why It’s Not Just a Dev-Test Cluster

- Handles read-heavy APIs under load.

- Hosts staging + internal sandbox environments.

- Developer-bootstrapped clusters are used to test real IaC and CD flows.

End-to-End Flow

TL;DR:

- K3s helped us deploy fast and stay production-ready for MVPs and internal tooling.

- Simple setup, dev-prod parity, and low ops overhead made it ideal.

- We're future-proofing with Helm/manifest reusability for EKS migration when scale hits.

Final Thoughts

- CI/CD automation

- Observability

- Multi-tenancy

- Secrets and security