Designing A Real-Time AI Pipeline For Human-like Video Conversations

Introduction

The Vision: Modular, Real-Time Video AI for the Next Generation

- Modern pipelines like Pipecat can orchestrate complex AI flows with plug-and-play flexibility.

- WebRTC and Next.js can deliver seamless, real-time user experiences in the browser.

- Integrating video AI into conversations is not just possible, but practical for future products.

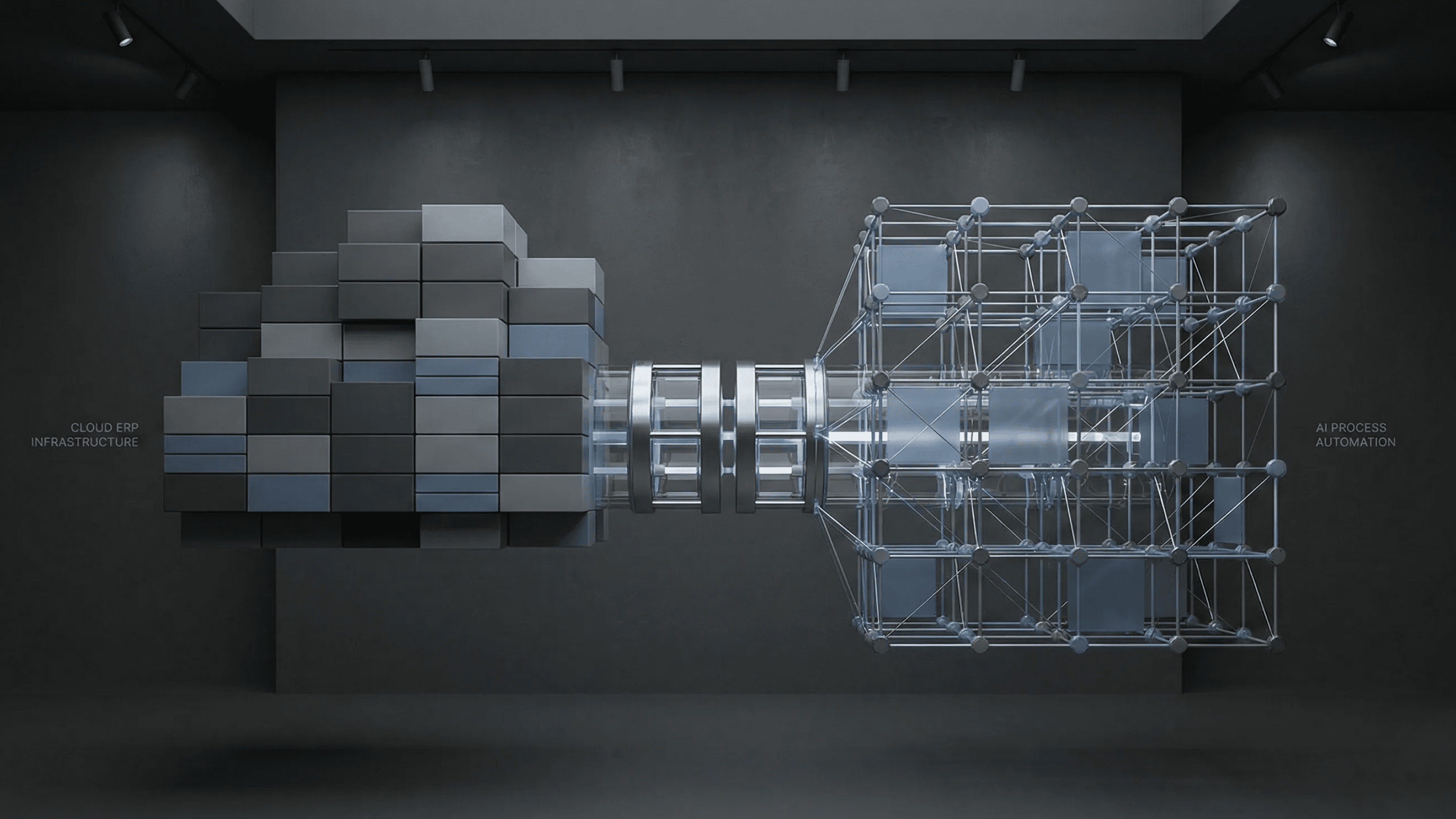

Architecture Overview

Key components:

- Frontend: Next.js 13 (React 18) for fast, interactive UI and SSR capabilities.

- Backend: FastAPI (Python) for async signaling, static file serving, and API endpoints.

- Media Transport: SmallWebRTCTransport (via pipecat) abstracts away ICE/SDP headaches and enables real-time audio/video.

AI Services:

- STT: Deepgram Streaming for sub-300 ms transcription.

- LLM: Google Gemini for long-context, high-accuracy dialogue.

- TTS: Cartesia for natural, high-fidelity speech.

- Video: Tavus for fast, lip-synced avatar generation.

Building the Pipeline

At the heart of our proof-of-concept is a Pipecat-driven, modular media pipeline that moves from browser-captured audio/video to a fully rendered, lip-synced AI avatar and back again, all in real time.
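To make the flow concrete, here is a minimal sketch of a stage-by-stage pipeline in plain Python. It is not Pipecat's API: `SimplePipeline`, `swap`, and the `fake_*` stages are hypothetical names invented for illustration, and each stage is a stand-in that only tags its input so the ordering is visible.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stage type: takes the previous stage's output, returns its own.
Stage = Callable[[str], str]


@dataclass
class SimplePipeline:
    """Illustrative only: runs stages in order, passing each result onward."""
    stages: List[Stage] = field(default_factory=list)

    def run(self, payload: str) -> str:
        for stage in self.stages:
            payload = stage(payload)
        return payload

    def swap(self, index: int, stage: Stage) -> None:
        # Replace one stage without touching the others.
        self.stages[index] = stage


# Stand-in stages; real ones would call the STT, LLM, TTS, and video services.
def fake_stt(audio: str) -> str:
    return f"text({audio})"


def fake_llm(text: str) -> str:
    return f"reply({text})"


def fake_tts(reply: str) -> str:
    return f"speech({reply})"


def fake_video(speech: str) -> str:
    return f"avatar({speech})"


pipeline = SimplePipeline([fake_stt, fake_llm, fake_tts, fake_video])
print(pipeline.run("mic-audio"))
```

Because every stage shares the same call shape, replacing one provider is a single `pipeline.swap(1, other_llm)` call, and the rest of the chain stays as it was.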

Why Pipecat?

- Plug-and-Play Components – Swap STT, LLM, or TTS modules without touching the rest of the pipeline.

- Back-Pressure Awareness – Dynamically adapts to load, preventing buffer overflows and ensuring smooth audio/video playback even under high concurrency.

- Frame-Level Observability – Emits granular metrics per stage (e.g., STT delay, LLM token generation speed, TTS synthesis time) for proactive performance tuning.

- Extensible by Design – Adding emotion detection, sentiment scoring, or domain-specific reasoning is as simple as inserting another pipeline block.
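The back-pressure and per-stage metrics ideas can be sketched with nothing but `asyncio`. This is a generic illustration, not how Pipecat implements them: `run_stage`, `run_pipeline`, the `fake_*` workers, and the queue size are all assumptions made for the example. Bounded queues make a fast stage wait for a slow one, and each stage records how long it spent on every frame.

```python
import asyncio
import time
from collections import defaultdict

_DONE = object()  # end-of-stream marker passed from stage to stage


async def run_stage(name, work, inbox, outbox, metrics):
    """Consume frames from inbox, time the work, and forward the results."""
    while True:
        frame = await inbox.get()
        if frame is _DONE:
            await outbox.put(_DONE)
            return
        start = time.perf_counter()
        result = await work(frame)
        metrics[name].append(time.perf_counter() - start)
        # put() suspends while the downstream queue is full: the back-pressure.
        await outbox.put(result)


async def run_pipeline(frames, stages, maxsize=2):
    metrics = defaultdict(list)
    inboxes = [asyncio.Queue(maxsize=maxsize) for _ in stages]
    sink = asyncio.Queue()  # unbounded, so the last stage never blocks
    tasks = []
    for i, (name, work) in enumerate(stages):
        outbox = inboxes[i + 1] if i + 1 < len(stages) else sink
        tasks.append(
            asyncio.create_task(run_stage(name, work, inboxes[i], outbox, metrics))
        )
    for frame in frames:
        await inboxes[0].put(frame)  # producer waits when stage 0 falls behind
    await inboxes[0].put(_DONE)
    await asyncio.gather(*tasks)
    outputs = []
    while (item := sink.get_nowait()) is not _DONE:
        outputs.append(item)
    return outputs, dict(metrics)


async def fake_stt(frame):
    await asyncio.sleep(0.001)  # stand-in for a fast stage
    return f"text:{frame}"


async def fake_tts(text):
    await asyncio.sleep(0.005)  # stand-in for a slower stage
    return f"audio:{text}"


outputs, metrics = asyncio.run(
    run_pipeline(["f0", "f1", "f2"], [("stt", fake_stt), ("tts", fake_tts)])
)
for name, samples in metrics.items():
    print(f"{name}: {len(samples)} frames, max {max(samples) * 1000:.1f} ms")
```

A small `maxsize` keeps memory flat under load at the cost of stalling upstream stages sooner; the right value depends on how much buffering the conversation can tolerate.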

WebRTC + Next.js: Real-Time Frontend Stack

- WebRTC for Media Transport – Enables direct, low-latency audio/video streaming between the browser and backend, reducing round-trip delays compared to traditional HTTP-based media flows.

- Next.js 13 + React 18 – Gives us server-side rendering (SSR) for initial load speed, concurrent React for responsiveness, and a modern developer experience for rapid iteration.

- Media I/O Integration – Our React components handle microphone, camera, and playback streams while seamlessly interfacing with the WebRTC transport layer.

Video AI Integration: Beyond Voice

- Lip-Synced, Expressive Avatars – Synchronizes facial movement with the generated speech, making interactions more natural and engaging.

- Low Overhead, High Impact – Video synthesis is batched and streamed back with minimal latency overhead (~500–1,000 ms), preserving conversational flow.

- Scalable Personalization – Avatars can be branded, personalized per user, or adapted to specific cultural and linguistic contexts.

Real-Time AI Conversation Pipeline: Frame & Packet Flow

High-Level Latency Overview

| Component | Service | Typical Latency | Notes |
|---|---|---|---|
| STT | Deepgram Streaming | ~200–300 ms | Low-latency transcription from audio to text under optimal network conditions. |
| LLM | Google Gemini | ~200–500 ms | Latency depends on token count and compute provisioning; optimized APIs or batching help reduce time. |
| TTS | Cartesia | ~200–400 ms | Generates high-fidelity, natural-sounding speech. |
| Video | Tavus | ~500–1,000 ms | Fast lip-synced avatars; varies with resolution, duration, and GPU provisioning. |
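As a rough sanity check, the per-stage ranges can be added up into an end-to-end budget. The sketch below assumes the stages run strictly one after another with no overlap, which is a simplification: streaming stages may overlap in practice, so the real time to first avatar frame can be lower. The STT upper bound is taken as 300 ms, matching the sub-300 ms figure quoted for Deepgram earlier; `LATENCY_MS` and `sequential_budget` are names made up for this example.

```python
from typing import Dict, Tuple

# (low, high) latency per stage in milliseconds, from the overview figures.
LATENCY_MS: Dict[str, Tuple[int, int]] = {
    "stt": (200, 300),
    "llm": (200, 500),
    "tts": (200, 400),
    "video": (500, 1000),
}


def sequential_budget(stages: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Sum the low and high ends, assuming stages do not overlap."""
    best = sum(low for low, _ in stages.values())
    worst = sum(high for _, high in stages.values())
    return best, worst


best, worst = sequential_budget(LATENCY_MS)
print(f"End-to-end (no overlap): {best}-{worst} ms")
```

Under that no-overlap assumption the budget comes to roughly 1.1 to 2.2 seconds per turn, with video synthesis contributing the largest share.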

High-Level Pricing Overview

| Component | Pricing Model | Cost (per minute) | Notes |
|---|---|---|---|
| Deepgram STT | Standard streaming tier: $0.08 per audio minute | $0.08 | Published list price for Speech-to-Text API streaming mode. |
| Google Gemini | Gemini 2.5 Flash paid tier: $0.30 input + $2.50 output per 1M tokens (~750 tokens ≈ 1 min) | $0.0021 | (0.30 / 1,000,000) × 750 + (2.50 / 1,000,000) × 750 ≈ $0.0021/min. |
| Cartesia TTS | Startup plan: $49/month for 1.25M credits (1 credit = 1 char; ~750 chars ≈ 1 min) | $0.0294 | $49 / (1,250,000 ÷ 750) ≈ $0.0294 per minute of TTS at Startup tier. |
| Tavus Video | Starter plan video generation overage: $1.10 per minute | $1.10 | Pay-as-you-go overage rate for AI video generation minutes beyond included quota. |
| Total | | $1.2115/min | Sum of individual per-minute costs. |
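The per-minute figures can be recomputed from the listed prices with a few lines of Python. This is only a sketch of the arithmetic: it reuses the article's own assumptions (about 750 input tokens and 750 output tokens per minute for the LLM, about 750 characters per minute for TTS), and the function and key names are made up for the example.

```python
def per_minute_costs() -> dict:
    """Recompute each component's cost per conversation minute in USD."""
    units_per_minute = 750  # assumed tokens (LLM) and characters (TTS) per minute

    stt = 0.08  # Deepgram: listed price per audio minute

    # Gemini 2.5 Flash: $0.30 per 1M input tokens, $2.50 per 1M output tokens.
    llm = (0.30 / 1_000_000) * units_per_minute + (2.50 / 1_000_000) * units_per_minute

    # Cartesia Startup plan: $49 buys 1.25M credits, 1 credit per character.
    minutes_in_plan = 1_250_000 / units_per_minute
    tts = 49 / minutes_in_plan

    video = 1.10  # Tavus: overage rate per generated minute

    costs = {"stt": stt, "llm": llm, "tts": tts, "video": video}
    costs["total"] = sum(costs.values())
    return costs


costs = per_minute_costs()
for name, usd in costs.items():
    print(f"{name:>5}: ${usd:.4f}/min")
```

Video generation accounts for most of the total, so the per-minute cost is far more sensitive to the Tavus rate than to the token or character assumptions.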

Scope and Reuse: Where This Pipeline Can Go

Demo in Action: AI Conversational Interview

Other High-Impact Applications

Customer Support

Healthcare and Therapy

Education and Training

Conclusion: Takeaways for Builders & Visionaries

- Modularity is leverage – Pipelines like Pipecat let you adapt, swap, and scale at the speed of innovation.

- Latency is UX – Every 100 ms shapes the user’s experience. Tune it like your product depends on it, because it does.

- Observability wins – Measure everything, or you’re flying blind.

- Video is the next frontier – Human-like avatars are now practical, scalable, and game-changing.

Whether you are building the next breakthrough or deploying AI to transform your business, now is the time to act.

Hope you find this article useful. Thanks and happy learning!