Offline-First Flutter: Implementation Blueprint for Real-World Apps

Part I: Core Architecture & Data Persistence

The Offline-First Philosophy

Offline-first is an architectural paradigm that treats network connectivity as an enhancement rather than a prerequisite. It represents a fundamental shift from the traditional "online-first" model, where an active internet connection is assumed. In this paradigm, the local device database becomes the primary, authoritative source of truth, enabling the application to remain fully functional, performant, and reliable regardless of network status. This approach prioritizes local data access, delivering instantaneous user feedback and leveraging asynchronous synchronization to eventually align with a remote server.

Adopting this model is a foundational decision that impacts the entire application stack, from UI and state management to backend API design. It is not an incremental feature to be added later but a core design principle that must be established from the outset. Retrofitting offline capabilities onto an online-first application often requires a complete and costly re-architecture because the fundamental data flow is inverted. The benefits extend beyond simply functioning without a connection; by eliminating network latency from the user's critical path, the app feels significantly faster and more responsive even on high-speed networks. This builds a deep sense of trust and reliability, as user-generated data is always captured and never lost due to a dropped connection.

Key Principles:

- Local Data is Primary: The on-device database is the application's source of truth. The UI reads from and writes to the local database directly, making interactions instantaneous. The server is treated as a secondary replica.

- Network is for Synchronization: The network is used opportunistically and asynchronously to sync local changes with a remote backend and to receive updates from other clients. This process happens in the background, decoupled from the user interface.

- Seamless UX: The application provides a fast, reliable, and uninterrupted experience. By eliminating network-induced delays for most operations, the ubiquitous loading spinner becomes a rare exception rather than the norm, leading to a superior user experience.

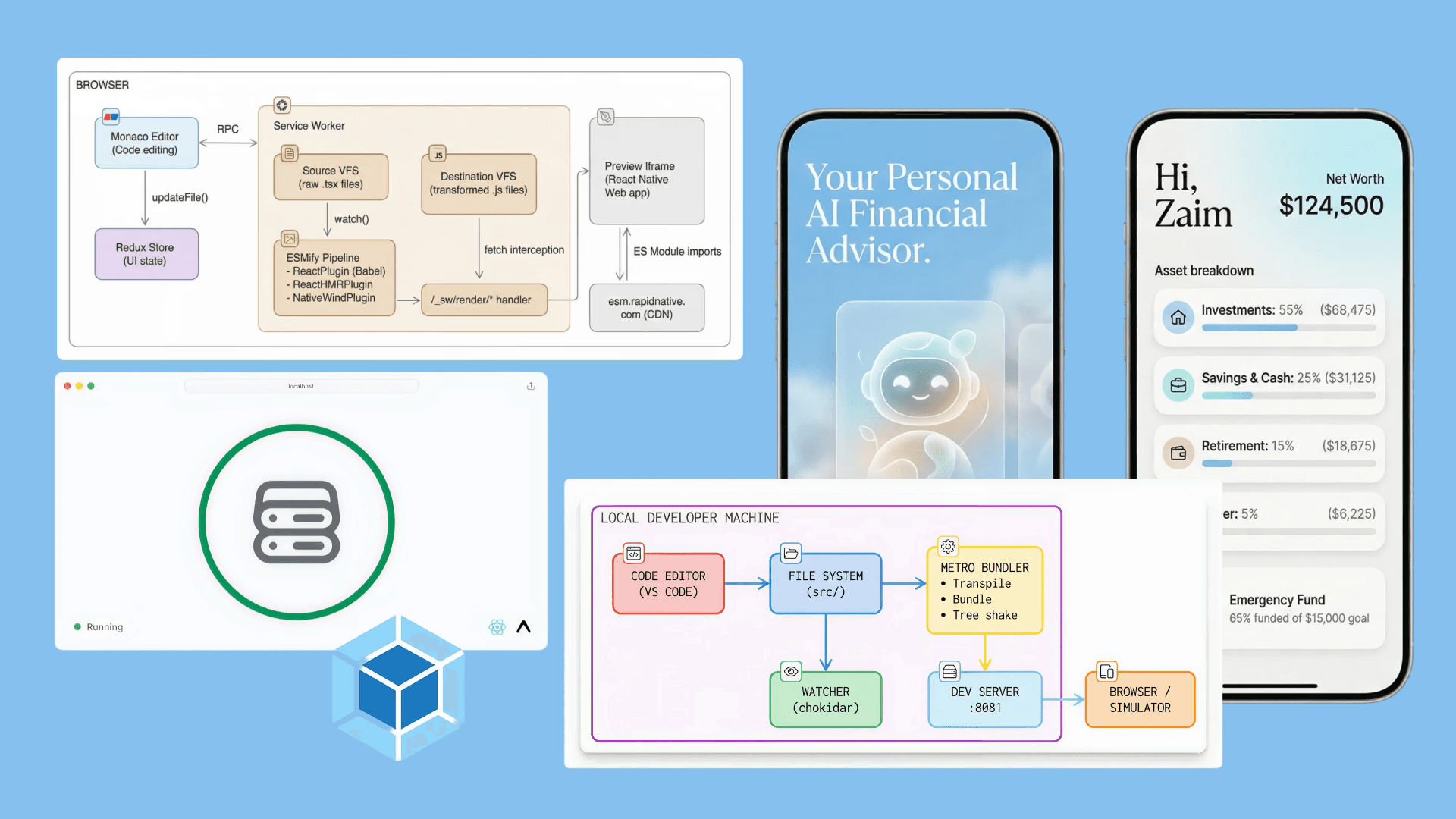

Architectural Blueprint: The Data Flow

A robust offline-first architecture requires a clear separation of concerns, managing the flow of data between the UI, a central repository, the local database, and a background synchronization engine. Each layer has a distinct responsibility, creating a modular and testable system.

Architecture Diagram: User Action Write Procedure:

Architecture Diagram: User Action Read Procedure:

- UI Layer: The user interacts with widgets. Actions (e.g., button presses, form submissions) are sent to the state management layer as events or function calls. This layer is responsible for presentation only and contains no business logic.

- State Management (BLoC/Riverpod): Manages UI state and communicates user intent to the Repository. It subscribes to data streams from the repository to update the UI reactively whenever data changes, without needing to know the origin of the change.

- Repository Pattern: Acts as a data gatekeeper and a facade for the entire data layer. It abstracts the data sources, providing a clean, consistent API for the application's business logic. Its primary responsibility is to orchestrate the flow of data between the application and the local database. This abstraction is key to maintainability and allows the underlying database technology to be swapped without affecting the rest of the app.

- Local Database: The on-device source of truth (e.g., Drift, Isar). It stores all application data, user-generated content, and the synchronization queue. Its performance is critical to the overall responsiveness of the application.

- Sync Engine: A background process, entirely decoupled from the UI, that is responsible for communicating with the remote API. It pushes a queue of local changes to the server and pulls remote updates, writing them directly into the local database. The UI then updates automatically via its reactive stream from the repository.

- Remote API: The backend server that persists data centrally and communicates with other clients. Its design must support an offline-first client, for instance, by accepting batched changes, handling versioning to aid in conflict resolution, and providing endpoints for delta-based synchronization (sending only what has changed).

Choosing a Local Database

The choice of a local database is a critical, long-term decision that heavily influences performance, development complexity, and scalability. The primary trade-off is between relational (SQL) and non-relational (NoSQL) data models. For production-grade Flutter apps, native-backed databases (using C, Rust, etc.) consistently outperform pure-Dart solutions for computationally intensive tasks, making them the recommended choice.

Database Technology Comparison:

| Feature | Drift (SQLite) | Isar | ObjectBox |
|---|---|---|---|
| Data Model | Relational (SQL) | NoSQL (Object) | NoSQL (Object) |
| Strengths | Type-safe SQL, complex queries, robust migrations, and reactive streams. | Fast performance, rich query API, multi-isolate support. | Extremely fast, built-in sync solution (commercial), ACID compliance. |
| Use Case | Complex, structured data; enterprise apps requiring data integrity. | Semi-structured data that requires fast, complex queries. | High-performance needs; teams wanting a pre-built sync solution. |
| Encryption | Integrates with sqlcipher_flutter_libs. | No built-in support. | Transport-level encryption for Sync; manual field encryption. |

Performance Benchmark Comparison (Lower is Better)

In a benchmark that batch-wrote 1,000 objects, Drift took 47 ms, Isar 8 ms, and ObjectBox 18 ms. The test was run in a macOS application, and the code is available on GitHub.

Decision Framework:

- For Maximum Type Safety & Relational Integrity: Choose Drift. It is ideal for applications with complex, structured data where query safety, explicit schemas, and robust, testable migrations are paramount. The compile-time generation of type-safe code eliminates an entire class of runtime errors common with raw SQL.

- For High-Performance NoSQL: Choose Isar or ObjectBox. Both are excellent for semi-structured or object-oriented data models. Isar offers a powerful, open-source solution with flexible indexing and querying. ObjectBox provides top-tier performance, true ACID guarantees through MVCC, and a compelling commercial sync engine that can save significant development time. The choice may come down to budget vs. reliance on community maintenance.

Part II: The Repository & Sync Engine

The Repository Pattern: Your Data Gatekeeper

Read/Write Lifecycle:

2. Data Writes: The Optimistic UI

When the user creates, updates, or deletes data, the change is written directly to the local database. Because the UI is already subscribed to the repository's reactive .watch() stream (the read path, step 1), it updates instantly, creating a fast, "optimistic" experience.

- User Action: The user taps a button to add a new task.

- Repository Write: The repository's addTask method is called, which inserts the new task directly into the local database.

- Stream Emits: The .watch() stream (from step 1) detects the database change and automatically emits a new, updated list of tasks.

- UI Updates: The StreamBuilder, listening to the stream, receives the new list and rebuilds itself to display the new task.

This entire cycle happens almost instantaneously, without any loading spinners or network delays.
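
The write cycle above can be sketched in plain Dart. This is a minimal illustration rather than the article's implementation: `InMemoryTaskStore` is a hypothetical stand-in for a reactive database table (a real app would rely on the watch queries of Drift or Isar), and the `Task` fields are assumed for the example.

```dart
import 'dart:async';

/// A locally stored task. Field names are illustrative.
class Task {
  const Task(this.id, this.title);
  final String id;
  final String title;
}

/// In-memory stand-in for a reactive database table (hypothetical).
class InMemoryTaskStore {
  final List<Task> _rows = [];
  final StreamController<void> _changed = StreamController<void>.broadcast();

  /// Read-only copy of the current rows.
  List<Task> get snapshot => List.unmodifiable(_rows);

  void insert(Task task) {
    _rows.add(task);
    _changed.add(null); // notify every active watcher
  }

  /// Emits the current rows on subscription, then again after each change.
  Stream<List<Task>> watch() => Stream.multi((controller) {
        controller.add(snapshot);
        final sub = _changed.stream.listen((_) => controller.add(snapshot));
        controller.onCancel = sub.cancel;
      });
}

/// The repository is the only data API the rest of the app sees.
class TaskRepository {
  TaskRepository(this._store);
  final InMemoryTaskStore _store;

  /// Read path (step 1): the UI subscribes once and stays up to date.
  Stream<List<Task>> watchTasks() => _store.watch();

  /// Write path (step 2): a local insert only, with no network call.
  /// Real database writes are asynchronous; this is kept synchronous for brevity.
  void addTask(Task task) => _store.insert(task);
}
```

In a Flutter widget, `watchTasks()` would typically be passed to a `StreamBuilder`, so calling `addTask` is enough to trigger a rebuild.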

The Sync Engine: Ensuring Reliability

The Sync Engine reliably transmits local changes to the remote server and processes incoming updates. Its design must be resilient to network failures, app crashes, and other interruptions to guarantee that no user data is ever lost.

Core Components:

1. Transactional Outbox Pattern: This powerful pattern solves the "dual write" problem, where an app might save to the local database but crash before making the API call, leading to lost data. When a user saves a change, two operations occur within a single, atomic database transaction:

- The data is written to its primary table (e.g., tasks).

- A corresponding event describing the change is written to a sync_queue table.

This atomicity guarantees that the intent to sync is never lost. The sync_queue becomes a durable, persistent to-do list for the sync engine. A typical queue entry would contain a payload (the data to sync), an action type (CREATE, UPDATE, DELETE), and a retry counter.
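
To make the atomic double write concrete, here is a small pure-Dart sketch. `LocalDb` is a hypothetical in-memory stand-in with a snapshot-based `transaction` helper; a real database such as Drift provides its own transaction API, and the entry fields simply mirror the payload, action type, and retry counter described above.

```dart
import 'dart:convert';

enum SyncAction { create, update, delete }

/// One row of the sync_queue table.
class SyncQueueEntry {
  SyncQueueEntry({required this.action, required this.payload});
  final SyncAction action;
  final String payload; // JSON-encoded data to send to the server
  int retryCount = 0;
}

/// In-memory stand-in for a database with a tasks table and a sync_queue table.
class LocalDb {
  final Map<String, Map<String, Object?>> tasks = {};
  final List<SyncQueueEntry> syncQueue = [];

  /// Runs [body] atomically: if it throws, both tables are restored.
  /// The snapshot is shallow, which is enough here because rows are replaced,
  /// never mutated in place.
  void transaction(void Function() body) {
    final tasksBefore = Map.of(tasks);
    final queueBefore = List.of(syncQueue);
    try {
      body();
    } catch (_) {
      tasks
        ..clear()
        ..addAll(tasksBefore);
      syncQueue
        ..clear()
        ..addAll(queueBefore);
      rethrow;
    }
  }
}

/// Writes the task and its outbox entry together, or neither.
void saveTask(LocalDb db, Map<String, Object?> task) {
  db.transaction(() {
    final id = task['id'] as String;
    final action =
        db.tasks.containsKey(id) ? SyncAction.update : SyncAction.create;
    db.tasks[id] = task;
    // jsonEncode throws for unsupported values, which rolls back the write above.
    db.syncQueue.add(SyncQueueEntry(action: action, payload: jsonEncode(task)));
  });
}
```

If encoding the payload fails, the task row written a moment earlier is rolled back too, so the two tables never disagree about what still needs syncing.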

The Sync Engine: Processing the Outbox

The sync engine is the component that processes the sync_queue table created by the Transactional Outbox Pattern. Here's how it works:

Architecture:

Key Features:

5. API Integration: The engine calls your backend API:

Integration with Existing Components:

- Transactional Outbox: Creates entries in sync_queue

- Background Worker: Processes entries from sync_queue

- Exponential Backoff: Implemented in the retry logic

- WorkManager: Can trigger the sync engine on network changes.

Why Database Table Instead of Traditional Queue?

| Traditional Queue | Database Table (Our Approach) |
|---|---|
| Lost on crash | Survives app crashes |
| Memory only | Persistent storage |
| No retry tracking | Built-in retry counter |
| No status history | Full audit trail |
| Complex setup | Simple SQL queries |

Example Flow:

1. User adds task → Transaction writes to both tables

2. Sync Engine polls every 30s → Finds pending items

3. Calls API for each item → Updates status (synced/failed)

4. Failed items retry up to 3 times → Then marked as failed
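
The flow above could look roughly like this in Dart. It is a sketch under assumptions: `QueueItem`, `recordResult`, and the `send` callback are hypothetical names, `send` stands in for the backend API call and is expected to throw on failure, and "retry up to 3 times" is read as one initial attempt plus three retries.

```dart
enum SyncStatus { pending, synced, failed }

/// One pending change taken from the sync_queue table.
class QueueItem {
  QueueItem(this.id, this.payload);
  final int id;
  final String payload;
  SyncStatus status = SyncStatus.pending;
  int retryCount = 0;
}

const int maxRetries = 3;

/// Applies the outcome of one attempt to the queue item.
void recordResult(QueueItem item, {required bool success}) {
  if (success) {
    item.status = SyncStatus.synced;
  } else if (item.retryCount >= maxRetries) {
    item.status = SyncStatus.failed; // retries exhausted
  } else {
    item.retryCount++; // stays pending for the next pass
  }
}

/// One pass over the queue. [send] is the (assumed) API call for one item.
Future<void> processQueue(
  List<QueueItem> queue,
  Future<void> Function(QueueItem item) send,
) async {
  final pending = queue.where((i) => i.status == SyncStatus.pending).toList();
  for (final item in pending) {
    try {
      await send(item);
      recordResult(item, success: true);
    } catch (_) {
      recordResult(item, success: false);
    }
  }
}
```

Keeping the status transition in a small synchronous function makes the retry rule easy to unit test without a network or a database.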

2. Background Worker: A dedicated background process is responsible for processing the sync_queue. The workmanager package is well suited to this, because it schedules tasks through the platform's native background schedulers, so they persist across app restarts.

- The worker runs independently of the UI lifecycle, often triggered by network connectivity changes or on a periodic schedule.

- It queries the sync_queue for pending jobs.

- It attempts to execute the API call for each job.

- On Success: The job is deleted from the sync_queue.

- On Failure: The job remains in the queue, and its retry count is incremented. The worker should use WorkManager constraints, like requiring an active network connection, to avoid unnecessary work and conserve battery.

3. Exponential Backoff & Retry Logic: Network requests can fail for transient reasons. The worker should not immediately retry a failed request. Implement an exponential backoff strategy with jitter:

- After the 1st failure, wait 2^1 seconds plus a small random jitter (for example, up to 1,000 ms).

- After the 2nd failure, wait 2^2 seconds plus jitter, and so on, up to a maximum delay.

- This prevents overwhelming a struggling server and avoids a "thundering herd" problem where many clients retry simultaneously. The retry_count column in the sync_queue table tracks this.
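
The backoff rule above fits in one small function. This is a sketch with assumed defaults: the 5-minute cap and the 1-second jitter window are illustrative values, not figures from the article.

```dart
import 'dart:math';

/// Delay before the next retry: 2^failureCount seconds plus random jitter,
/// capped at [maxDelay].
Duration backoffDelay(
  int failureCount, {
  Duration maxDelay = const Duration(minutes: 5),
  Duration maxJitter = const Duration(milliseconds: 1000),
  Random? random,
}) {
  final rng = random ?? Random();
  // Clamp the exponent so the shift can neither overflow nor go negative.
  final exponent = max(0, min(failureCount, 30));
  final base = Duration(seconds: 1 << exponent);
  final jitter =
      Duration(milliseconds: rng.nextInt(maxJitter.inMilliseconds + 1));
  final delay = base + jitter;
  return delay > maxDelay ? maxDelay : delay;
}
```

The worker would pass the item's retry_count as `failureCount`, and a seeded `Random` can be injected to make tests deterministic.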

Sync Engine Implementation

For our demo implementation, we use a simpler approach with Timer.periodic() for development purposes. In production, you would use WorkManager as described above.
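
A Timer.periodic driver of this kind might look like the following. It is an assumed shape, not the demo's actual code: `PeriodicSync` and its `runSync` callback are hypothetical names, and the 30-second default matches the polling interval in the example flow.

```dart
import 'dart:async';

/// Development-only driver that runs a sync pass on a fixed interval.
class PeriodicSync {
  PeriodicSync(this._runSync, {this.interval = const Duration(seconds: 30)});

  final Future<void> Function() _runSync;
  final Duration interval;
  Timer? _timer;
  bool _inProgress = false;

  bool get isActive => _timer?.isActive ?? false;

  /// Starts the timer; calling it again while running has no effect.
  void start() {
    _timer ??= Timer.periodic(interval, (_) => _tick());
  }

  Future<void> _tick() async {
    if (_inProgress) return; // skip this tick if the last pass is still running
    _inProgress = true;
    try {
      await _runSync();
    } catch (_) {
      // Swallowed so the timer keeps firing; per-item failures are tracked
      // through the retry counter in the queue.
    } finally {
      _inProgress = false;
    }
  }

  void stop() {
    _timer?.cancel();
    _timer = null;
  }
}
```

The `_inProgress` guard matters because a slow sync pass could otherwise overlap with the next tick and send the same queue entry twice.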

The complete sync engine code is available on this GitHub page.

Part III: Production Robustness

Conflict Resolution Strategies

A conflict occurs when the same piece of data is modified locally and on the server before a sync can occur. This is one of the most complex challenges in distributed systems. The chosen strategy depends entirely on your application's data and business rules, representing a trade-off between simplicity and data preservation.

A Spectrum of Solutions:

1. Last-Write-Wins (LWW):

- How it Works: The record with the most recent timestamp (or highest version number) wins. The other change is silently discarded.

- When to Use: Simple, non-collaborative data where data loss is acceptable (e.g., a user's personal settings, a "last read" timestamp). It's the easiest strategy to implement.

- Risk: Can lead to silent data loss. For example, a user could spend ten minutes writing a detailed note offline, only to have it overwritten by a trivial, one-word change made more recently by another user.
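
As a minimal sketch of the timestamp rule just described, assuming each record carries an `updatedAt` value (the `Versioned` wrapper is a hypothetical name):

```dart
/// A value paired with the time it was last modified.
class Versioned<T> {
  const Versioned(this.value, this.updatedAt);
  final T value;
  final DateTime updatedAt;
}

/// Keeps whichever side was modified most recently.
/// Ties go to the remote copy so that all clients converge on the same value.
Versioned<T> lastWriteWins<T>(Versioned<T> local, Versioned<T> remote) =>
    local.updatedAt.isAfter(remote.updatedAt) ? local : remote;
```

Device clocks can drift, so comparing server-assigned timestamps or version numbers is usually more dependable than comparing client clocks.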

2. Server-Side Merge:

- How it Works: The client sends its change to the server along with the data version it is based on. The server detects the conflict and applies domain-specific rules to merge the data. For example, if one user updates a contact's phone number and another updates the address for the same contact, the server can intelligently merge both non-overlapping changes into a single, coherent record.

- When to Use: Structured data where changes are often non-overlapping. This requires more sophisticated backend logic and potentially a more granular API (e.g., using JSON Patch instead of sending the entire object).
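
One way to merge non-overlapping changes is a field-level three-way merge against the version both edits started from. The sketch below is written in Dart for consistency with the rest of the article, although the text places this logic on the server. It assumes flat records with primitive values, and it resolves true conflicts in favor of the remote side while reporting them, which is only one of several possible policies.

```dart
/// Result of a merge: the combined record plus any fields changed on both sides.
class MergeResult {
  MergeResult(this.merged, this.conflictingFields);
  final Map<String, Object?> merged;
  final Set<String> conflictingFields;
}

/// Merges [local] and [remote] edits that both started from [base].
/// A missing key is treated the same as a null value.
MergeResult threeWayMerge(
  Map<String, Object?> base,
  Map<String, Object?> local,
  Map<String, Object?> remote,
) {
  final merged = <String, Object?>{};
  final conflicts = <String>{};
  for (final key in {...base.keys, ...local.keys, ...remote.keys}) {
    final b = base[key];
    final l = local[key];
    final r = remote[key];
    if (l == r) {
      merged[key] = l; // both sides agree
    } else if (l == b) {
      merged[key] = r; // only the remote side changed this field
    } else if (r == b) {
      merged[key] = l; // only the local side changed this field
    } else {
      conflicts.add(key); // both changed it differently
      merged[key] = r; // assumed policy: keep remote and report the conflict
    }
  }
  return MergeResult(merged, conflicts);
}
```

With the contact example from the text, a local phone change and a remote address change merge cleanly, and `conflictingFields` stays empty.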

3. Conflict-Free Replicated Data Types (CRDTs):

- How it Works: CRDTs are mathematically designed data structures that can be merged in any order and are guaranteed to eventually converge to the same state, eliminating conflicts by design. There are different types of CRDTs for different data (counters, sets, text, etc.).

- When to Use: Highly collaborative, real-time applications like shared documents (e.g., Google Docs), whiteboards, or multi-user design tools. They can be complex to implement correctly, but provide very strong consistency guarantees, especially in peer-to-peer or decentralized systems. The crdt package in Dart provides foundational building blocks for this approach.
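
To show what "merge in any order" means in practice, here is one of the simplest CRDTs, a grow-only counter, written from scratch in plain Dart. It does not use the crdt package mentioned above, and the replica IDs are illustrative.

```dart
import 'dart:math';

/// Grow-only counter (G-Counter): each replica increments only its own slot,
/// and merging takes the per-replica maximum.
class GCounter {
  GCounter([Map<String, int>? counts]) : _counts = {...?counts};

  final Map<String, int> _counts;

  /// Total across all replicas.
  int get value => _counts.values.fold<int>(0, (sum, n) => sum + n);

  void increment(String replicaId, [int by = 1]) {
    if (by < 0) throw ArgumentError.value(by, 'by', 'must be non-negative');
    _counts[replicaId] = (_counts[replicaId] ?? 0) + by;
  }

  /// Commutative, associative, and idempotent, so replicas converge
  /// regardless of the order or number of merges.
  GCounter merge(GCounter other) {
    final result = Map<String, int>.of(_counts);
    other._counts.forEach((id, n) {
      result[id] = max(result[id] ?? 0, n);
    });
    return GCounter(result);
  }
}
```

Two devices that count independently while offline end up with the same total once each has merged the other's state, whichever merge happens first.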

Security: Protecting Data at Rest

Storing application data locally introduces the critical responsibility of protecting it from unauthorized access if a device is lost, stolen, or compromised. Security must be implemented in layers, as a single point of failure can compromise the entire dataset.

A Layered Security Model:

1. Securing Keys and Tokens:

- Problem: Storing highly sensitive data like JWTs, API keys, or database encryption keys in plain text (e.g., SharedPreferences) is a major vulnerability, as these files can be easily read on a rooted or jailbroken device.

- Solution: Use the flutter_secure_storage package. It stores values through the platform's secure storage facilities: the Keychain on iOS and Keystore-backed encryption on Android. Secrets are encrypted at rest and sandboxed per app, and on supported devices the keys that protect them are hardware-backed, so the stored values cannot be recovered simply by copying files off the device. The application can still read its own secrets at runtime, so keep them in memory only for as long as they are needed.
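
A database encryption key is a typical secret to keep in this layer. The sketch below generates one and stores it behind a small interface. `SecretStore` is a hypothetical abstraction introduced here so the example stays pure Dart; in an app it could be backed by the read and write methods of flutter_secure_storage. The key name is also an assumption.

```dart
import 'dart:convert';
import 'dart:math';

/// Minimal interface over a secure key-value store (hypothetical).
abstract class SecretStore {
  Future<String?> read(String key);
  Future<void> write(String key, String value);
}

/// Generates a random key from a cryptographically secure source,
/// encoded as URL-safe base64 so it can be stored as a string.
String generateKeyBase64([int lengthInBytes = 32]) {
  final rng = Random.secure();
  final bytes = List<int>.generate(lengthInBytes, (_) => rng.nextInt(256));
  return base64Url.encode(bytes);
}

/// Returns the stored database key, creating and saving one on first use.
Future<String> getOrCreateDatabaseKey(
  SecretStore store, {
  String name = 'db_encryption_key',
}) async {
  final existing = await store.read(name);
  if (existing != null) return existing;
  final created = generateKeyBase64();
  await store.write(name, created);
  return created;
}
```

Generating the key on the device on first launch means it never has to be shipped in the app binary or fetched over the network.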

2. Encrypting the Database:

- Problem: Even if an app is not running, the main database file (e.g., app.db) exists on the filesystem and can be copied and analyzed by an attacker with sufficient device permissions.

- Solution: Encrypt the entire database file itself using a strong key.

- Drift/SQLite: Use sqlcipher_flutter_libs, which bundles SQLCipher, an open-source SQLite extension that provides transparent, full-database 256-bit AES encryption. SQLCipher is widely used for securing SQLite databases.

- Hive: The community edition (hive_ce) has built-in AES-256 encryption support that can be enabled per-box.

- Isar/ObjectBox: Both currently lack robust, built-in, full-database encryption, a critical limitation that must be carefully considered for applications handling sensitive or regulated data (e.g., PII, PHI).

Part IV: Integration & Best Practices

Integration with State Management (Riverpod & BLoC)

The key to a clean architecture is maintaining a strict separation of concerns. The state management layer should be "dumb" about connectivity and data sources; its sole responsibility is to translate user actions into calls to the repository and to transform data streams from the repository into UI state.

Riverpod Pattern:

- Dependency Injection: Provide the singleton repository and database instances to the widget tree using Provider. This makes them globally accessible and easily mockable for testing.

- Data Exposure: Use a StreamProvider to listen to the repository's .watch() stream. This is the most idiomatic way in Riverpod to handle reactive data sources.

- UI Consumption: Use ref.watch(myStreamProvider) inside a widget. Riverpod automatically handles the stream's lifecycle and exposes its state as an AsyncValue, whose data, loading, and error cases can each be handled explicitly (for example with .when()), so stream errors surface in the UI instead of going unhandled. The UI rebuilds only when the stream emits new data.

BLoC Pattern:

- Dependency Injection: Use get_it for a service-locator approach, or provide the repository with RepositoryProvider and pass it to the BLoC/Cubit via context.read<T>() inside the BlocProvider's create callback.

- Data Exposure: In the BLoC's constructor, create a StreamSubscription to the repository's data stream. In the onData callback, a Bloc adds an event to itself, while a Cubit can emit a new state containing the updated data directly. Crucially, this subscription must be cancelled in the BLoC's close() method to prevent memory leaks.

- UI Consumption: Use a BlocBuilder or BlocListener to listen for new states and rebuild the UI accordingly. For optimistic UI rollbacks, the repository method can throw an exception on a permanent sync failure, which the BLoC can catch in a try-catch block and use to emit a specific failure state.
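
The subscribe-in-constructor, cancel-in-close rule from the BLoC pattern above can be shown without any package. `TaskListController` is a hypothetical pure-Dart stand-in for a Cubit: with the bloc package, the `state` assignment would become an `emit(...)` call and `close()` would override the base-class method.

```dart
import 'dart:async';

/// Pure-Dart stand-in for a Cubit that mirrors a repository stream.
class TaskListController {
  TaskListController(Stream<List<String>> tasks) {
    _subscription = tasks.listen(
      (items) => state = items, // a Cubit would call emit(items) here
      onError: (Object error) => lastError = error,
    );
  }

  late final StreamSubscription<List<String>> _subscription;

  /// Latest list received from the repository.
  List<String> state = const [];

  /// Last stream error, if any, for the UI to render as a failure state.
  Object? lastError;

  /// Must be called when the controller is disposed; otherwise the
  /// subscription keeps this object alive and keeps delivering events.
  Future<void> close() => _subscription.cancel();
}
```

After `close()`, further emissions from the repository no longer reach the controller, which is what prevents the leak described above.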

Final Best Practices & Scaling

- Initial Sync Strategy: For apps with large remote datasets, downloading everything on the first launch is infeasible. Implement a more sophisticated strategy: fetch a critical initial subset of data first, then lazy-load the rest as the user navigates the app. For subsequent syncs, use delta-based fetching (requesting only data that has changed since the last sync timestamp) to minimize data transfer. Always provide clear progress indicators to the user during any significant sync process.

- Managing Storage Bloat: An offline database can grow indefinitely. Implement data pruning strategies to remove old or irrelevant data (e.g., keep only the last 30 days of messages). A Least Recently Used (LRU) cache policy can be applied to non-essential data. It's also good practice to provide users with settings to see how much space the app is using and an option to clear caches.

- Testing:

- Unit Tests: Mock the repository to test your BLoCs, Notifiers, and other business logic in complete isolation from the database and network.

- Integration Tests: These are critical for an offline-first app. Write automated tests that verify the entire data pipeline: simulate toggling the network on and off while writing data, verify that the outbox queue is correctly populated and processed, test your retry logic by mocking API failures, and validate your conflict resolution strategy by seeding the database with conflicting data.
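
The delta-based fetching mentioned under Initial Sync Strategy can be sketched as a cursor that only moves forward. The names below (`Change`, `LocalCache`, `cursor`) are hypothetical, and the sketch assumes the server returns changes in ascending `updatedAt` order and marks deletions explicitly.

```dart
/// One server-side change returned by a delta endpoint.
class Change {
  const Change(
    this.id,
    this.updatedAt, {
    this.data = const {},
    this.deleted = false,
  });
  final String id;
  final DateTime updatedAt;
  final Map<String, Object?> data;
  final bool deleted;
}

/// Local rows plus the timestamp of the newest change applied so far.
class LocalCache {
  final Map<String, Map<String, Object?>> rows = {};
  DateTime cursor = DateTime.fromMillisecondsSinceEpoch(0, isUtc: true);

  /// Applies changes newer than [cursor], then advances the cursor.
  /// Changes at or before the cursor are skipped, so replays are harmless.
  void applyDelta(Iterable<Change> changes) {
    var newest = cursor;
    for (final change in changes) {
      if (!change.updatedAt.isAfter(cursor)) continue;
      if (change.deleted) {
        rows.remove(change.id);
      } else {
        rows[change.id] = change.data;
      }
      if (change.updatedAt.isAfter(newest)) newest = change.updatedAt;
    }
    cursor = newest;
  }
}
```

The client would send `cursor` with each sync request so the server returns only what changed since then. An integration test can replay the same batch twice to confirm that the second pass is a no-op.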