AI Fraud Detection in Fintech Apps: ROI, Risk Reduction & Compliance Gains

Can AI really solve fintech’s fraud problem, or are we just automating our way into more expensive mistakes? The answer lies in the gap between PayPal’s 0.17% fraud rate with AI and Block Inc.’s $80 million fine.

Key Takeaways

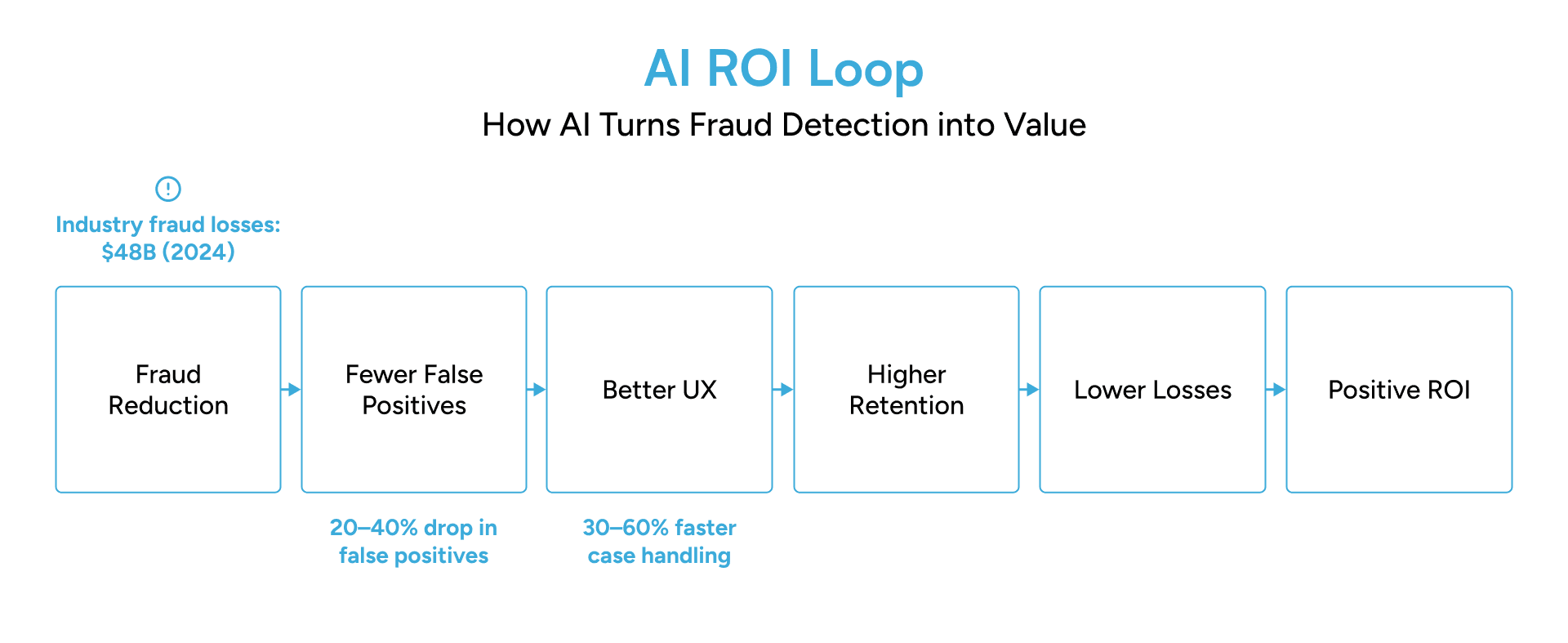

- AI can reduce fraud losses by up to 50% through real-time detection, and companies such as PayPal and Mastercard demonstrate measurable ROI with adaptive AI.

- AI fraud detection cuts false positives by 30–40% and dispute costs by 25–50%.

- Streaming ML and graph AI enable millisecond-level detection, while XAI dashboards and Model Cards make AI audit-ready for AML/KYC.

- A 6–10 week pilot delivers measurable savings before a full rollout of AI fraud detection in fintech apps.

Why Fintech Companies Are Using AI Fraud Detection

Saurabh Sahu

CTO, GeekyAnts

AI has become the core of proactive risk management, enabling fintechs to protect users, stay compliant, and maintain trust without slowing transactions.

ROI of AI Fraud Detection: How Much Can You Save, and How Much More Can You Earn?

1. Fewer False Positives → Lower Operational Cost

2. Fewer False Negatives → Reduced Fraud Losses

3. Faster Case Handling → Greater Efficiency

4. Fewer Dispute Losses → Direct Savings

5. Higher Customer Trust → Long-Term Retention

6. Lower Compliance Cost → Audit-Ready Confidence

The ROI Equation, Simplified
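As a rough illustration, the six drivers listed above can be folded into a single annual ROI ratio: (total benefit minus total cost) divided by total cost. The sketch below assumes every figure is an annual amount in one currency, maps drivers 1 and 3 (fewer false positives, faster case handling) to a single review-cost saving, and amortizes one-time pilot and integration costs over a hypothetical three-year horizon. All field names and numbers are illustrative, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class FraudProgramEstimate:
    """Hypothetical annual figures, all in the same currency."""
    review_cost_saved: float        # drivers 1 and 3: fewer false positives, faster cases
    fraud_losses_avoided: float     # driver 2: fewer false negatives
    dispute_losses_avoided: float   # driver 4: fewer chargebacks and dispute fees
    retained_revenue: float         # driver 5: customers kept through higher trust
    compliance_cost_saved: float    # driver 6: less manual audit preparation
    annual_run_cost: float          # infrastructure, licences, MLOps staff
    one_time_cost: float            # pilot, integration, data work


def simple_roi(e: FraudProgramEstimate, amortization_years: int = 3) -> float:
    """Return ROI as a ratio: (total benefit - total cost) / total cost."""
    benefit = (
        e.review_cost_saved
        + e.fraud_losses_avoided
        + e.dispute_losses_avoided
        + e.retained_revenue
        + e.compliance_cost_saved
    )
    # Spread the one-time build cost evenly across the amortization window.
    cost = e.annual_run_cost + e.one_time_cost / amortization_years
    return (benefit - cost) / cost


example = FraudProgramEstimate(
    review_cost_saved=150_000,
    fraud_losses_avoided=400_000,
    dispute_losses_avoided=50_000,
    retained_revenue=70_000,
    compliance_cost_saved=30_000,
    annual_run_cost=200_000,
    one_time_cost=300_000,
)
print(f"ROI: {simple_roi(example):.0%}")  # → ROI: 133%
```

Shortening the amortization window raises the annual cost and lowers the ratio, which is why pilot-stage ROI is usually reported alongside the assumed horizon.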

Loss Prevention to Value Creation

Risk Reduction Through AI

Legacy rules and human review cannot keep up with multi-channel attacks and cross-product fraud rings, because modern fraud is relational and fast. The answer is smarter systems: streaming ML for sub-second action, graph-based models to expose hidden networks, and explainability built into every decision so that teams and regulators can trust the results.

Saurabh Sahu

CTO, GeekyAnts
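To make "streaming ML for sub-second action" concrete, here is a minimal sketch of one building block: a per-account sliding-window velocity feature that is updated as each transaction arrives, so a score is available immediately instead of after a nightly batch. It assumes events for an account arrive in timestamp order; the 60-second window, the saturation thresholds, and the hand-weighted score are hypothetical stand-ins for a trained model.

```python
from collections import defaultdict, deque


class VelocityScorer:
    """Keeps a sliding time window of recent transactions per account."""

    def __init__(self, window_seconds: float = 60.0):
        self.window = window_seconds
        # account_id -> deque of (timestamp, amount), oldest first
        self.events = defaultdict(deque)
        self.totals = defaultdict(float)

    def update(self, account_id: str, ts: float, amount: float) -> tuple[int, float]:
        """Add one event and return (count, total amount) inside the window."""
        q = self.events[account_id]
        q.append((ts, amount))
        self.totals[account_id] += amount
        # Evict events that have fallen out of the window (assumes in-order timestamps).
        while q and q[0][0] <= ts - self.window:
            _, old_amount = q.popleft()
            self.totals[account_id] -= old_amount
        return len(q), self.totals[account_id]

    def score(self, account_id: str, ts: float, amount: float) -> float:
        """Toy risk score in [0, 1]; a production system would call a trained model here."""
        count, total = self.update(account_id, ts, amount)
        count_risk = min(count / 10, 1.0)       # hypothetical: 10 txns per window saturates
        amount_risk = min(total / 5_000, 1.0)   # hypothetical: 5,000 per window saturates
        return 0.5 * count_risk + 0.5 * amount_risk


scorer = VelocityScorer(window_seconds=60)
for i in range(5):
    s = scorer.score("acct-1", ts=float(i), amount=100.0)
print(round(s, 2))  # → 0.3
```

Each event costs amortized constant time, because every transaction is appended once and evicted at most once; that property is what keeps per-event latency flat as volume grows.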

- Why real-time matters

- Graph intelligence: finding what rules miss

- Explainability = auditability

- Practical architecture (what teams actually deploy)

- Operational and compliance gains

Switching from manual or rule-only systems to an AI-first approach typically yields shorter manual review queues, fewer false positives, faster investigations, and clear audit trails. Multiple 2025 field reports and academic studies document measurable reductions in fraud losses and OPEX after AI adoption.

- Caveats & readiness checklist

AI Fraud Detection vs Alternatives

As digital transactions scale, enterprises face a critical decision: should they rely on manual reviews, rule-based systems, or AI-powered detection? Each approach carries distinct trade-offs in speed, accuracy, cost, and audit readiness.

| Dimension / Metric | Manual Reviews | Legacy Rule-Based Systems | AI-Powered Detection |
|---|---|---|---|
| Complex Pattern Detection | Detects only what reviewers already know; struggles with multi-layer, multi-channel fraud (e.g., synthetic identities, mule rings). Often reactive. | Detects known, predefined patterns; frequent blind spots for new tactics; rule sets grow sprawling and brittle. | Learns from data and can detect previously unseen fraud patterns. Graph ML links entities to expose synthetic IDs and coordinated rings. |
| Latency and Real-Time Decisioning | High latency (minutes to hours, depending on workload); real-time decisioning is not feasible. | Fast for simple rules (e.g., flag transactions above $X), but evaluation slows as rule volume grows, sometimes forcing batch processing. Limited ability to keep latency low as volume scales. | Built for streaming: millisecond-level inference sustained at high TPS. |
| False Positives and Negatives | Reviewers may over-flag; fatigue causes misses and inconsistency; recall is low for subtle fraud rings. | Tends toward high false positives (broad rules) or high false negatives (narrow rules); thresholds and rules must be maintained manually. | Adaptive thresholds, probabilistic scoring, and continuous retraining; graph ML cuts false negatives in networked fraud. |
| Scalability (Data, Volume, Channels, Products) | Scaling means hiring more reviewers; steep human cost; expanding quickly to new channels is often impractical. | Software scales, but rule maintenance cost grows superlinearly; hard to adapt to new data types. | Expands horizontally across data types, channels, and regions; cloud-native models scale automatically with volume. |
| Operational Overhead and Maintenance | Very high: hiring, training, QA of human work, plus consistency and subjectivity issues. | Medium: creating, testing, and deploying rules; false-positive tuning; rule conflict resolution; versioning. | High initial setup; ongoing automation reduces manual review and maintenance. |
| Audit and Regulatory Compliance | Human notes are often inconsistent; little feature traceability; audit quality depends on what was documented. | Rules are explicit, so it is easy to explain why a rule fired, but compound rules become opaque and interactions between multiple rules are hard to trace. | Built-in traceability through model versioning, feature importances, and decision lineage; generates regulator-ready audit evidence. |
| ROI Potential | Modest, incremental improvements; high cost per unit of fraud prevented. | Good for known risk types; diminishing returns as attackers adapt; rule maintenance is often costly without new gains. | Reduces undetected fraud, manual workload, and disputes; high ROI through automation and early pattern detection. |
| Time to Deployment | Slow: hiring and training staff and writing policies and procedures; scaling takes months. | Fast for simple rules, but complex rule sets take time, especially when integrating new data sources or embedding rules in real-time pipelines. | A 6–10 week pilot is possible; once live, retraining and iteration are near-continuous. |
| Resilience to Fraud | Low: once fraudsters learn the process or reviewers' habits, they can evade detection. | Moderate: rules are tweaked after attacks, but attackers find gaps and rule updates lag behind. | Learns attacker behavior; ensemble and anomaly models detect unseen tactics and hidden links. |
| Data Requirements and Feature Richness | Relies on whatever data reviewers see: often only transaction logs, simple flags, and little feature engineering. | Uses easy-to-define features (transaction size, time, geography); scaling feature richness is hard, with limited support for relational or derived features. | Uses structured and behavioral data, embeddings, and feedback loops for richer signal extraction. |
| Cost Predictability | Ongoing high human cost; unpredictable scaling costs. | Licensing and rule-maintenance costs are more predictable but can balloon as the rule base grows; hidden costs from false positives and customer churn. | Higher upfront cost; lower marginal cost per transaction; predictable at scale. |
| Integration with Broader Risk & Compliance Ecosystem | Often siloed; disconnected from KYC/AML risk scoring, regulatory reporting, and case management. | Moderate: rule outputs may feed reporting, but integration with graph network analysis, policy enforcement, and audit-trail tooling is limited. | Connects natively with AML/KYC, case management, and reporting systems for unified compliance. |
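The "adaptive thresholds, probabilistic scoring" entry in the table can be illustrated with a cost-based cutoff: given model scores and confirmed labels from a validation set, choose the threshold that minimizes total cost, where each false positive costs an analyst review and each false negative costs the fraud loss. The per-error costs and the sample data below are hypothetical; re-running the search whenever costs or the fraud mix change is one simple form of threshold adaptation.

```python
def expected_cost(scores, labels, threshold, fp_cost, fn_cost):
    """Total cost of flagging every transaction with score >= threshold."""
    cost = 0.0
    for s, is_fraud in zip(scores, labels):
        flagged = s >= threshold
        if flagged and not is_fraud:
            cost += fp_cost   # legitimate customer sent to manual review
        elif not flagged and is_fraud:
            cost += fn_cost   # fraud slips through
    return cost


def best_threshold(scores, labels, fp_cost, fn_cost):
    """Try each observed score, plus 'flag nothing' (inf), as a candidate cutoff."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates,
               key=lambda t: expected_cost(scores, labels, t, fp_cost, fn_cost))


# Hypothetical validation data: model scores and ground-truth fraud labels.
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.70, 0.90]
labels = [False, False, False, False, True, False, True, True]

print(best_threshold(scores, labels, fp_cost=15.0, fn_cost=400.0))  # → 0.4
```

With cheap reviews the optimal cutoff sits low enough to catch every fraud case; if a false positive became more expensive than a missed fraud (for example `fp_cost=500.0`), the same search moves the cutoff up to 0.7.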

How AI Fraud Detection Strengthens Compliance Across Financial Regulations

Saurabh Sahu

CTO, GeekyAnts

AI is helping fintechs stay compliant in a landscape where every transaction, identity, and dataset is under scrutiny. From anti–money laundering to data privacy and audit readiness, AI makes compliance faster, more reliable, and more transparent.

1. AML (Anti–Money Laundering)

- Pattern recognition: Detects unusual fund flows, structuring, or layering behaviors across accounts and entities.

- Entity linking: ML models map customer relationships across devices, geographies, and transactions — surfacing hidden networks of suspicious activity.

- Reduced false positives: Smarter anomaly detection lowers noise, ensuring investigators focus on genuine risks.

- Real-time monitoring: AI systems flag high-risk transfers instantly, supporting timely Suspicious Activity Report (SAR) filings.
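Entity linking is often framed as a graph problem: accounts are nodes, and shared attributes such as a device fingerprint, phone number, or payout card connect them. The sketch below uses a union-find structure to group accounts into connected components and surfaces clusters above a size cutoff for investigation. It is a deliberately simple stand-in for the graph ML described above, not a trained model; the attribute strings and the cutoff of three accounts are hypothetical.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path halving."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def suspicious_clusters(records, min_size=3):
    """records: iterable of (account_id, attribute_value) pairs, e.g. device IDs."""
    uf = UnionFind()
    first_owner = {}  # attribute value -> first account seen with it
    for account, attr in records:
        uf.find(account)  # register the account even if it shares nothing
        if attr in first_owner:
            uf.union(first_owner[attr], account)
        else:
            first_owner[attr] = account
    groups = defaultdict(set)
    for account in list(uf.parent):
        groups[uf.find(account)].add(account)
    return [g for g in groups.values() if len(g) >= min_size]


# acct_A and acct_B share a device; acct_B and acct_C share a phone number.
records = [
    ("acct_A", "device:111"), ("acct_B", "device:111"),
    ("acct_B", "phone:+15550100"), ("acct_C", "phone:+15550100"),
    ("acct_D", "device:999"),
]
print(suspicious_clusters(records))
```

acct_A and acct_C never share an attribute directly, yet they land in the same cluster through acct_B; this transitive link is exactly what per-transaction rules tend to miss.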

2. KYC (Know Your Customer)

- Automated ID verification: AI compares IDs, selfies, and biometric data for instant validation.

- Document fraud detection: Deep learning detects forgeries, tampering, or reused IDs.

- Continuous KYC: AI triggers re-verification based on behavioral anomalies, not just at onboarding.
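A behavior-triggered re-verification rule can start by comparing a customer's current activity with their own history. The sketch below flags a customer when one behavioral metric (here, hypothetically, total outbound transfers per day) deviates from their baseline by more than a z-score cutoff, and halves that cutoff when the activity comes from a new device. The metric, the cutoff of 3, and the function name are assumptions for illustration; a production system would combine many signals in a learned model.

```python
from statistics import mean, stdev


def needs_reverification(history, today, new_device=False, z_cutoff=3.0):
    """
    history: past daily values of one behavioral metric for a customer
             (hypothetical: total outbound transfers per day).
    today:   today's value of the same metric.
    Returns True when today's behavior deviates sharply from the baseline.
    """
    if len(history) < 2:
        return new_device  # too little history for a baseline; rely on the device signal
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        z = 0.0 if today == mu else float("inf")
    else:
        z = (today - mu) / sigma
    # A new device lowers the bar: a moderate deviation is then enough.
    cutoff = z_cutoff / 2 if new_device else z_cutoff
    return abs(z) > cutoff


history = [100, 120, 90, 110, 105, 95, 115]
print(needs_reverification(history, 125))                   # → False
print(needs_reverification(history, 125, new_device=True))  # → True
```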

3. PCI-DSS (Payment Card Industry Data Security Standard)

- AI threat detection: Monitors payment environments for unusual access or data-exfiltration patterns.

- Anomaly-based intrusion detection: ML models identify deviations in network traffic or endpoint behavior.

- Predictive compliance: AI identifies systems drifting from compliance posture (e.g., outdated encryption).
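The drift signal behind "predictive compliance" can be as plain as scanning a system inventory for weak configuration. The sketch below is a rule-based check, not a learned model: it flags hosts in a hypothetical inventory whose lowest accepted TLS version falls below a policy floor, plus hosts that report no version at all. The inventory keys and the TLS 1.2 floor are assumed internal-policy values; an AI layer would sit on top of checks like this to predict and prioritize drift.

```python
# Ordered from weakest to strongest so versions can be compared by position.
TLS_ORDER = ["SSLv3", "TLSv1.0", "TLSv1.1", "TLSv1.2", "TLSv1.3"]


def drifted_hosts(inventory, floor="TLSv1.2"):
    """
    inventory: list of dicts with hypothetical keys 'host' and 'min_tls'.
    Returns hosts whose lowest accepted TLS version is below the policy floor,
    plus hosts reporting an unknown version (treated as non-compliant).
    """
    floor_idx = TLS_ORDER.index(floor)
    flagged = []
    for item in inventory:
        version = item.get("min_tls")
        if version not in TLS_ORDER or TLS_ORDER.index(version) < floor_idx:
            flagged.append(item["host"])
    return flagged


inventory = [
    {"host": "payments-api-1", "min_tls": "TLSv1.2"},
    {"host": "legacy-batch-7", "min_tls": "TLSv1.0"},
    {"host": "card-vault-2", "min_tls": "TLSv1.3"},
    {"host": "reporting-3"},  # version not reported
]
print(drifted_hosts(inventory))  # → ['legacy-batch-7', 'reporting-3']
```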

4. GDPR (General Data Protection Regulation – EU)

- Automated data mapping: AI identifies personal data across systems to ensure lawful processing.

- Anonymization & minimization: AI automatically redacts or pseudonymizes sensitive info before model training.

- Breach detection: AI tools monitor unusual data access and help prevent privacy violations.
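Pseudonymization before model training is commonly done with a keyed hash: the same customer ID always maps to the same token, so a model can still learn per-customer patterns, but the raw identifier cannot be recomputed without the secret key, which is stored separately. Under GDPR, pseudonymized data still counts as personal data, so this reduces exposure rather than removing obligations. The sketch uses Python's standard `hmac` module; the schema and field names are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed token for an identifier (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_row(row: dict, key: bytes) -> dict:
    """Tokenize direct identifiers and drop fields the model does not need."""
    direct_identifiers = {"customer_id", "email"}   # hypothetical schema
    drop = {"full_name", "street_address"}          # data minimization
    out = {}
    for field, value in row.items():
        if field in drop:
            continue
        out[field] = pseudonymize(str(value), key) if field in direct_identifiers else value
    return out


# In production the key would come from a secrets manager, never from source code.
key = b"example-key-for-illustration-only"
row = {"customer_id": "C-1042", "email": "a@example.com", "full_name": "Ada L.",
       "street_address": "1 Main St", "amount": 250.0, "country": "GB"}
print(prepare_training_row(row, key))
```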

5. OCC / FINRA (US Office of the Comptroller of the Currency / Financial Industry Regulatory Authority)

| Regulatory Area | How AI Tools Enable Audit Readiness | Example Tools / Layers | Compliance through Audit-Ready AI Systems |
|---|---|---|---|
| Model Governance (OCC, FINRA) | Every model is logged with version history, input sources, performance metrics, and bias checks. | Model Cards (summaries of how, when, and why a model was built) | Regulators can audit every AI decision and its evolution; there is no black box. |
| Explainability & Decision Transparency (GDPR, OCC) | AI generates human-readable rationales for automated decisions. | Explainability reports using SHAP, LIME, or IBM Watson OpenScale | Compliance officers can trace why a transaction was flagged, helping meet the GDPR "right to explanation" and OCC interpretability standards. |
| Data Protection & Privacy (GDPR, PCI-DSS) | Sensitive data is tracked, anonymized, and monitored continuously. | Cisco Secure AI Infrastructure + Zia Dashboards | AI systems run on trusted, encrypted networks with visual oversight of every data flow, reducing breach and audit risk. |
| AML / KYC Monitoring | Real-time anomaly detection linked to explainable alert workflows. | Zia Dashboards / AI Ops layers | Every AML alert is timestamped, explainable, and traceable from detection to resolution, ensuring full SAR documentation. |
| Operational Risk Management (OCC / Basel III) | AI monitors model drift, data lineage, and compliance gaps. | AI Ops & Compliance Dashboards | Compliance teams see emerging risks in real time instead of discovering them after an audit. |
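What an audit-ready decision record contains varies by institution, but the idea in the table can be sketched: each scored transaction is logged with the model version, a UTC timestamp, per-feature contributions, reason codes, and the resulting action. For a logistic-regression model, each feature's contribution to the log-odds, relative to an all-zero input, is simply weight × value, which gives exact per-decision reason codes; tools such as SHAP produce comparable attributions for non-linear models. The weights, feature names, version tag, and record layout below are hypothetical.

```python
import json
import math
from datetime import datetime, timezone

# Hypothetical logistic-regression model; features assumed pre-scaled.
MODEL_VERSION = "fraud-lr-2025.01"   # illustrative version tag
BIAS = -4.0
WEIGHTS = {"amount_zscore": 1.2, "new_device": 1.5,
           "txn_count_1h": 0.4, "account_age_years": -0.8}


def score_and_log(txn_id: str, features: dict, threshold: float = 0.5) -> dict:
    # Per-feature contribution to the log-odds, relative to an all-zero input.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    # Reason codes: up to three features that pushed the score up the most.
    reasons = sorted((n for n, c in contributions.items() if c > 0),
                     key=lambda n: contributions[n], reverse=True)[:3]
    return {
        "txn_id": txn_id,
        "model_version": MODEL_VERSION,
        "scored_at": datetime.now(timezone.utc).isoformat(),
        "probability": round(probability, 4),
        "decision": "review" if probability >= threshold else "approve",
        "reason_codes": reasons,
        "contributions": {n: round(c, 4) for n, c in contributions.items()},
    }


record = score_and_log("T-881", {"amount_zscore": 2.5, "new_device": 1,
                                  "txn_count_1h": 3, "account_age_years": 0.2})
print(json.dumps(record, indent=2))
```

Writing these records to append-only storage, keyed by transaction ID, is what lets an auditor trace a flag back to the exact model version and inputs that produced it.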

How Fintechs Can Implement AI in Fraud Detection

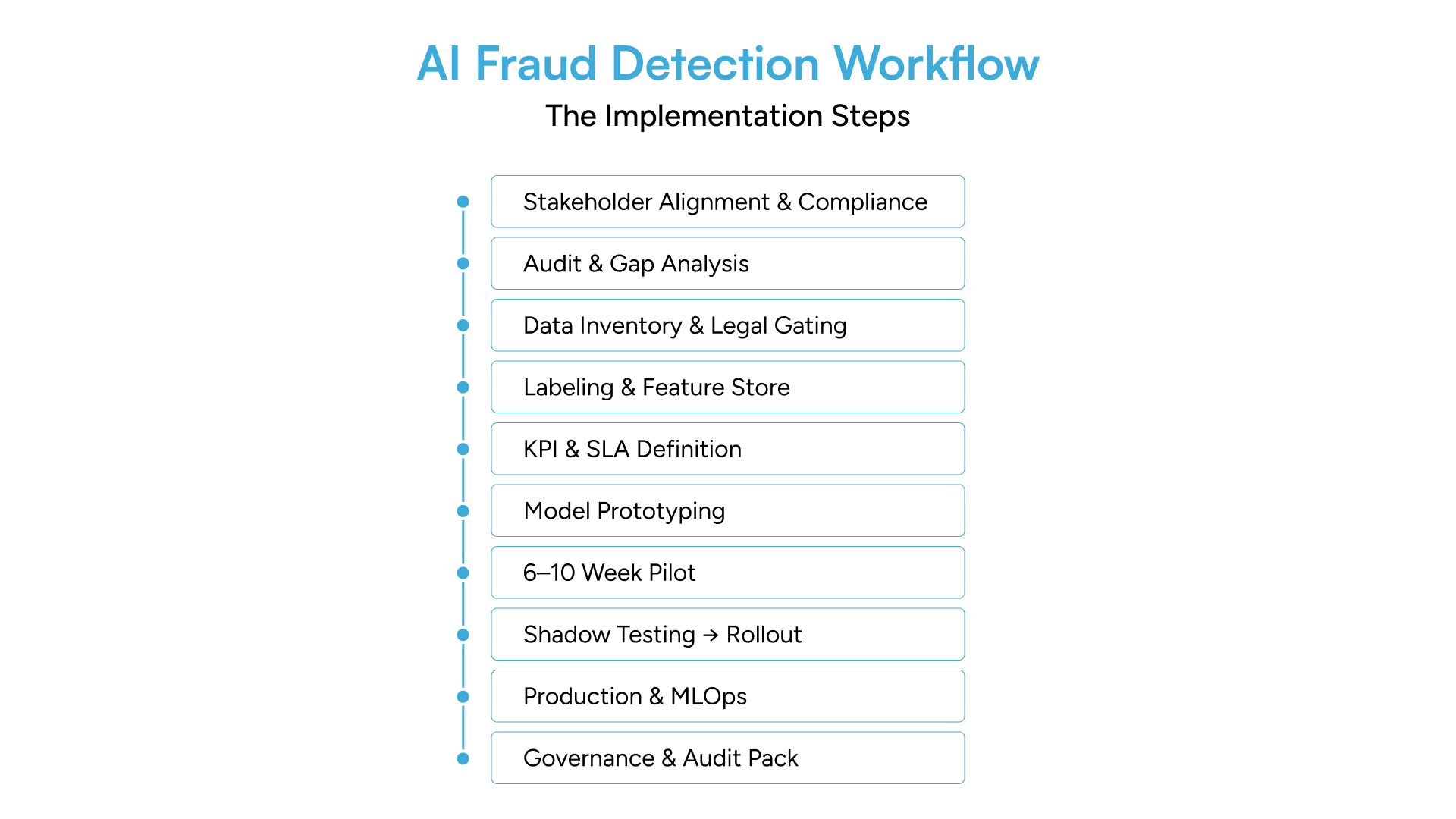

Building production-grade AI fraud detection is a carefully orchestrated journey through compliance and operational validation. Many fintech companies fail at it because they skip critical steps around stakeholder alignment, audit readiness, and proper validation.

The steps below walk through the complete process, with one critical recommendation: start with a 6–10 week pilot before scaling to full production. The pilot is your insurance policy against costly failures and the foundation for demonstrating ROI to leadership.
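Demonstrating ROI from a pilot starts with agreeing on how the headline KPIs are computed. Below is a minimal sketch, assuming the pilot yields one (flagged, confirmed_fraud) outcome per transaction; it derives precision (share of flags that were real fraud), recall (share of fraud that was caught), and false-positive rate (share of legitimate transactions that were flagged). The input format, function name, and sample counts are hypothetical.

```python
def pilot_kpis(outcomes):
    """
    outcomes: iterable of (flagged: bool, is_fraud: bool) pairs from the pilot.
    Returns precision, recall, and false-positive rate as fractions.
    """
    tp = fp = fn = tn = 0
    for flagged, is_fraud in outcomes:
        if flagged and is_fraud:
            tp += 1
        elif flagged:
            fp += 1
        elif is_fraud:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "false_positive_rate": fpr}


# Hypothetical pilot sample: 8 fraud cases, 92 legitimate transactions.
outcomes = ([(True, True)] * 6 + [(False, True)] * 2
            + [(True, False)] * 4 + [(False, False)] * 88)
print(pilot_kpis(outcomes))
```

Computing the same three numbers for the incumbent rules on the same transactions gives the before-and-after comparison that leadership usually asks for.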

Step 1: Align Stakeholders & Get Compliance Sign-Off (Week 0)

Step 2: Audit Current Fraud Detection (Weeks 1–3)

Step 3: Data Inventory & Privacy Gating (Weeks 2–6)

Step 4: Clean, Label & Build Feature Store (Weeks 4–8)

Step 5: Define KPIs & SLAs (Concurrent)

Step 6: Build Baseline Rules & Prototype Models (Weeks 2–6)

Step 7: Run a 6–10 Week Pilot

Step 8: Shadow Testing → Staged Rollout → A/B Testing (Weeks 4–12)

Step 9: Production Deployment & MLOps (Ongoing)

Step 10: Audit Pack, Governance & Partner Support
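Step 8's shadow-then-staged pattern can be sketched as routing logic. In shadow mode the candidate model scores every transaction but only the live system's decision is acted on, and both decisions are logged for comparison. In a staged rollout a stable, hash-assigned slice of traffic switches to the candidate, which also provides consistent A/B groups. The decision functions, the log format, and the 0–100 percentage scale are hypothetical placeholders.

```python
import hashlib


def in_rollout(txn_id: str, percent: int) -> bool:
    """Deterministically assign a stable share of traffic to the candidate."""
    bucket = int(hashlib.sha256(txn_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def decide(txn, live_model, candidate_model, rollout_percent=0, shadow_log=None):
    """
    Shadow mode (rollout_percent=0): the candidate scores every transaction,
    but only the live decision is returned.
    Staged rollout (rollout_percent>0): a stable slice of traffic uses the candidate.
    """
    live = live_model(txn)
    try:
        candidate = candidate_model(txn)
    except Exception:
        candidate = None  # a failing candidate must never block the live path
    if shadow_log is not None:
        shadow_log.append({"txn_id": txn["id"], "live": live, "candidate": candidate})
    if candidate is not None and in_rollout(txn["id"], rollout_percent):
        return candidate
    return live


# Hypothetical stand-ins for the current rules and the new model.
def live_rules(txn):
    return "review" if txn["amount"] > 1_000 else "approve"


def candidate_model(txn):
    return "review" if txn["amount"] > 1_000 or txn["new_device"] else "approve"


log = []
txns = [{"id": f"T{i}", "amount": a, "new_device": d}
        for i, (a, d) in enumerate([(50, False), (2_000, False), (80, True)])]
decisions = [decide(t, live_rules, candidate_model, shadow_log=log) for t in txns]
disagreements = [entry for entry in log if entry["live"] != entry["candidate"]]
print(decisions, len(disagreements))
```

Reviewing the disagreement log (here, the new-device transaction T2) is how teams decide whether the candidate's extra flags are catches or noise before any traffic is shifted.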

When and Why to Bring in a Partner for AI Fraud Detection

Why Choose GeekyAnts for AI Fraud Detection in Fintech Apps?

Saurabh Sahu

CTO, GeekyAnts

At GeekyAnts, we bring together AI innovation and fintech expertise, helping financial products transition from reactive fraud control to real-time, audit-ready risk intelligence.

- Enterprise-grade VO4 BPA Stack: A unified framework combining fraud ML, graph-based detection, XAI, and case management tooling, designed for end-to-end visibility and regulator-friendly traceability.

- 6–10 Week Pilot-to-Production Model: From data handshake to measurable ROI, our engagements are engineered for speed. We deliver working pilots in weeks — not quarters — showing real impact on false positives, detection accuracy, and manual review effort.

- Audit-First Engineering: Every decision, feature, and model version is traceable. Our pipelines generate verifiable audit logs that satisfy compliance reviews and internal risk governance with ease.

- Proven Results with PayPenny: We helped PayPenny implement a streaming fraud detection layer that monitors cross-border money transfers in real time, integrates with their existing KYC stack, and achieves sub-second anomaly detection — all while maintaining full audit readiness.

- Scalable & Compliant by Design: Whether it is AML screening, account takeover prevention, or transaction monitoring, our AI systems scale seamlessly across data sources, products, and jurisdictions.

- Fintech DNA: With deep experience across digital banking, lending, and payments, we design fraud solutions that understand both financial logic and regulatory nuance.