99% Reduction in manual effort | Pillar Engine

Project Type

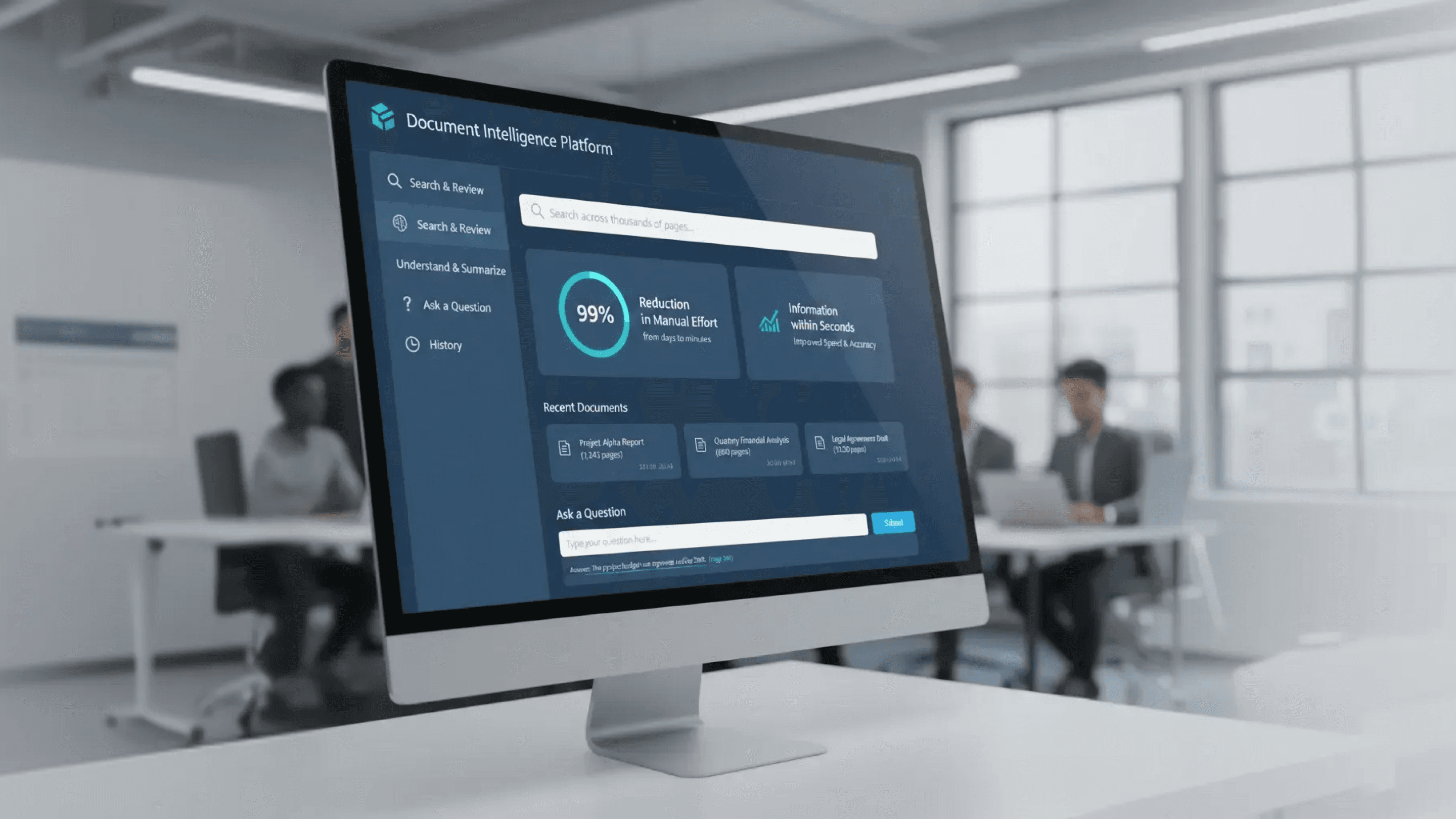

Document Intelligence & Automated Insight Generation Platform


ABOUT THE CLIENT

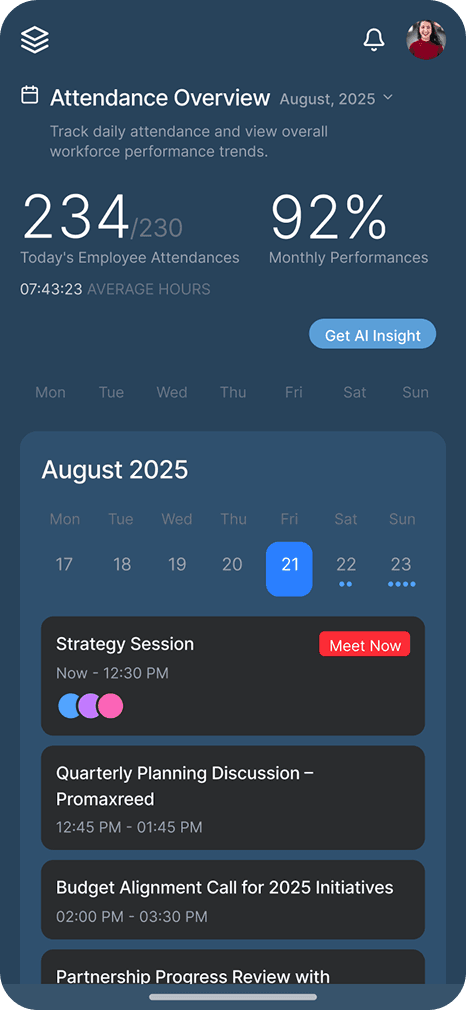

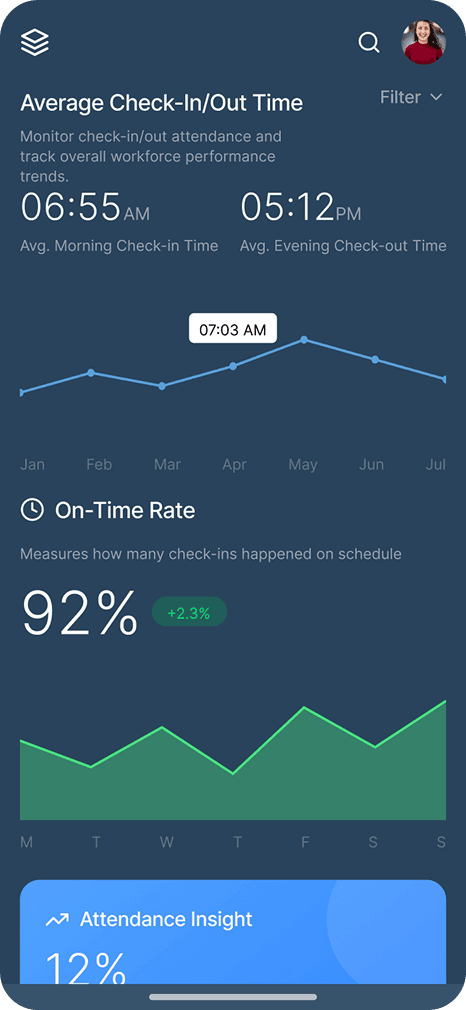

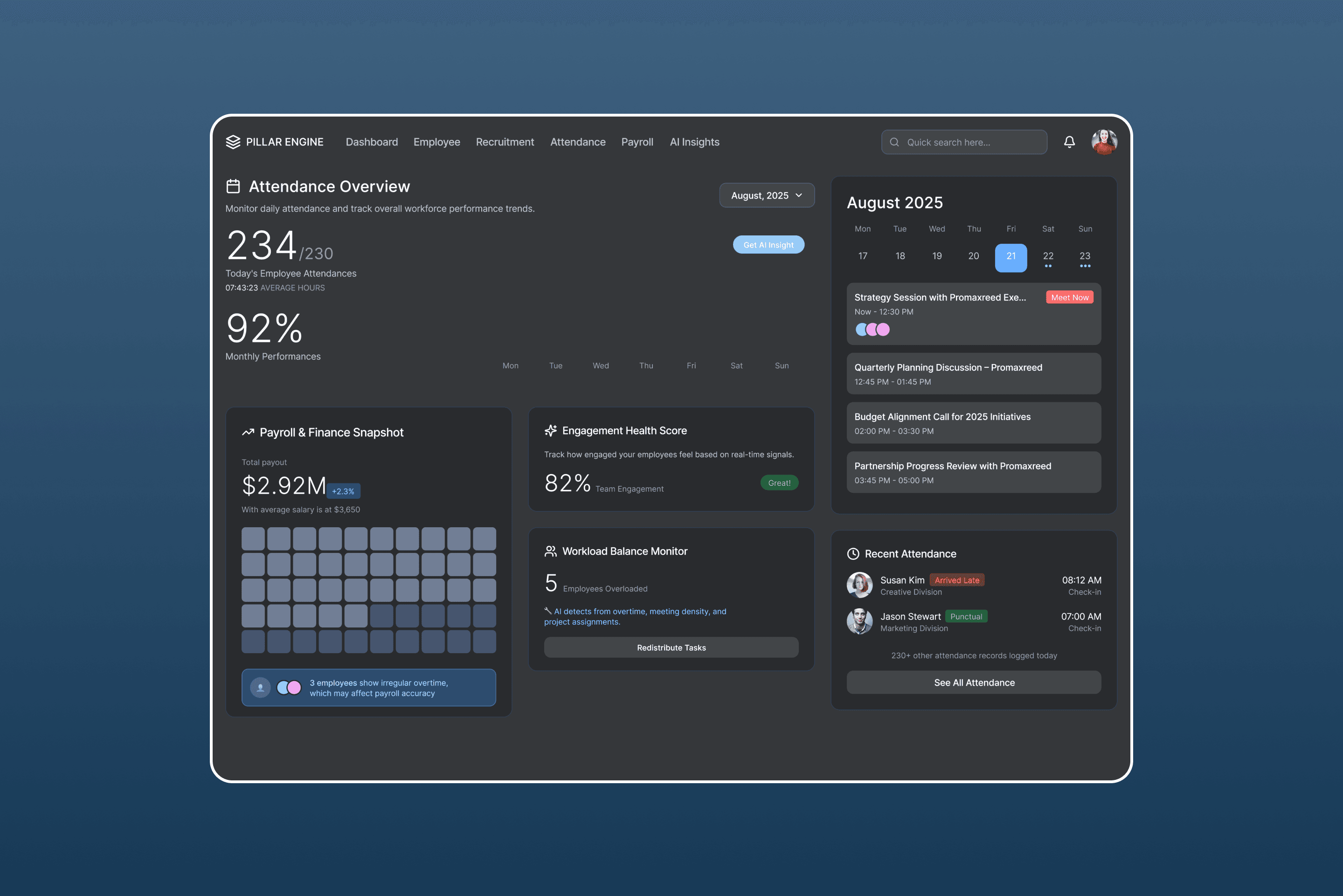

Pillar Engine is a Document Intelligence Platform that transforms how teams interact with large-scale data. By allowing users to search, understand, and review extremely large documents, the platform reduces manual effort from days to minutes. It provides direct answers to user questions and pinpoints the exact page where information exists, ensuring accuracy and reliability across thousands of pages of information.

*All names and logos have been changed to respect the NDA.

OVERVIEW

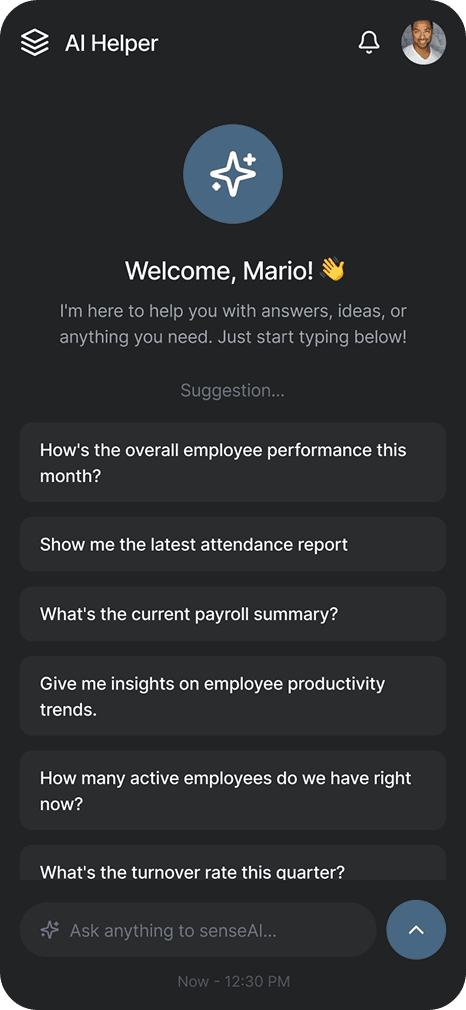

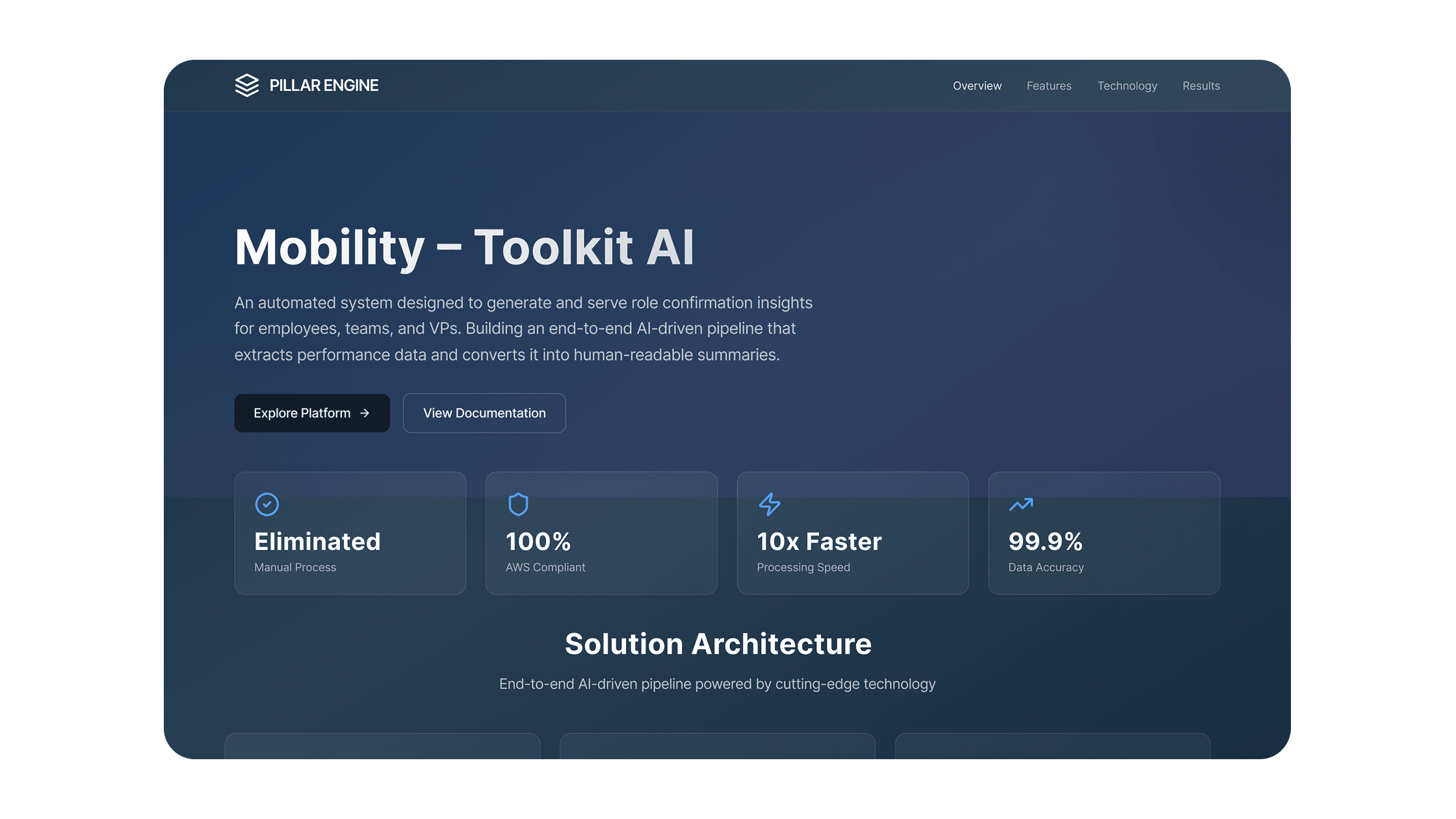

GeekyAnts partnered with Pillar Engine to develop the "Mobility – Toolkit AI," an automated system designed to generate and serve role confirmation insights for employees, teams, and VPs. The project involved building an end-to-end AI-driven pipeline that extracts performance data and converts it into human-readable summaries.

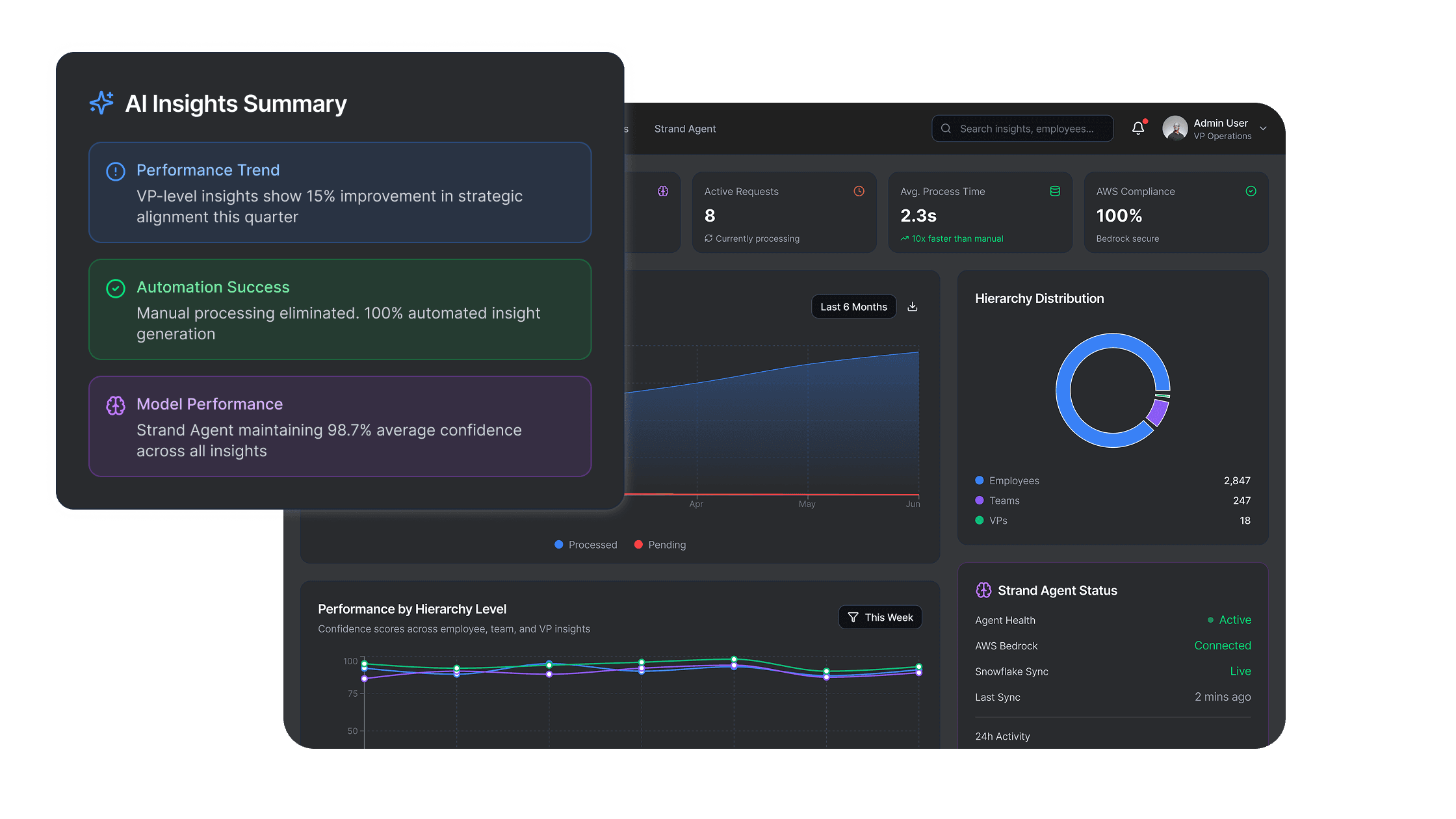

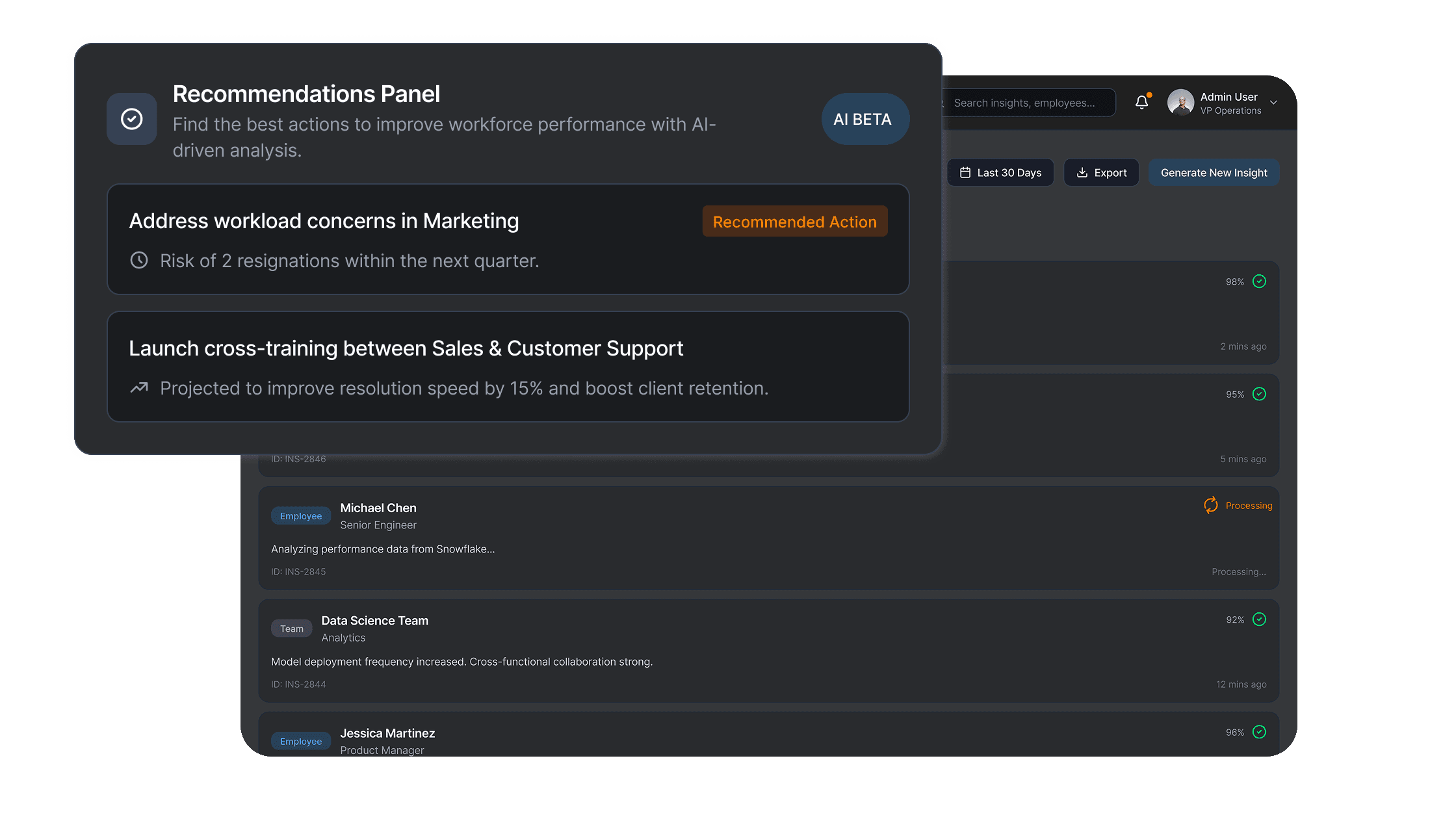

The primary challenge was automating the creation of role confirmation insights from Snowflake data across different hierarchy levels while maintaining strict AWS compliance and scalability. By implementing a custom "Strand Agent" and leveraging AWS Bedrock, the solution successfully replaced a manual, inconsistent process with a secure, automated workflow. This transition has dramatically improved speed, consistency, and decision-making for VP operations within the internal MyBOS platform.

- 99% reduction in manual effort

- To process 10K pages

- Accuracy in responses

BUSINESS REQUIREMENT

The client’s vision was to eliminate the manual creation of text summaries from raw data, which previously led to delays and inconsistencies across business units. The goal was to establish a consistent, insight-driven process for role confirmations on the internal MyBOS platform.

The client needed a scalable solution that could:

- Automate the creation of role confirmation insights from Snowflake data.

- Handle insights for different hierarchy levels, business units, and scopes.

- Generate contextual, narrative text using LLMs within a compliant AWS environment.
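To make "different hierarchy levels, business units, and scopes" concrete, here is a minimal Python sketch of rolling employee-level rows up into team-, VP-, and business-unit-level scopes before any text is generated. The `Record` fields (including the `confirmed` flag) and the counts produced are hypothetical; the case study does not describe the Snowflake schema or which metrics feed each insight.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical row shape; the real Snowflake schema is not described.
    employee_id: str
    team: str
    vp: str
    business_unit: str
    confirmed: bool  # hypothetical role-confirmation flag


def rollup(records: list[Record]) -> dict[tuple[str, str], dict[str, int]]:
    """Aggregate employee-level rows into team, VP, and business-unit scopes.

    Keys are (level, scope_id); values are simple counts that a later
    step could turn into narrative text.
    """
    totals: dict[tuple[str, str], dict[str, int]] = defaultdict(
        lambda: {"total": 0, "confirmed": 0}
    )
    for r in records:
        # Each row contributes once to every hierarchy level it belongs to.
        for key in (("team", r.team), ("vp", r.vp), ("business_unit", r.business_unit)):
            totals[key]["total"] += 1
            totals[key]["confirmed"] += int(r.confirmed)
    return dict(totals)


if __name__ == "__main__":
    rows = [
        Record("e1", "t1", "vp1", "bu1", True),
        Record("e2", "t1", "vp1", "bu1", False),
        Record("e3", "t2", "vp1", "bu2", True),
    ]
    for key, counts in sorted(rollup(rows).items()):
        print(key, counts)
```

Keying each aggregate by `(level, scope_id)` lets a single pass over the data feed every hierarchy level, so a VP-level insight and a team-level insight are computed from the same rows.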

Key Requirements

- Creating a fully automated AI-driven insight generation pipeline.

- Delivering weekly refreshed insights across organizational hierarchies.

- Ensuring data-backed summaries using secure, AWS-native components.
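One way to keep weekly refreshes consistent is to derive the storage key from the ISO week of each run. The Python sketch below assumes a hypothetical `(scope_id, week)` key and item layout; the case study does not describe how the DynamoDB table is keyed.

```python
from datetime import date


def week_key(d: date) -> str:
    """ISO-8601 week label such as '2024-W01' (hypothetical key format)."""
    iso_year, iso_week, _ = d.isocalendar()
    return f"{iso_year}-W{iso_week:02d}"


def build_items(insights: dict[str, str], run_date: date) -> list[dict[str, str]]:
    """Shape one weekly run's summaries as items keyed by (scope_id, week).

    The key depends only on the scope and the ISO week of the run, so every
    run within the same week produces the same keys.
    """
    week = week_key(run_date)
    return [
        {"scope_id": scope_id, "week": week, "summary": text}
        for scope_id, text in sorted(insights.items())
    ]


if __name__ == "__main__":
    print(build_items({"VP#1": "Summary for VP 1"}, date(2024, 1, 3)))
```

When such items are written with DynamoDB `PutItem`, which replaces any existing item with the same key, a rerun in the same week overwrites that week's insights instead of duplicating them.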

SOLUTION

GeekyAnts implemented a robust ETL pipeline and AI agent architecture to automate the insight lifecycle.

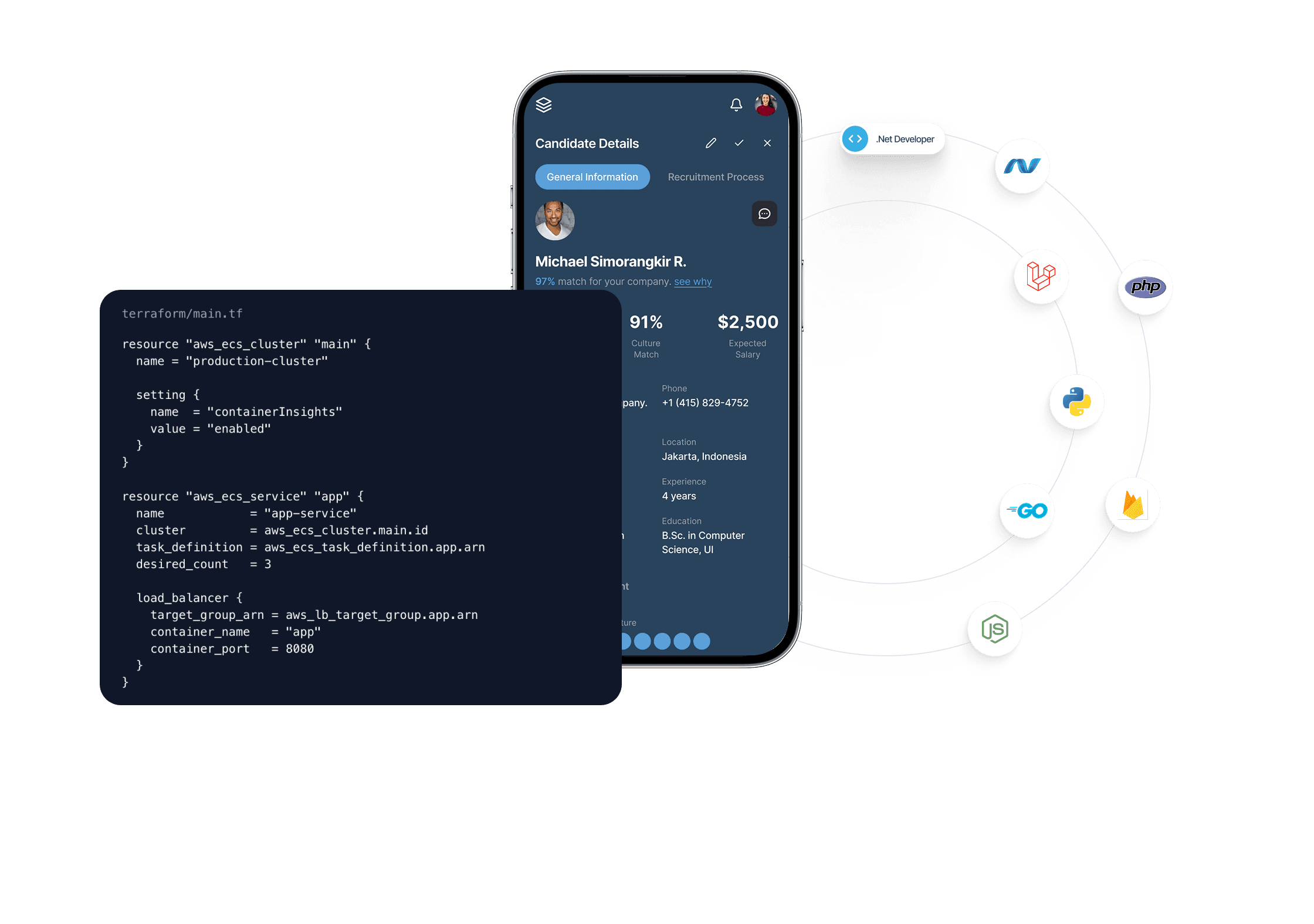

1. Automated Data Extraction: Built an ETL pipeline on AWS ECS (Fargate), triggered by EventBridge, that extracts data from Snowflake through complex queries spanning multiple business units.

2. AI-Powered Transformation: Developed a "Strand Agent" integrating AWS Bedrock (Claude) to transform raw data into contextual, human-readable narrative text.

3. Low-Latency Serving: Created an Insight Serving Lambda that retrieves generated summaries from DynamoDB and serves them to managers and dashboards with low latency.
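The serving step could look roughly like the following Python sketch of a Lambda handler behind an API Gateway proxy integration. The `scope_id`/`week` key schema and the query parameters are assumptions, and `InMemoryTable` is a stand-in that mimics the call shape of boto3's DynamoDB `Table.get_item(Key=...)` so the example runs without AWS.

```python
import json
from typing import Any, Protocol


class InsightTable(Protocol):
    # Same call shape as boto3's DynamoDB Table.get_item(Key=...).
    def get_item(self, *, Key: dict[str, Any]) -> dict[str, Any]: ...


class InMemoryTable:
    """Stand-in for a DynamoDB table so the sketch runs without AWS."""

    def __init__(self, items: list[dict[str, Any]]):
        self._items = {(i["scope_id"], i["week"]): i for i in items}

    def get_item(self, *, Key: dict[str, Any]) -> dict[str, Any]:
        item = self._items.get((Key["scope_id"], Key["week"]))
        # DynamoDB omits "Item" from the response when the key is not found.
        return {"Item": item} if item is not None else {}


def _response(status: int, body: dict[str, Any]) -> dict[str, Any]:
    # API Gateway proxy-integration response shape.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def make_handler(table: InsightTable):
    """Build a Lambda-style handler bound to a table (hypothetical key schema)."""

    def handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
        params = event.get("queryStringParameters") or {}
        scope_id, week = params.get("scope_id"), params.get("week")
        if not scope_id or not week:
            return _response(400, {"error": "scope_id and week are required"})
        item = table.get_item(Key={"scope_id": scope_id, "week": week}).get("Item")
        if item is None:
            return _response(404, {"error": "insight not found"})
        return _response(200, {"scope_id": scope_id, "week": week, "summary": item["summary"]})

    return handler


if __name__ == "__main__":
    demo = make_handler(InMemoryTable([{"scope_id": "VP#42", "week": "2024-W10", "summary": "Stable quarter."}]))
    print(demo({"queryStringParameters": {"scope_id": "VP#42", "week": "2024-W10"}}))
```

In a deployed function, `make_handler` would instead receive `boto3.resource("dynamodb").Table(TABLE_NAME)` (with `TABLE_NAME` a hypothetical setting) and be called once at module level; injecting the table keeps the handler testable without AWS credentials.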

CHALLENGES IN EXECUTION & SOLUTIONS

In building the platform, we addressed the challenge of extracting large volumes of data for multiple hierarchy levels by implementing parameterized Snowflake queries with pagination and staged fetches. To resolve cross-account access to Bedrock, we configured IAM trust policies between the roles involved so the pipeline could call the LLM securely.
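A minimal Python sketch of parameterized, paginated, staged fetching follows. It uses the standard-library `sqlite3` module as a stand-in for Snowflake so it runs anywhere; the `employees` table and its columns are hypothetical, and Snowflake's Python connector uses its own bind-parameter syntax. Each business unit is fetched as a separate stage, and each page resumes after the last key seen (keyset pagination) instead of using `OFFSET`.

```python
import sqlite3
from typing import Iterable, Iterator


def fetch_in_pages(
    conn: sqlite3.Connection, business_unit: str, page_size: int = 1000
) -> Iterator[list[tuple[str, str]]]:
    """Yield (employee_id, team) rows for one business unit, page by page.

    Values are bound as parameters, never formatted into the SQL text, and
    each page resumes after the last employee_id already returned.
    """
    last_id = ""  # every non-empty id sorts after the empty string
    while True:
        rows = conn.execute(
            "SELECT employee_id, team FROM employees "
            "WHERE business_unit = ? AND employee_id > ? "
            "ORDER BY employee_id LIMIT ?",
            (business_unit, last_id, page_size),
        ).fetchall()
        if not rows:
            return
        yield rows
        last_id = rows[-1][0]


def staged_fetch(
    conn: sqlite3.Connection, business_units: Iterable[str], page_size: int = 1000
) -> Iterator[tuple[str, list[tuple[str, str]]]]:
    """Fetch one business unit (stage) at a time; only one page is held at once."""
    for unit in business_units:
        for page in fetch_in_pages(conn, unit, page_size):
            yield unit, page


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employees (employee_id TEXT PRIMARY KEY, team TEXT, business_unit TEXT)")
    conn.executemany(
        "INSERT INTO employees VALUES (?, ?, ?)",
        [("e1", "t1", "bu1"), ("e2", "t1", "bu1"), ("e3", "t2", "bu1"), ("e4", "t3", "bu2")],
    )
    for unit, page in staged_fetch(conn, ["bu1", "bu2"], page_size=2):
        print(unit, page)
```

Binding values as parameters avoids building SQL through string formatting, and keyset pagination keeps each page query cheap because later pages do not have to skip over earlier rows.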

We also worked within ETL runtime constraints by running the ETL logic on ECS (Fargate) instead of Lambda, which gives better resource control over long-running jobs. Finally, we added prompt templates and structured output parsing to the Strand Agent so that insights are generated in a consistent, uniform format.
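The prompt-template and structured-output idea can be sketched in Python as below. The template text, the `headline`/`summary` fields, and the brace-slicing step are assumptions for illustration, not the Strand Agent's actual prompts or parser.

```python
import json
from string import Template

# Hypothetical template; the case study does not publish its prompts.
INSIGHT_PROMPT = Template(
    "You are writing a role confirmation insight for $level '$scope'.\n"
    "Data (JSON): $data\n"
    'Respond with JSON only: {"headline": str, "summary": str}'
)

REQUIRED_KEYS = {"headline": str, "summary": str}


def render_prompt(level: str, scope: str, data: dict) -> str:
    # sort_keys makes identical inputs produce byte-identical prompts.
    return INSIGHT_PROMPT.substitute(
        level=level, scope=scope, data=json.dumps(data, sort_keys=True)
    )


def parse_insight(raw: str) -> dict[str, str]:
    """Parse and validate model output; raise ValueError if it is malformed."""
    # Tolerate prose around the JSON object by slicing to the outermost braces.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object in model output")
    obj = json.loads(raw[start : end + 1])  # JSONDecodeError is a ValueError
    for key, typ in REQUIRED_KEYS.items():
        if not isinstance(obj.get(key), typ) or not obj[key].strip():
            raise ValueError(f"missing or empty field: {key}")
    return {key: obj[key].strip() for key in REQUIRED_KEYS}


if __name__ == "__main__":
    print(render_prompt("team", "Platform", {"confirmed": 12, "pending": 3}))
    print(parse_insight('Here you go: {"headline": "Roles stable", "summary": "12 of 15 confirmed."}'))
```

A `ValueError` from `parse_insight` gives the caller a clear signal to retry the model call or route the item for review, rather than storing malformed text.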

1. Large Data Extraction Across Hierarchy Levels

2. Bedrock Cross-Account Access

3. ETL Runtime Constraints

4. Insight Consistency

OUR APPROACH

To ensure predictable delivery and strong technical foundations, we followed a milestone-driven, discovery-first approach:

- Product discovery & requirement deep-dive

- UX/UI design & rapid prototyping

- AI architecture & insight-generation logic design

- MVP development & integration

- Testing, iteration, and launch readiness

Product Discovery & AI Feasibility

- Conducted detailed discussions to understand hierarchy levels, business units, and internal role confirmation scopes.

- Evaluated AWS Bedrock (Claude) for managed LLM access to ensure enterprise-grade IAM integration and compliance.
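For cross-account Bedrock access, a role in the account that hosts Bedrock can carry a trust policy that lets the pipeline's role assume it. The Python sketch below only generates that policy document; the account ID and role name are hypothetical placeholders, and the case study does not show its actual policies.

```python
import json


def bedrock_trust_policy(caller_account_id: str, caller_role_name: str) -> str:
    """Build a trust policy letting a role in another AWS account assume
    the role it is attached to (here, a hypothetical Bedrock-access role)."""
    if not (len(caller_account_id) == 12 and caller_account_id.isdigit()):
        raise ValueError("AWS account IDs are 12-digit numbers")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{caller_account_id}:role/{caller_role_name}"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # Placeholder account ID and role name, for illustration only.
    print(bedrock_trust_policy("111122223333", "insight-etl-task-role"))
```

Two further pieces are needed in a typical setup: the calling role's own permissions must allow `sts:AssumeRole` on the target role's ARN, and the target role needs a permissions policy that allows actions such as `bedrock:InvokeModel`. At runtime the caller obtains temporary credentials through STS `AssumeRole` (for example, boto3's `sts` client `assume_role`).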

Experience Design

- Designed the "Insight Serving" flow to ensure summaries are easily accessible to VPs and managers via downstream APIs and dashboards.

- Focused on "human-readable" outputs so that the AI-generated text met the required professional tone and accuracy.

AI Insight Engine Development

1. Built the Strand Agent as a reusable layer to manage LLM prompts, context construction, and data transformations.

2. Refined prompt templates to ensure consistent insight generation across various employee roles.

Backend & Infrastructure Engineering

- Selected AWS ECS (Fargate) over Lambda for ETL to handle long-running extraction jobs and complex Snowflake queries.

- Utilized Terraform and Terraform Cloud for consistent infrastructure management and DynamoDB for scalable, low-latency storage.

Testing, Iteration & Launch Support

- Verified ECS task logs for data integrity and compared AI insights against human-written samples for tone and accuracy.

- Validated the event-driven architecture to ensure consistent weekly insight refreshes via EventBridge.
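Comparing AI insights against human-written samples can be partly automated. The Python sketch below uses the standard-library `difflib.SequenceMatcher` on word sequences as a rough lexical screen; the metric and the 0.6 threshold are assumptions, since the case study does not say how the comparison was scored.

```python
from difflib import SequenceMatcher


def similarity(generated: str, reference: str) -> float:
    """Word-level similarity in [0, 1] between a generated insight and a
    human-written sample (1.0 means identical word sequences, ignoring case)."""
    a, b = generated.lower().split(), reference.lower().split()
    return SequenceMatcher(None, a, b).ratio()


def flag_for_review(pairs: list[tuple[str, str]], threshold: float = 0.6) -> list[int]:
    """Return indices of generated insights whose similarity to their
    reference falls below the (hypothetical) threshold."""
    return [i for i, (gen, ref) in enumerate(pairs) if similarity(gen, ref) < threshold]


if __name__ == "__main__":
    samples = [
        ("Twelve of fifteen roles are confirmed.", "Twelve of fifteen roles are confirmed."),
        ("Growth looks strong overall.", "Three roles remain pending review."),
    ]
    print(flag_for_review(samples))
```

Lexical overlap says nothing direct about tone or factual accuracy, so a check like this would only prioritize which insights a reviewer reads first.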

PROJECT RESULTS

The Mobility project established a fully automated AI-driven insight generation pipeline for the MyBOS platform. It ensures weekly refreshed insights across organizational hierarchies and provides consistent, data-backed summaries using secure AWS-native components.

