The following is an excerpt from Tejas’s keynote address at thegeekconf, where he takes us on a deep dive into AI engineering and the potential for anyone to become an AI engineer.

I’m excited to talk to you about something important today. The conference is React Native x Modern Web, and I’m here to discuss the modern web. But today, the thing that unites us all here is AI, specifically generative AI, and I know it’s a hyped topic, but I’m going to strip away the hype today and give you a balanced take on AI engineering. By the end of this, I’m hoping to convince you that you could be an AI engineer, too.

Now, if I asked how confident you are that you could become an AI engineer, what would you say? Show of hands? Okay, a few brave souls. But by the end of this talk, I’m betting more of you will be raising your hands. Why? Because I’m all about eliminating imposter syndrome and helping you realize that you can do your best work and live your best life in tech.

Imposter Syndrome—We’ve All Been There, but It’s Time to Let It Go

So, with that introduction, my name is Tejas—that’s pronounced contagious, but don’t worry, I’m not. Over the years, I’ve worked at various tech companies in different roles—as a consultant, employee, whatever. And I’ve learned from the best minds in the industry. Today, I’m here to share what I've learned with you, not just the things I’ve experienced but also what I’ve learned from the experts.

Currently, I’m a Developer Relations Engineer at DataStax. We do a lot with generative AI and, most importantly, we make a lot of open-source gen AI tools, which is why I joined the company in the first place. I believe in the power of open source, and I believe generative AI can bring some amazing things to life.

But enough about me. Let's get into what we're really here for: AI engineering. Before coming here, I talked to several folks and asked, "Do you feel like you could be an AI engineer?" Just like in this room, only about 1%, maybe half a percent, said yes. The rest said, "I don't know Python, linear algebra, or TensorFlow," or "I didn't go to university."

Guess what? None of that matters. You don’t need to be a genius with machine learning models or have a math degree to get into AI engineering. Let’s eliminate that self-doubt right now.

The Biggest Misconception: AI Engineering ≠ Machine Learning Research

People make the mistake of confusing AI engineering with machine learning engineering—or worse, with machine learning research. These are totally different things. Research is all about science and theory. Engineering? That’s about applying science to solve real-world problems.

Here’s the big idea—AI engineering isn’t about the complex stuff like training models. It’s about using APIs, solving problems, and bringing machine learning to life in applications.

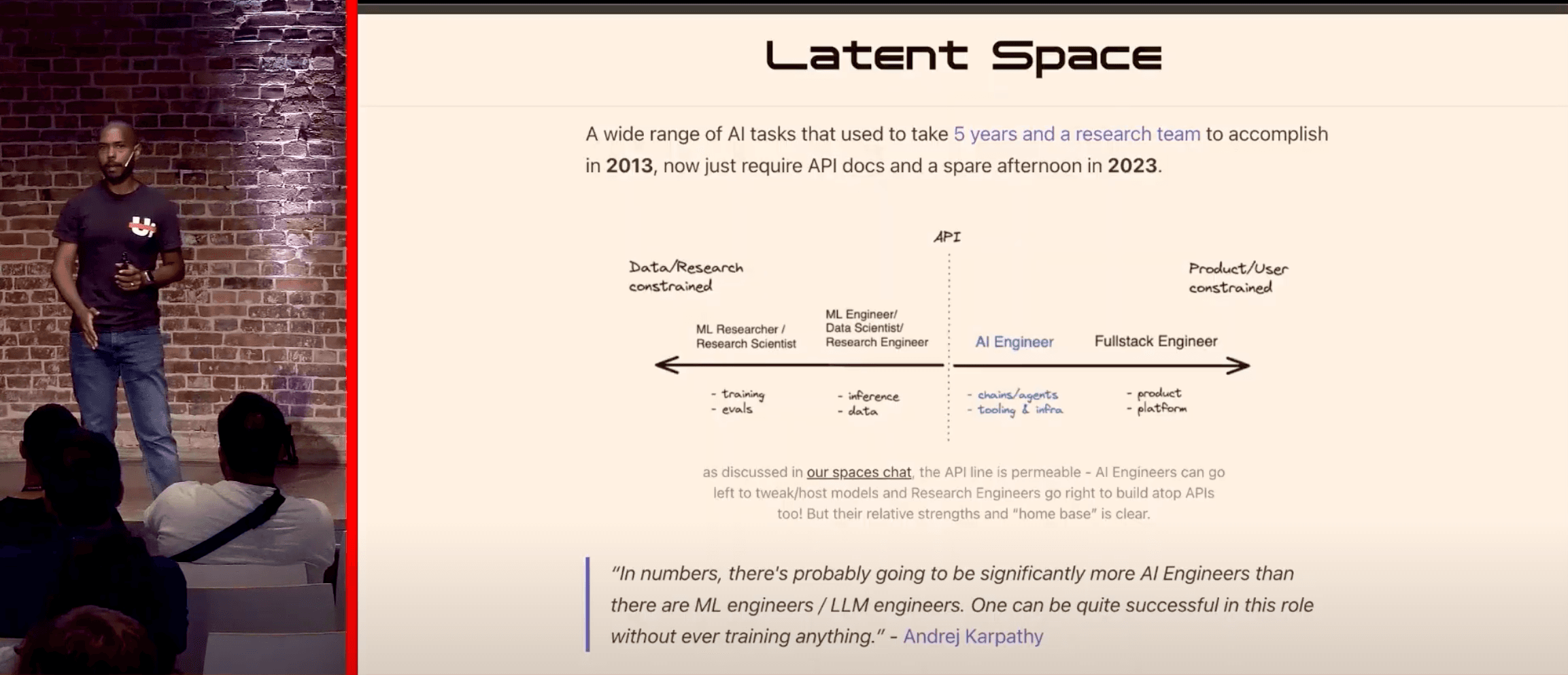

There's a great blog called Latent Space by my friend Shawn Wang. In one of his posts, "The Rise of the AI Engineer," there's a fantastic diagram that perfectly illustrates what AI engineering is.

On one end of the diagram, machine learning research focuses on things like model training. Next comes machine learning engineering, doing things like inference and generating synthetic data. Then there's a dividing line, and that line is the API. Everything after the API is AI engineering territory.

Spoiler Alert—You’re Already Doing AI Engineering if You’ve Called an API

If you've ever made a fetch request in a React Native app to an HTTP API with a large language model behind it, congratulations! You've been doing AI engineering. Shawn's post puts it perfectly: AI engineering is about applying tech to solve problems, and if you're calling APIs and working with machine learning outputs, that's AI engineering.
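
To make that concrete, here is a minimal TypeScript sketch of such a call. It assumes an OpenAI-style chat completions endpoint (`https://api.openai.com/v1/chat/completions`) and the `gpt-3.5-turbo` model name; swap in whatever provider and model you actually use. The `fetchFn` parameter is an assumption of this sketch so the same function works with the global `fetch` in React Native, browsers, or Node 18+, and can be replaced by a stub in tests.

```typescript
// Minimal shape of the fetch function this sketch relies on. The global
// fetch in React Native, browsers, and Node 18+ is compatible with it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<any> }>;

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Sends one question to an OpenAI-style chat completions endpoint and
// returns the text of the model's first reply.
async function askModel(
  question: string,
  apiKey: string,
  fetchFn: FetchLike,
  model = "gpt-3.5-turbo", // assumed model name; use whatever your provider offers
): Promise<string> {
  const messages: ChatMessage[] = [{ role: "user", content: question }];
  const res = await fetchFn("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  });
  if (!res.ok) throw new Error(`LLM API returned HTTP ${res.status}`);
  const data = await res.json();
  // The generated text lives in the first choice's message.
  return data.choices[0].message.content;
}
```

In an app you would call it as `askModel("What should I watch tonight?", apiKey, fetch)`. In production, keep the API key on a server you control rather than shipping it inside the app bundle.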

There's another critical point: you don't need to train models to be successful. Andrej Karpathy, one of the masterminds behind OpenAI and Tesla's self-driving efforts, has said the same. He believes there will be more AI engineers than machine learning engineers, and that you can excel in the role without ever training anything.

Here’s the Bottom Line—You Don’t Need to Train Models to be an AI Engineer

You can be highly successful as an AI engineer without ever touching the complex world of model training. It’s all about problem-solving and integrating machine learning models into apps.

This role is likely going to be one of the most in-demand jobs of the decade. Where there’s hype, there’s opportunity. And trust me, this isn’t like crypto hype. Generative AI has already shown immense value—from medical breakthroughs to transforming learning.

So, let’s dig a little deeper. What exactly is AI? And why is it the most talked-about technology right now?

AI—A Lot More Than Hype, but It’s Not All Magic

There are different kinds of AI. We've got rule-based AI, which is essentially a system of if-else statements: a developer writes the rules ahead of time, and the computer simulates intelligence. Remember Pac-Man? That's rule-based AI. When you eat a power pellet, the ghosts turn blue and run away. That's all scripted behavior.
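
Here is a toy TypeScript sketch of what "a system of if-else statements" means in practice. The game-state fields and thresholds are made up for illustration; this is not the actual Pac-Man logic.

```typescript
// A toy rule-based "AI": every behaviour is a rule a developer wrote
// ahead of time. Nothing is learned from data.
type GhostMode = "chase" | "scatter" | "flee";

interface GameState {
  powerUpMsLeft: number; // time left on the player's power-up (hypothetical field)
  distanceToPlayer: number; // in maze tiles (hypothetical field)
}

function chooseGhostMode(state: GameState): GhostMode {
  // Rule 1: while the player is powered up, turn blue and run away.
  if (state.powerUpMsLeft > 0) {
    return "flee";
  }
  // Rule 2: if the player is close, chase them.
  if (state.distanceToPlayer < 8) {
    return "chase";
  }
  // Rule 3: otherwise, wander back toward a home corner.
  return "scatter";
}

console.log(chooseGhostMode({ powerUpMsLeft: 3000, distanceToPlayer: 2 })); // → flee
```

The "intelligence" is entirely in the rule order: the ghost looks smart only because a developer anticipated each situation.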

Then there's predictive AI, rooted in a mathematical model called the Markov chain, which dates back to 1906. A Markov chain predicts the next state based only on the current one. If you've ever used the word suggestions above your phone keyboard, you've experienced predictive AI.
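
A first-order Markov chain over words is enough to sketch keyboard-style suggestions: count which word follows which, then suggest the most frequent follower of the current word. This is a minimal TypeScript illustration under that assumption, not how any particular phone keyboard is implemented.

```typescript
// Transition counts: current word -> (next word -> times seen).
type BigramModel = Map<string, Map<string, number>>;

// "Train" the chain by counting word-to-word transitions in a corpus.
function buildModel(corpus: string): BigramModel {
  const words = corpus.toLowerCase().split(/\s+/).filter((w) => w.length > 0);
  const model: BigramModel = new Map();
  for (let i = 0; i < words.length - 1; i++) {
    const counts = model.get(words[i]) ?? new Map<string, number>();
    counts.set(words[i + 1], (counts.get(words[i + 1]) ?? 0) + 1);
    model.set(words[i], counts);
  }
  return model;
}

// Predict the next word from the current word only (the Markov property).
// Returns undefined if the word never appeared with a follower.
function suggestNext(model: BigramModel, current: string): string | undefined {
  const counts = model.get(current.toLowerCase());
  if (!counts) return undefined;
  let best: string | undefined;
  let bestCount = 0;
  counts.forEach((count, word) => {
    if (count > bestCount) {
      best = word;
      bestCount = count;
    }
  });
  return best;
}

const model = buildModel("i love react i love typescript i love react native");
console.log(suggestNext(model, "love")); // → react
```

Real keyboards use far richer models, but the core idea is the same: the next state is predicted from the current one.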

But the real star of the show today is generative AI. It all started with a 2017 paper from Google called "Attention Is All You Need." This groundbreaking paper introduced the Transformer model, which powers much of what we see in large language models like GPT.

Generative AI—The Magic Behind ChatGPT and More, but It’s Not Perfect

The Transformer model lets these systems pay attention to the whole context at once, weighing every word against every other word in parallel rather than processing one token at a time. This is the key to how large language models generate coherent text.
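
The core computation from that paper is scaled dot-product attention, softmax(QKᵀ / √d) V: each token's query vector is compared with every token's key vector, and the resulting weights mix the value vectors. Below is a minimal single-head TypeScript sketch on plain arrays; real models add learned projections, multiple heads, and masking, and run this as matrix multiplications on GPUs.

```typescript
type Matrix = number[][]; // one row per token

function softmax(scores: number[]): number[] {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.
// Each query row is computed independently of the others, which is why
// all tokens can be processed in parallel.
function attention(Q: Matrix, K: Matrix, V: Matrix): Matrix {
  const d = K[0].length;
  return Q.map((q) => {
    // How strongly this token attends to every token in the context.
    const scores = K.map((k) => k.reduce((acc, kj, j) => acc + kj * q[j], 0) / Math.sqrt(d));
    const weights = softmax(scores);
    // Output: attention-weighted average of the value vectors.
    return V[0].map((_, col) => weights.reduce((acc, w, i) => acc + w * V[i][col], 0));
  });
}
```

When all keys look the same, the weights are uniform and each output row is simply the average of the value rows; when a query matches one key more closely, that token's value dominates.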

But here’s the thing—generative AI is far from perfect. It has major issues, like hallucinations, where it confidently spits out completely wrong information. Ever heard of someone asking Google’s AI how many cigarettes a pregnant woman should smoke, and it said one to three? Yeah, that’s a problem.

And there’s more. These systems have limited memory—they can only hold so much context in a given thread. Even the most advanced models today, like Google’s Gemini, cap out at around 2 million tokens. That might seem like a lot, but it’s just 10 years’ worth of text messages.
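
Because of that limit, an app has to decide what goes into each request. One common approach, sketched here in TypeScript, is to keep the system prompt plus the most recent messages that fit a token budget. The four-characters-per-token estimate is only a rough heuristic for English text (an assumption of this sketch); a real app would count tokens with the model's own tokenizer.

```typescript
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

// Rough estimate: about 4 characters per token for English text (heuristic only).
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keep all system messages plus the newest other messages that fit in `budget` tokens.
function fitToContext(history: Message[], budget: number): Message[] {
  const system = history.filter((m) => m.role === "system");
  let used = system.reduce((sum, m) => sum + estimateTokens(m.content), 0);
  const kept: Message[] = [];
  // Walk backwards so the most recent turns are kept first.
  for (let i = history.length - 1; i >= 0; i--) {
    const m = history[i];
    if (m.role === "system") continue;
    const cost = estimateTokens(m.content);
    if (used + cost > budget) break; // older messages no longer fit
    kept.unshift(m);
    used += cost;
  }
  return [...system, ...kept];
}
```

Dropping the oldest turns is the simplest policy; it is also why long conversations "forget" their beginning.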

And don't forget the knowledge cutoff. A model only knows what was in its training data, and that data stops at a fixed date. Ask GPT-3.5 for tomorrow's weather, and it's clueless.

So, how do we fix these problems? That's where AI engineering comes in.

The Game-Changer: RAG (Retrieval Augmented Generation)

There's a better way to tackle these challenges, and it's called RAG: retrieval augmented generation. It sounds fancy, but all it means is this: get real data from reliable sources. You retrieve the relevant data (from an API, a database, or your own documents), augment the prompt with it, and then let the model generate its answer from that data. This helps with all three problems: it reduces hallucinations, keeps you within the context length by sending only the relevant pieces, and works around the knowledge cutoff.
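
Here is the retrieve, augment, generate loop as a minimal TypeScript sketch. `Retrieve` and `Generate` are hypothetical signatures you would wire to your own search (for example a vector store) and to an LLM API; only the augmentation step, pasting the retrieved text into the prompt, is spelled out, and the prompt wording is just one reasonable choice.

```typescript
// Hypothetical signatures: plug in your own retrieval and LLM call.
type Retrieve = (question: string, topK: number) => Promise<string[]>;
type Generate = (prompt: string) => Promise<string>;

// Augment: put the retrieved passages into the prompt and tell the model
// to answer from them rather than from memory.
function buildPrompt(question: string, passages: string[]): string {
  const context = passages.map((p, i) => `[${i + 1}] ${p}`).join("\n");
  return [
    "Answer the question using only the context below.",
    "If the context does not contain the answer, say you don't know.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

// Retrieve, augment, generate.
async function answerWithRag(
  question: string,
  retrieve: Retrieve,
  generate: Generate,
  topK = 3,
): Promise<string> {
  const passages = await retrieve(question, topK);
  return generate(buildPrompt(question, passages));
}
```

Telling the model to admit when the context lacks the answer is what turns retrieval into a guard against hallucination rather than just extra text in the prompt.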

No More Hallucinations—RAG Gets You the Truth

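RAG pipelines are simple. You need three things: authoritative data, an embedding model, and a vector store. The embedding model turns each piece of your data into a vector, a list of numbers that captures its meaning, and the vector store keeps those vectors so that, when a question comes in, it can quickly find the pieces whose vectors are closest to the question's. With this setup, you feed real data into your AI pipeline, so the model answers from accurate, relevant information instead of guessing.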
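
A minimal in-memory TypeScript sketch of the embedding-plus-vector-store half follows. The `embed` function is a toy bag-of-words hashing stand-in (an assumption, so the example runs without any service); a real pipeline would call an embedding model instead, and a dedicated vector database would handle the nearest-neighbour search at scale.

```typescript
type Vector = number[];

// Toy stand-in for an embedding model (illustration only): hashes each word
// into one of `dims` buckets and scales the counts to unit length.
function embed(text: string, dims = 64): Vector {
  const v: Vector = new Array(dims).fill(0);
  for (const word of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    let h = 0;
    for (let i = 0; i < word.length; i++) h = (h * 31 + word.charCodeAt(i)) % dims;
    v[h] += 1;
  }
  const norm = Math.sqrt(v.reduce((s, x) => s + x * x, 0)) || 1;
  return v.map((x) => x / norm);
}

// Cosine similarity; vectors from embed() are unit length, so it is a dot product.
function cosine(a: Vector, b: Vector): number {
  return a.reduce((s, x, i) => s + x * b[i], 0);
}

// In-memory vector store: keeps (vector, text) pairs, returns the closest texts.
class InMemoryVectorStore {
  private rows: { vector: Vector; text: string }[] = [];

  add(text: string): void {
    this.rows.push({ vector: embed(text), text });
  }

  search(query: string, topK: number): string[] {
    const q = embed(query);
    return this.rows
      .map((r) => ({ text: r.text, score: cosine(q, r.vector) }))
      .sort((a, b) => b.score - a.score)
      .slice(0, topK)
      .map((r) => r.text);
  }
}
```

With this toy `embed`, matching is keyword-level; replacing it with a real embedding model is what lets "Best Picture winner" find a passage that only says "top film award".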

Let me show you RAG in action. At DataStax, we use RAG in a fully open-source tool called Langflow. It's powerful, and you can run it locally. Now, if you ask a question like, "Who won the Oscar for Best Picture in 2024?" GPT-3.5 won't know the answer, but with RAG, we can pull data from a reliable source like the British Film Institute and feed it into the pipeline. The result is an accurate, up-to-date answer.

This is what’s next in AI. But please, don’t build another chatbot. Chatbots are so 2022.

The Future is Generative UI, Not Chatbots

I want to leave you with this breakthrough: generative UI is where AI meets real user experience. Large language models like GPT can now call functions: you describe the tools your app offers, the model decides which one to use and with what arguments, and your app runs it. That lets the model trigger tools to handle more complex tasks, which opens up a whole new world of possibilities beyond chatbots.
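
Under the hood, the model never runs anything itself: it replies with a tool name and JSON-encoded arguments, and your code does the work. This TypeScript sketch assumes the OpenAI-style `tools` / `tool_calls` shape; `show_movie_trailer`, `TrailerPlayer`, and the `UiSpec` type are hypothetical examples for a generative UI, where a tool's result is a description of a component to render rather than more text.

```typescript
// Tool definition in the OpenAI-style "tools" format, sent with the chat request.
const tools = [
  {
    type: "function",
    function: {
      name: "show_movie_trailer", // hypothetical tool
      description: "Show the trailer for a movie the user asked about.",
      parameters: {
        type: "object",
        properties: { title: { type: "string", description: "Movie title" } },
        required: ["title"],
      },
    },
  },
];

// Shape of one entry in the model's `tool_calls` reply.
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string }; // arguments is a JSON string
}

// What a generative UI renders: a component name plus its props (hypothetical).
interface UiSpec {
  component: string;
  props: Record<string, unknown>;
}

// Local handlers, one per tool name the model may choose.
const handlers: Record<string, (args: any) => UiSpec> = {
  show_movie_trailer: (args: { title: string }) => ({
    component: "TrailerPlayer", // hypothetical component in your app
    props: { title: args.title },
  }),
};

// Run the tool the model picked and return the UI to render.
function dispatchToolCall(call: ToolCall): UiSpec {
  const name = call.function.name;
  if (!Object.prototype.hasOwnProperty.call(handlers, name)) {
    throw new Error(`Unknown tool: ${name}`);
  }
  return handlers[name](JSON.parse(call.function.arguments));
}
```

Your React Native or web app would then map `UiSpec.component` to a real component, which is the step that turns a model's decision into interactive UI instead of a wall of chat text.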

For example, I built a project called Movies++ to solve my frustration with Netflix’s search functionality. Using generative UI and RAG, I built an experience where you can search for movies, watch trailers, and get reviews—all in a seamless, interactive UI.

Generative UI is the future. It allows for interactive, intelligent interfaces that adapt to users’ needs.

Generative AI is hyped, and for good reason. You can be an AI engineer if you can call an API that hides a large language model behind it. I invite you to join this space. There's room at the table for everyone. Thanks so much for having me, and I can't wait to see what you're going to build next!
