MCP in Action: A Developer's Take on Smarter Service Coordination

How building a comprehensive MCP server ecosystem transformed our AI agents from isolated language models into powerful, connected systems

The AI Agent Limitation Problem

When we first started building AI-powered services for our organization, we quickly hit a fundamental wall. Our AI agents were incredibly sophisticated at language understanding and generation, but they existed in a vacuum. They could process and analyze information beautifully, but they couldn't:

- Search the web for real-time information

- Access our internal tools like Jira or GitHub

- Read files from our shared drives

- Query our databases

- Interact with our productivity systems

This limitation meant our AI agents were like brilliant researchers locked in a library with only outdated books. They had impressive analytical capabilities but no way to access current information or take meaningful actions in our digital ecosystem.

The traditional approach to solving this problem was to build separate, custom tools for each integration we needed. Want your AI to search the web? Build a custom web search wrapper. Need GitHub integration? Create a bespoke GitHub API client. Database access? Write another custom connector. Each integration meant:

- Months of development time building custom API wrappers and authentication systems

- Complex maintenance overhead as APIs changed and authentication methods evolved

- Inconsistent interfaces across different tools, making it difficult for AI agents to learn and adapt

- Security vulnerabilities from implementing authentication and data handling from scratch

- Tight coupling between our AI logic and specific service implementations

We faced the classic integration complexity problem that has plagued software development for decades – but amplified by the unique challenges of connecting AI models with various tools and data sources. Every new service we wanted to integrate required starting from scratch, and the cognitive load on our development team was becoming unsustainable.

Enter Model Context Protocol (MCP): Breaking Down AI's Walls

Model Context Protocol, developed by Anthropic, addresses this exact problem. MCP is an open, standardized protocol that lets AI applications connect securely to external data sources and tools, extending their capabilities well beyond core language processing.

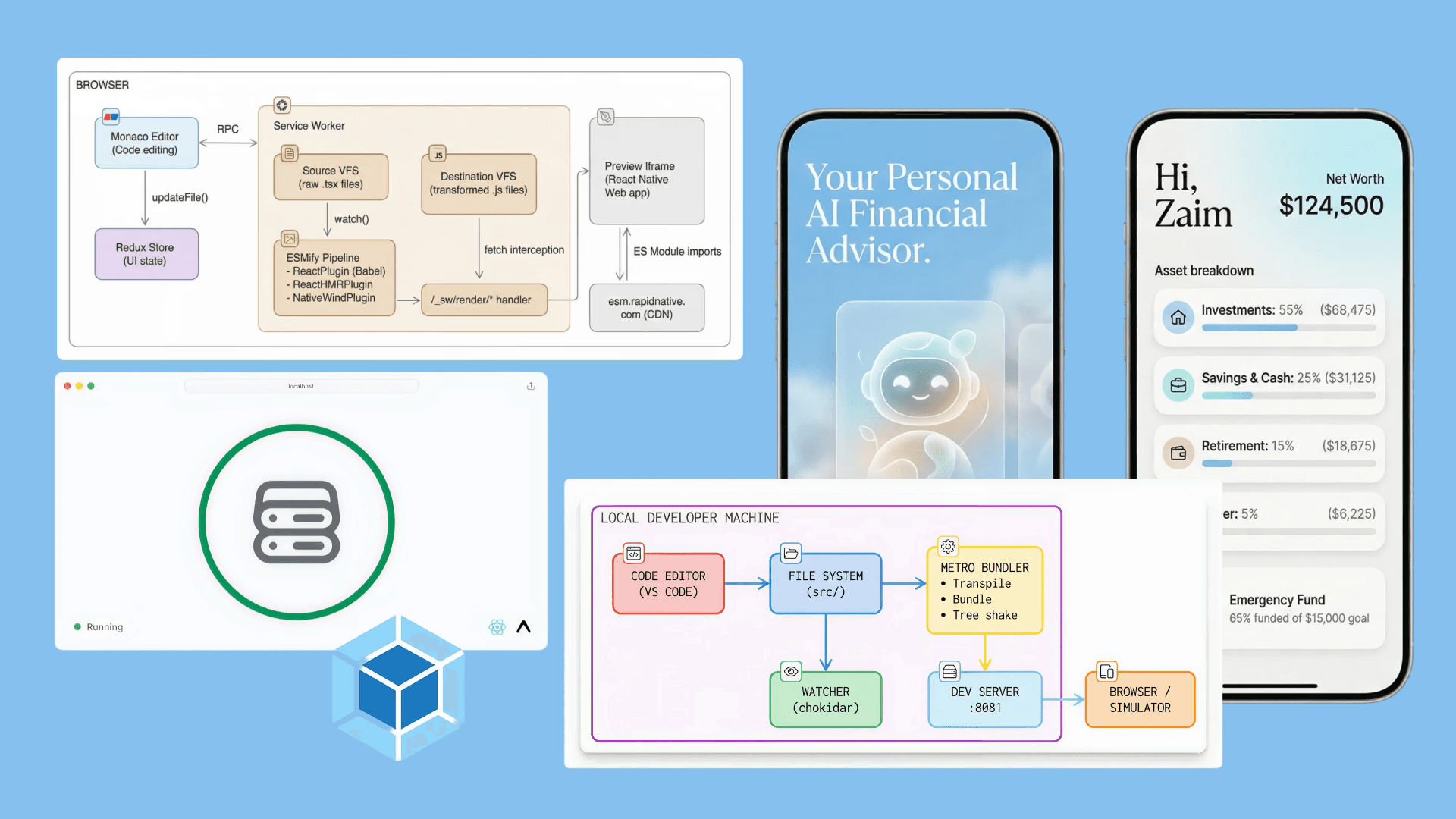

The protocol works on a simple but powerful premise: instead of building monolithic AI systems that try to do everything internally, we can create modular "servers" that each provide specific capabilities – web search, file access, database queries, API integrations – and allow AI agents to dynamically discover and use these capabilities as needed.
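Concretely, an MCP client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`, both carried as JSON-RPC 2.0 messages. The sketch below models that exchange in memory so it runs without a transport. The method names, the `-32602` code for unknown tools, and the `isError` flag follow the MCP specification; the `ToolServer` class and everything else are hypothetical. Real servers built with the official SDKs handle this dispatch for you.

```typescript
// Sketch of MCP tool discovery and invocation as JSON-RPC 2.0 messages.
// Method names and result shapes follow the MCP spec; ToolServer is hypothetical
// and skips the transport layer (stdio or HTTP) entirely.

type JsonRpcRequest = { jsonrpc: '2.0'; id: number; method: string; params?: Record<string, unknown> };
type JsonRpcResponse =
  | { jsonrpc: '2.0'; id: number; result: unknown }
  | { jsonrpc: '2.0'; id: number; error: { code: number; message: string } };

interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: { type: 'object'; properties: Record<string, unknown>; required?: string[] };
}

type ToolHandler = (args: Record<string, unknown>) => Promise<string>;

class ToolServer {
  private tools = new Map<string, { definition: ToolDefinition; handler: ToolHandler }>();

  register(definition: ToolDefinition, handler: ToolHandler): void {
    this.tools.set(definition.name, { definition, handler });
  }

  async handle(request: JsonRpcRequest): Promise<JsonRpcResponse> {
    const { id } = request;
    if (request.method === 'tools/list') {
      // Discovery: the client learns each tool's name, description and argument schema
      const tools = Array.from(this.tools.values(), (t) => t.definition);
      return { jsonrpc: '2.0', id, result: { tools } };
    }
    if (request.method === 'tools/call') {
      // Invocation: params carry the tool name and its arguments
      const name = String(request.params?.name);
      const tool = this.tools.get(name);
      if (!tool) {
        // -32602 (invalid params) is the code the MCP spec uses for unknown tools
        return { jsonrpc: '2.0', id, error: { code: -32602, message: `Unknown tool: ${name}` } };
      }
      const args = (request.params?.arguments ?? {}) as Record<string, unknown>;
      try {
        const text = await tool.handler(args);
        return { jsonrpc: '2.0', id, result: { content: [{ type: 'text', text }] } };
      } catch (err) {
        // Execution failures go in the result with isError, so the model can see and react to them
        const message = err instanceof Error ? err.message : String(err);
        return { jsonrpc: '2.0', id, result: { content: [{ type: 'text', text: message }], isError: true } };
      }
    }
    // -32601 is the standard JSON-RPC "method not found" code
    return { jsonrpc: '2.0', id, error: { code: -32601, message: `Method not found: ${request.method}` } };
  }
}
```

The split between protocol errors (unknown tool, unknown method) and tool execution errors (`isError: true` inside a normal result) matters for agents: the second kind is visible to the model, which can then retry with different arguments or choose another tool.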

This modular approach is changing how we think about AI system architecture. Rather than building isolated AI applications, we're creating AI agents that can orchestrate and coordinate with an entire ecosystem of digital tools and services.

Industry Context: The Rise of Agentic AI

The industry is rapidly moving toward agentic AI systems – AI that can take actions and complete complex tasks rather than just answering questions. This shift represents one of the most significant developments in AI since the emergence of large language models.

However, the transition from conversational AI to agentic AI requires solving the connectivity problem. AI agents need to interact with real-world systems, and MCP provides the standardized infrastructure to make this possible at scale.

Major organizations are recognizing that the competitive advantage lies not just in having powerful AI models, but in how effectively those models can integrate with existing business systems and workflows. MCP is emerging as the de facto standard for this integration layer.

Building Our MCP Server Ecosystem

Rather than continuing down the path of custom integrations, we adopted Model Context Protocol, which provided exactly the standardized approach we needed. Following the official MCP development process, we started building our ecosystem of connected services.

The MCP Development Process

The beauty of MCP lies in its standardized development approach. According to the official documentation, building MCP servers follows a consistent pattern:

- Project Setup: Initialize your project with the appropriate MCP SDK (TypeScript, Python, C#, etc.)

- Server Implementation: Define the capabilities your server will expose — tools, resources, and prompts

- Build & Package: Compile or bundle your server so the host can launch it (for a TypeScript server, compile it to JavaScript run by Node.js)

- Configuration: Add your server to an MCP host configuration (like Claude Desktop)

- Testing & Iteration: Test with real AI agents and refine your implementation

This standardized process meant we could focus on business logic rather than protocol implementation. Each server we built followed the same pattern, making development predictable and maintainable.

Example: Building a Web Search MCP Server

Let me walk through building our Brave Search MCP server to illustrate how straightforward this process became.

The core implementation exposes a single tool that AI agents can discover and use:

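```typescript
import { Server } from '@modelcontextprotocol/sdk/server/index.js';
import { StdioServerTransport } from '@modelcontextprotocol/sdk/server/stdio.js';
import { CallToolRequestSchema, ListToolsRequestSchema } from '@modelcontextprotocol/sdk/types.js';

// Subset of the Brave Search web-search response that this server reads
interface BraveWebResponse {
  query?: { original?: string };
  web?: { results?: { title: string; url: string; description: string }[] };
}

const server = new Server(
  { name: 'brave-search-server', version: '1.0.0' },
  { capabilities: { tools: {} } }
);

// Define what tools this server provides
server.setRequestHandler(ListToolsRequestSchema, async () => ({
  tools: [{
    name: 'brave_search',
    description: 'Search the web using Brave Search API for current information',
    inputSchema: {
      type: 'object',
      properties: {
        query: { type: 'string', description: 'Search query' },
        count: { type: 'number', description: 'Number of results (1-20)', default: 10 }
      },
      required: ['query']
    }
  }]
}));

// Handle tool execution
server.setRequestHandler(CallToolRequestSchema, async (request) => {
  if (request.params.name === 'brave_search') {
    const { query, count = 10 } = (request.params.arguments ?? {}) as { query?: string; count?: number };
    if (!query) throw new Error('Missing required argument: query');
    const apiKey = process.env.BRAVE_API_KEY;
    if (!apiKey) throw new Error('BRAVE_API_KEY is not set');

    // Call the Brave Search web endpoint (global fetch, Node.js 18+),
    // clamping count to the 1-20 range the tool advertises
    const url = new URL('https://api.search.brave.com/res/v1/web/search');
    url.searchParams.set('q', query);
    url.searchParams.set('count', String(Math.min(Math.max(count, 1), 20)));
    const response = await fetch(url, {
      headers: { Accept: 'application/json', 'X-Subscription-Token': apiKey }
    });
    if (!response.ok) throw new Error(`Brave Search API error: ${response.status} ${response.statusText}`);
    const results = (await response.json()) as BraveWebResponse;

    return {
      content: [{
        type: 'text',
        text: JSON.stringify({
          query: results.query?.original || query,
          resultsCount: results.web?.results?.length || 0,
          results: results.web?.results?.map(result => ({
            title: result.title,
            url: result.url,
            description: result.description
          })) || []
        }, null, 2)
      }]
    };
  }

  throw new Error(`Unknown tool: ${request.params.name}`);
});

// Claude Desktop launches this server as a subprocess and talks to it over stdio
async function main() {
  await server.connect(new StdioServerTransport());
}

main().catch((error) => {
  console.error(error);
  process.exit(1);
});
```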

Once configured in Claude Desktop, our AI agents immediately gained web search capabilities. They could now search for current events, find real-time pricing data, research competitors, and verify facts – transforming them from isolated models into connected, informed agents.
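Claude Desktop finds servers through the `mcpServers` object in its `claude_desktop_config.json` file, where each entry gives the command that launches a server plus optional arguments and environment variables. The sketch below builds such an entry for the Brave Search server and serializes it. The `withServer` helper, the `brave-search` key, the script path, and the placeholder API key are assumptions for illustration.

```typescript
// Sketch: build the `mcpServers` entry that Claude Desktop reads from
// claude_desktop_config.json. Server name, path and key value are placeholders.

interface McpServerEntry {
  command: string;              // executable the host spawns
  args?: string[];              // arguments passed to that executable
  env?: Record<string, string>; // environment variables for the server process
}

interface HostConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Return a new config with the server added (or replaced), leaving the input untouched
function withServer(config: HostConfig, name: string, entry: McpServerEntry): HostConfig {
  return { ...config, mcpServers: { ...config.mcpServers, [name]: entry } };
}

const config = withServer({ mcpServers: {} }, 'brave-search', {
  command: 'node',
  args: ['/absolute/path/to/brave-search-server/build/index.js'],
  env: { BRAVE_API_KEY: '<your-api-key>' },
});

// This string is what would be written to claude_desktop_config.json
const json = JSON.stringify(config, null, 2);
console.log(json);
```

Passing the API key through `env` keeps it out of the server's source, which matches the `process.env.BRAVE_API_KEY` lookup in the server code.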

Scaling the Pattern

What made this approach so powerful was its scalability. Once we had built our first MCP server, building additional servers followed the exact same pattern. We quickly developed servers for:

- Filesystem operations for document analysis and content generation

- GitHub integration for code repository management and analysis

- Database connectivity for querying internal data sources

- Email access through Gmail API for customer support workflows

- Project management via Jira integration for development coordination

Each server took days rather than months to develop, and they all followed the same consistent interface patterns. Our AI agents could discover and use new capabilities automatically as we added them to the ecosystem.
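One way an agent can pick up new capabilities automatically is to re-list tools from every connected server and merge them into a single catalog. The sketch below prefixes each tool with its server name so that, say, a GitHub `search` and a Jira `search` do not collide. The `ToolSource` interface (a stand-in for an MCP client connection), the `server__tool` naming scheme, and the `ToolCatalog` class are assumptions, not part of MCP itself.

```typescript
// Sketch: merge tool lists from several MCP servers into one namespaced catalog.
// ToolSource is a hypothetical stand-in for an MCP client connection.

interface ToolInfo { name: string; description: string }

interface ToolSource {
  listTools(): Promise<ToolInfo[]>;
  callTool(name: string, args: Record<string, unknown>): Promise<unknown>;
}

class ToolCatalog {
  private routes = new Map<string, { server: string; tool: string }>();

  constructor(private sources: Map<string, ToolSource>) {}

  // Re-run after adding a server so its tools become visible to the agent
  async refresh(): Promise<ToolInfo[]> {
    this.routes.clear();
    const merged: ToolInfo[] = [];
    for (const [server, source] of Array.from(this.sources)) {
      for (const tool of await source.listTools()) {
        const qualified = `${server}__${tool.name}`;
        this.routes.set(qualified, { server, tool: tool.name });
        merged.push({ name: qualified, description: tool.description });
      }
    }
    return merged;
  }

  // Route a namespaced call back to the server that owns the tool
  async call(qualified: string, args: Record<string, unknown>): Promise<unknown> {
    const route = this.routes.get(qualified);
    if (!route) throw new Error(`Unknown tool: ${qualified}`);
    return this.sources.get(route.server)!.callTool(route.tool, args);
  }
}
```

Because the agent only ever sees the merged list, adding a server is a configuration change plus a `refresh()`; no agent code changes.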

Building the AI Orchestration Layer

With our MCP server ecosystem in place, we built an AI agent service that can dynamically discover and coordinate these capabilities. This service acts as the "brain" that determines which MCP servers to use for different tasks.

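```typescript
// Supporting types: minimal assumed shapes, since the article does not define them
interface Capability { server: string; tool: string; description: string }
interface PlanStep { id: string; server: string; tool: string; args: Record<string, unknown> }
interface ExecutionPlan { steps: PlanStep[] }
interface StepResult { stepId: string; success: boolean; output?: unknown; error?: string }
interface TaskContext { previousResults?: StepResult[] }
interface TaskResult { success: boolean; results: StepResult[]; executionPlan: ExecutionPlan; totalDuration: number }
// Shaped like the MCP TypeScript SDK's Client.callTool({ name, arguments })
interface MCPClient { callTool(params: { name: string; arguments?: Record<string, unknown> }): Promise<unknown> }
interface CapabilityRegistry { getAvailableCapabilities(): Capability[] }
// Typically backed by an LLM call that turns the task into ordered steps
interface TaskPlanner { createPlan(task: string, capabilities: Capability[], context: TaskContext): Promise<ExecutionPlan> }

class MCPOrchestrationService {
  constructor(
    private mcpClients: Map<string, MCPClient>, // one connected client per MCP server
    private capabilityRegistry: CapabilityRegistry,
    private taskPlanner: TaskPlanner
  ) {}

  async executeTask(taskDescription: string, context: TaskContext): Promise<TaskResult> {
    const startTime = Date.now();

    // Use AI to analyze the task and create an execution plan
    const executionPlan = await this.taskPlanner.createPlan(
      taskDescription,
      this.capabilityRegistry.getAvailableCapabilities(),
      context
    );

    const taskResults: StepResult[] = [];
    for (const step of executionPlan.steps) {
      try {
        const result = await this.executeStep(step);
        taskResults.push(result);
      } catch (error) {
        // Handle step failure with fallback strategies
        const fallbackResult = await this.handleStepFailure(step, error, taskResults);
        taskResults.push(fallbackResult);
      }
      // Update context with results (including failures) for subsequent steps
      context.previousResults = taskResults;
    }

    return {
      success: taskResults.every(r => r.success),
      results: taskResults,
      executionPlan,
      totalDuration: Date.now() - startTime,
    };
  }

  // Route a step to the client for the MCP server that owns the tool
  private async executeStep(step: PlanStep): Promise<StepResult> {
    const client = this.mcpClients.get(step.server);
    if (!client) throw new Error(`No MCP client for server: ${step.server}`);
    const output = await client.callTool({ name: step.tool, arguments: step.args });
    return { stepId: step.id, success: true, output };
  }

  // Placeholder fallback: record the failure so later steps and the caller can see it.
  // A fuller version might retry or reroute to another server with the same capability.
  private async handleStepFailure(step: PlanStep, error: unknown, _previousResults: StepResult[]): Promise<StepResult> {
    return { stepId: step.id, success: false, error: error instanceof Error ? error.message : String(error) };
  }
}
```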

This orchestration layer enables our AI agents to handle complex, multi-step workflows that require coordination across multiple systems.

Real-World Impact: From Limited AI to Comprehensive Automation

The transformation from isolated AI agents to MCP-connected systems has been dramatic. Let me share some concrete examples of how this has changed our capabilities.

Research and Analysis Workflows

Before MCP: Our AI agents could analyze documents we manually provided, but they couldn't gather current information or access our knowledge bases.

After MCP: AI agents can now conduct comprehensive research by:

- Searching the web with Brave/DuckDuckGo for current information

- Accessing our internal documentation in Google Drive

- Querying our knowledge bases through the AWS KB retrieval server

- Cross-referencing information from multiple sources

- Generating reports with up-to-date citations

Example Task: "Analyze the competitive landscape for our new product feature"

The AI agent:

- Uses Brave Search to find recent competitor announcements

- Accesses our internal competitive analysis documents via Google Drive

- Queries our customer feedback database via the SQLite server

- Searches GitHub for relevant open-source implementations

- Compiles a comprehensive analysis with current market data
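The first four steps do not depend on each other, so an orchestrator can run them concurrently and still produce a partial report if one source is unavailable. A minimal sketch using `Promise.allSettled`; the `ResearchStep` shape, the `gatherResearch` function, and the stubbed `run` functions are hypothetical stand-ins for calls to the Brave Search, Google Drive, SQLite, and GitHub servers.

```typescript
// Sketch: run independent research steps concurrently and keep whatever succeeds.
// Each step's `run` is a hypothetical stand-in for an MCP tool call.

interface ResearchStep { source: string; run: () => Promise<string> }

interface ResearchOutcome {
  findings: Record<string, string>; // source -> result text
  failures: Record<string, string>; // source -> error message
}

async function gatherResearch(steps: ResearchStep[]): Promise<ResearchOutcome> {
  // allSettled never rejects, so one failing source cannot abort the others
  const settled = await Promise.allSettled(steps.map((s) => s.run()));
  const outcome: ResearchOutcome = { findings: {}, failures: {} };
  settled.forEach((result, i) => {
    const source = steps[i].source;
    if (result.status === 'fulfilled') {
      outcome.findings[source] = result.value;
    } else {
      outcome.failures[source] = result.reason instanceof Error ? result.reason.message : String(result.reason);
    }
  });
  return outcome;
}
```

The final "compile the analysis" step can then cite what was found and state explicitly which sources were missing, rather than failing the whole task.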

Development and Project Management

Before MCP: AI could help with code review and documentation, but had no visibility into our actual projects or repositories.

After MCP: AI agents can now:

- Monitor Jira projects and identify bottlenecks

- Analyze GitHub repositories and suggest improvements

- Cross-reference code issues with project timelines

- Automate routine project management tasks

Example Task: "Identify development blockers for the Q1 release"

The AI agent:

- Queries Jira for all Q1 release tickets and their status

- Analyzes GitHub for related pull requests and code reviews

- Checks Slack for team discussions about specific issues

- Identifies patterns in blocked tickets and suggests solutions

- Creates a priority-ranked list of actions needed to unblock development
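The "identify patterns and rank" step can start as simply as counting how many blocked release tickets each blocker holds up. A minimal sketch, assuming tickets have already been fetched from Jira into a hypothetical `Ticket` shape with a `blockedBy` list of issue keys; the ranking heuristic (unblock the most tickets first) is our illustration, not a Jira feature.

```typescript
// Sketch: rank blockers by how many blocked tickets depend on them.
// The Ticket shape is hypothetical; real data would come from a Jira MCP server.

interface Ticket {
  key: string;         // e.g. "REL-101"
  status: string;      // e.g. "Blocked", "In Progress"
  blockedBy: string[]; // keys of tickets this one waits on
}

interface BlockerRank { blocker: string; blocks: string[] }

function rankBlockers(tickets: Ticket[]): BlockerRank[] {
  const blocked = new Map<string, string[]>();
  for (const ticket of tickets) {
    if (ticket.status !== 'Blocked') continue;
    for (const blocker of ticket.blockedBy) {
      const list = blocked.get(blocker) ?? [];
      list.push(ticket.key);
      blocked.set(blocker, list);
    }
  }
  // Most-blocking first; ties broken by key so the order is deterministic
  return Array.from(blocked, ([blocker, blocks]) => ({ blocker, blocks }))
    .sort((a, b) => b.blocks.length - a.blocks.length || a.blocker.localeCompare(b.blocker));
}
```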

Technical Challenges and Lessons Learned

Building a comprehensive MCP ecosystem presented several challenges that required innovative solutions.

Challenge 1: Server Discovery and Health Management

With dozens of MCP servers, we needed robust systems for discovering available servers and handling failures gracefully. We solved this with a dynamic server registry that auto-discovers servers and performs health checks, ensuring our AI agents can always find healthy servers for required capabilities.
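A minimal sketch of such a registry, assuming each server has a cheap health probe (the MCP specification defines a `ping` request that could back it) and advertises its capabilities as plain strings. The `ServerRegistry` class, the consecutive-failure threshold, and the capability names are hypothetical.

```typescript
// Sketch: track server health and pick a healthy server for a capability.
// The probe is injected; in practice it might send an MCP `ping` request.

interface RegisteredServer {
  name: string;
  capabilities: string[];
  probe: () => Promise<boolean>; // true if the server answered in time
  consecutiveFailures: number;
}

class ServerRegistry {
  private servers = new Map<string, RegisteredServer>();

  constructor(private maxFailures = 3) {}

  register(name: string, capabilities: string[], probe: () => Promise<boolean>): void {
    this.servers.set(name, { name, capabilities, probe, consecutiveFailures: 0 });
  }

  // Run every probe once; a real service would call this on a timer
  async checkAll(): Promise<void> {
    await Promise.all(Array.from(this.servers.values()).map(async (server) => {
      let healthy = false;
      try {
        healthy = await server.probe();
      } catch {
        healthy = false; // a throwing probe counts as a failed check
      }
      server.consecutiveFailures = healthy ? 0 : server.consecutiveFailures + 1;
    }));
  }

  private isHealthy(server: RegisteredServer): boolean {
    return server.consecutiveFailures < this.maxFailures;
  }

  // First healthy server (in registration order) advertising the capability
  findHealthy(capability: string): string | undefined {
    for (const server of Array.from(this.servers.values())) {
      if (server.capabilities.includes(capability) && this.isHealthy(server)) return server.name;
    }
    return undefined;
  }
}
```

Requiring several consecutive failures before marking a server unhealthy avoids flapping on a single slow response; the threshold is a tuning choice.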

Challenge 2: Security and Access Control

Giving AI agents access to sensitive systems required careful security considerations. We implemented multi-layer security with user permissions, rate limiting, argument sanitization, and comprehensive audit logging. Every server validates access requests and sanitizes inputs to prevent security vulnerabilities.
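These layers can be composed as a wrapper around each tool handler. A minimal sketch, assuming a per-user tool allowlist, a fixed-window rate limit, and an in-memory audit log; the `secureTool` and `sanitize` helpers, the policy fields, and the sanitization rule (reject control characters and overlong strings, trim whitespace) are illustrative choices rather than an MCP feature.

```typescript
// Sketch: wrap a tool handler with permission checks, rate limiting,
// argument sanitization and audit logging. All policy details are illustrative.

type Args = Record<string, unknown>;
type Handler = (args: Args) => Promise<string>;

interface AuditEntry { user: string; tool: string; allowed: boolean; reason?: string; at: number }

interface SecurityPolicy {
  permissions: Map<string, Set<string>>; // user -> tools they may call
  maxCallsPerWindow: number;
  windowMs: number;
  maxStringLength: number;
}

function sanitize(args: Args, maxLen: number): Args {
  const clean: Args = {};
  for (const [key, value] of Object.entries(args)) {
    if (typeof value === 'string') {
      // Reject control characters outright rather than trying to escape them
      if (/[\u0000-\u001f]/.test(value)) throw new Error(`Invalid characters in "${key}"`);
      if (value.length > maxLen) throw new Error(`"${key}" exceeds ${maxLen} characters`);
      clean[key] = value.trim();
    } else {
      clean[key] = value;
    }
  }
  return clean;
}

function secureTool(tool: string, handler: Handler, policy: SecurityPolicy, audit: AuditEntry[]) {
  const windows = new Map<string, { start: number; count: number }>(); // per-user fixed windows
  return async (user: string, args: Args): Promise<string> => {
    const now = Date.now();
    const deny = (reason: string): never => {
      audit.push({ user, tool, allowed: false, reason, at: now });
      throw new Error(reason);
    };
    if (!policy.permissions.get(user)?.has(tool)) deny('permission denied');
    const w = windows.get(user);
    if (!w || now - w.start >= policy.windowMs) windows.set(user, { start: now, count: 1 });
    else if (++w.count > policy.maxCallsPerWindow) deny('rate limit exceeded');
    let clean: Args = {};
    try {
      clean = sanitize(args, policy.maxStringLength);
    } catch (err) {
      deny(err instanceof Error ? err.message : String(err));
    }
    audit.push({ user, tool, allowed: true, at: now });
    return handler(clean);
  };
}
```

Denied calls are logged as well as allowed ones, so the audit trail shows attempted misuse, not just successful access.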

Key Lessons Learned

Start Small and Iterate: We initially attempted to implement MCP across our entire platform simultaneously, which proved overwhelming. Our most successful approach was starting with a single, high-value use case and gradually expanding.

Invest in Schema Design: Context schemas are the foundation of your MCP implementation. Establishing clear governance processes for schema evolution pays enormous dividends later.
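One lightweight governance check is to compare a tool's old and new `inputSchema` before releasing a new server version and flag changes that would break existing callers. A minimal sketch over a simplified JSON Schema subset (top-level `properties` with a `type`, plus `required`); the `breakingChanges` function and its rules (removed properties, changed types, and newly required properties are breaking; new optional properties are not) are our assumption of a sensible policy.

```typescript
// Sketch: detect breaking changes between two versions of a tool's inputSchema.
// Handles only a simplified subset of JSON Schema.

interface SimpleSchema {
  type: 'object';
  properties: Record<string, { type: string }>;
  required?: string[];
}

function breakingChanges(oldSchema: SimpleSchema, newSchema: SimpleSchema): string[] {
  const problems: string[] = [];
  const oldRequired = new Set(oldSchema.required ?? []);
  for (const [name, prop] of Object.entries(oldSchema.properties)) {
    const next = newSchema.properties[name];
    if (!next) problems.push(`removed property "${name}"`);
    else if (next.type !== prop.type) problems.push(`"${name}" changed type ${prop.type} to ${next.type}`);
  }
  for (const name of newSchema.required ?? []) {
    // Callers written against the old schema will not send a newly required field
    if (!oldRequired.has(name)) problems.push(`"${name}" is newly required`);
  }
  return problems;
}
```

Run in CI, a non-empty result can block the release or force a version bump and a migration note.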

Design for Failure: Context retrieval will occasionally fail, and your services must be designed to handle these failures gracefully with fallback strategies and degraded modes.
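A minimal sketch of that pattern: bound each context retrieval with a timeout, retry a limited number of times, then fall back to a degraded value (for example cached or empty context) instead of failing the whole request. The `withTimeout` and `withFallback` helpers and their defaults are hypothetical; note that a timed-out call is abandoned, not cancelled, so the underlying request may still complete in the background.

```typescript
// Sketch: timeout + bounded retry + degraded fallback for a context retrieval call.

function withTimeout<T>(promise: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
    promise.then(
      (value) => { clearTimeout(timer); resolve(value); },
      (error) => { clearTimeout(timer); reject(error); }
    );
  });
}

interface FallbackResult<T> { value: T; degraded: boolean; attempts: number }

async function withFallback<T>(
  fetchContext: () => Promise<T>,
  fallback: T,
  { retries = 2, timeoutMs = 2000 } = {}
): Promise<FallbackResult<T>> {
  for (let attempt = 1; attempt <= retries + 1; attempt++) {
    try {
      return { value: await withTimeout(fetchContext(), timeoutMs), degraded: false, attempts: attempt };
    } catch {
      // Swallow the error and try again; the final failure falls through to the fallback
    }
  }
  // Degraded mode: callers see `degraded: true` and can tell the user the context is incomplete
  return { value: fallback, degraded: true, attempts: retries + 1 };
}
```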

Industry Impact and Future Implications

Our MCP implementation has proven that the future of AI isn't about building more powerful isolated models, but about creating intelligent systems that can seamlessly integrate with existing digital infrastructure.

Competitive Advantages Realized

Reduced Development Time: New AI-powered features that previously took weeks to implement can now be built in days by connecting to existing MCP servers.

Improved System Reliability: The modular architecture means failures are contained. If our email integration fails, our AI agents can still access files, search the web, and manage projects.

Enhanced AI Capabilities: Our AI agents can now handle complex, multi-step workflows that require coordination across multiple systems, unlocking entirely new categories of automation.

Future Developments

Based on our experience, we see several exciting opportunities:

Cross-Organization MCP Networks: Imagine MCP servers that can securely share capabilities across organizations, creating networks of AI-accessible services.

Industry-Specific MCP Libraries: Specialized MCP server collections for healthcare, finance, manufacturing, and other domains.

AI-Optimized APIs: Services designed from the ground up to work optimally with AI agents through MCP, rather than traditional human-facing interfaces.

Conclusion: The Connected AI Future

Building our comprehensive MCP server ecosystem has fundamentally transformed our relationship with AI. We have moved from using AI as an advanced chatbot to deploying AI agents that can actively participate in our business processes, access our systems, and coordinate complex workflows.

The key insight from our journey is that the value of AI isn't just in the sophistication of the language model, but in how effectively that model can interact with the digital world around it. MCP provides the standardized infrastructure to make this interaction seamless, secure, and scalable.

For organizations considering similar implementations, my advice is to start with high-value, well-defined use cases and gradually expand your MCP ecosystem. The modular nature of MCP servers makes this incremental approach very effective – each new server you add multiplies the capabilities of your entire AI system.

The future belongs to AI systems that can seamlessly integrate with our existing digital infrastructure. MCP provides the foundation for building that future, and our experience proves that the technology is ready for production use today.

As we continue to expand our MCP ecosystem, we're not just building better AI tools – we're creating a new paradigm for human-AI collaboration where AI agents can work alongside us as true digital team members, with access to the same tools and information we use in our daily work.

The connected AI future is here, and MCP is the protocol that makes it possible.