The AI Race: What Google Gemini Means For AI’s Future

Google's Gemini is the new sensation in the AI realm. In a recent blog post, Google CEO Sundar Pichai called Gemini their "most capable and general model yet, with state-of-the-art performance across many leading benchmarks." Demis Hassabis, CEO and Co-Founder of Google DeepMind, asserts that Gemini can "generalize and seamlessly understand, operate across, and combine different types of information, including text, code, audio, image, and video."

While these are lofty claims, the demonstration videos suggest that Google is on the path to substantiating them.

Here is our analysis of the recent developments and insights into what they signify for the future of AI.

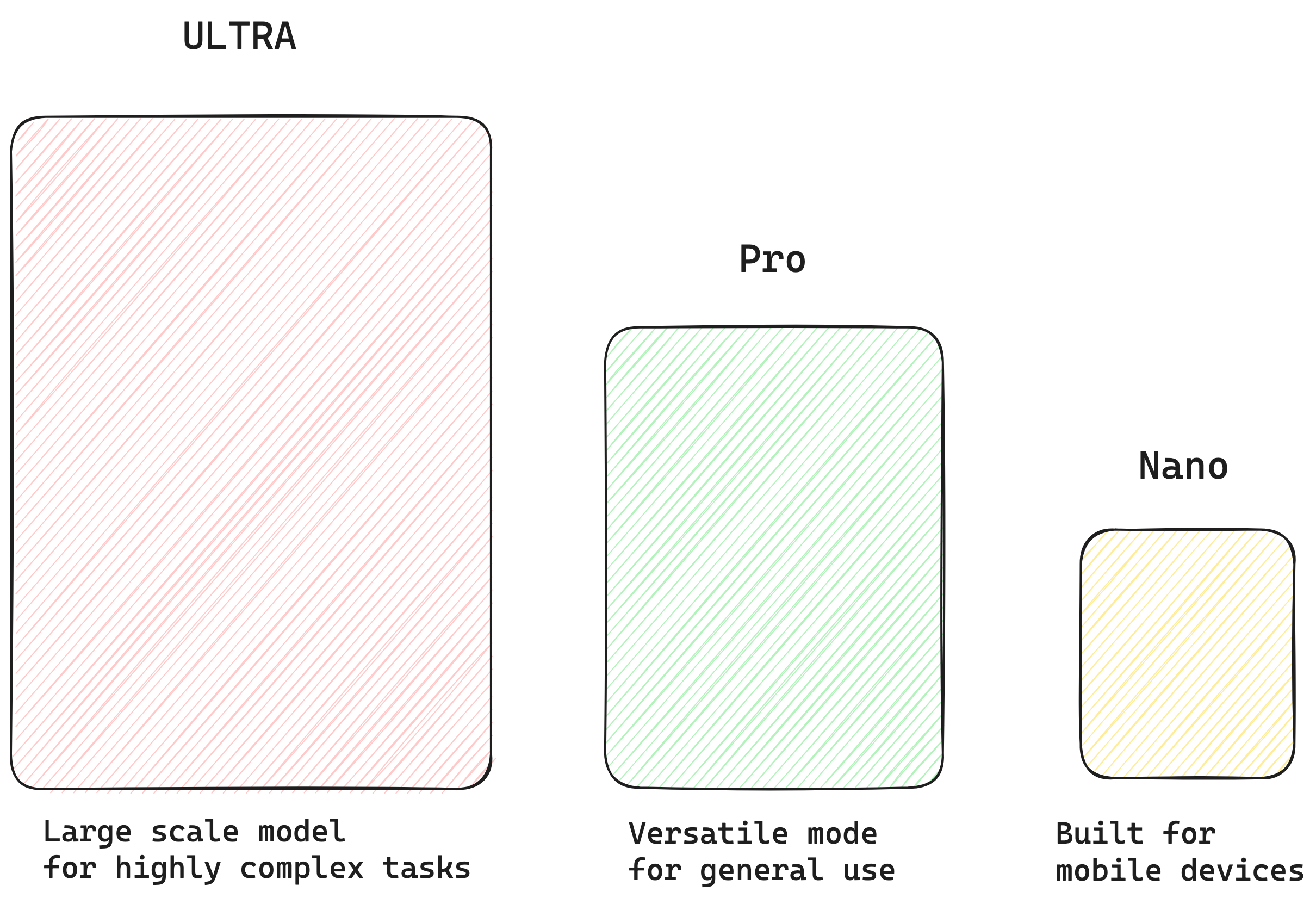

Gemini’s Many Faces — Three Models for Different Tasks

The release includes three main versions of Gemini: Ultra, Pro, and Nano. Each has its own use case and capabilities. Ultra is a large-scale model for highly complex tasks, Pro is for general usage, and Nano is built to run on mobile devices such as the Pixel 8 Pro.

“Looks like Google is bringing in its concept of one account for every platform and device to the world of AI. This paints a future where AI usage is diversified and not limited to working professionals or research laboratories. Organizations and companies will find it easier to bring AI capabilities to their apps without performance loss as models like Gemini Nano are built for specific use cases,” according to GeekyAnts CTO Saurabh Sahu.

Gemini’s Usability in the Real-world — Application Set is Huge

Gemini has showcased remarkable capabilities across various use cases in five key areas:

- Multimodal dialogue

- Multilingualism

- Game creation

- Visual puzzles

- Making connections

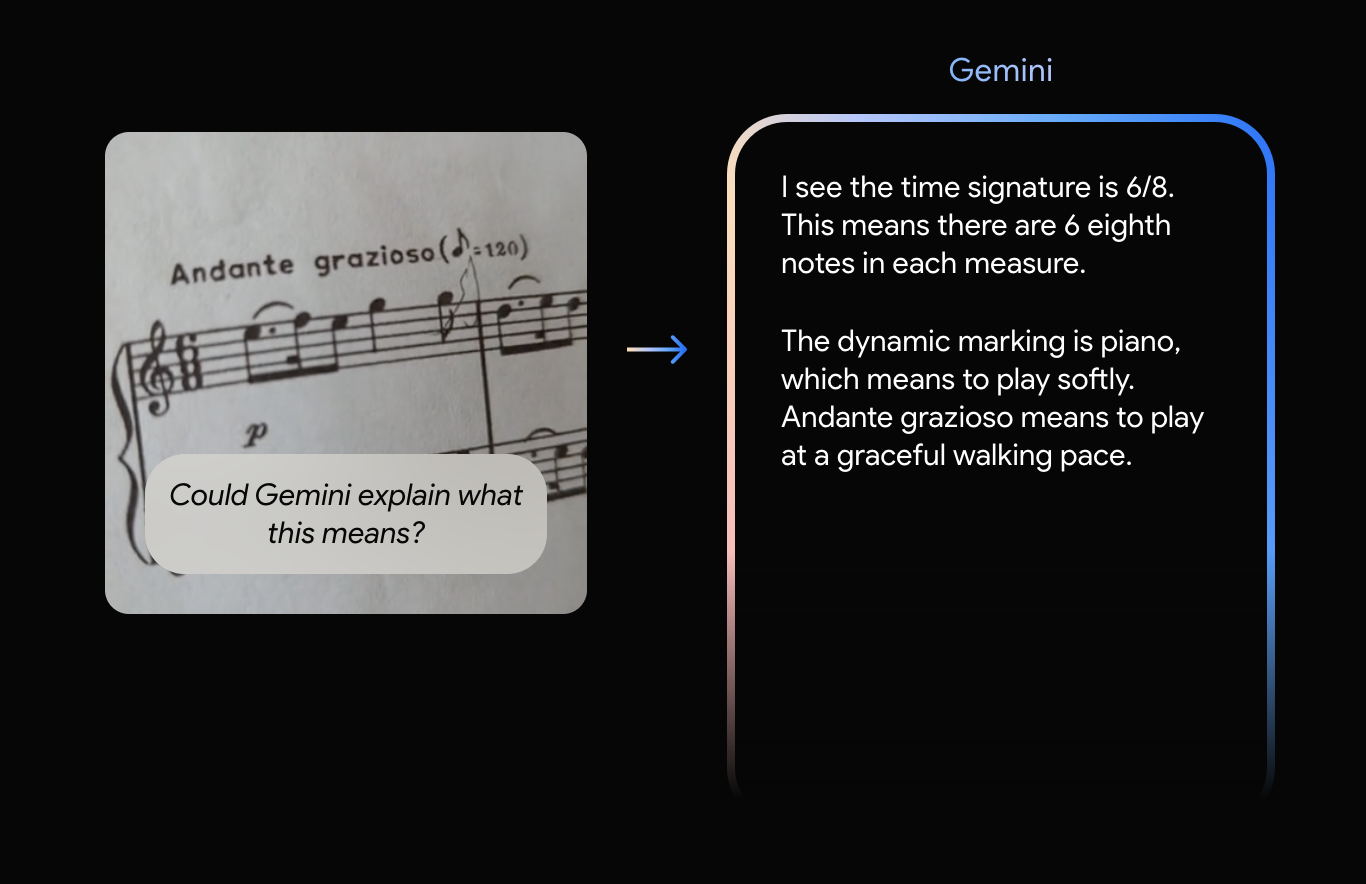

For instance, Gemini can interpret the time signatures in sheet music and explain what tempo markings mean. Imagine the impact on learning and knowledge sharing: complex equations could become easier to explain, reducing communication gaps. Combined with multilingual text translation, this could set a new standard for global collaboration.

Photo Courtesy: deepmind.google/technologies/gemini

Google's official announcements highlight five use cases for this technology:

- Excelling at competitive programming

- Unlocking insights in the scientific literature

- Understanding raw audio signal end-to-end

- Explaining reasoning in math and physics

- Reasoning about user intent to generate a bespoke experience

The list will likely grow with upcoming updates.

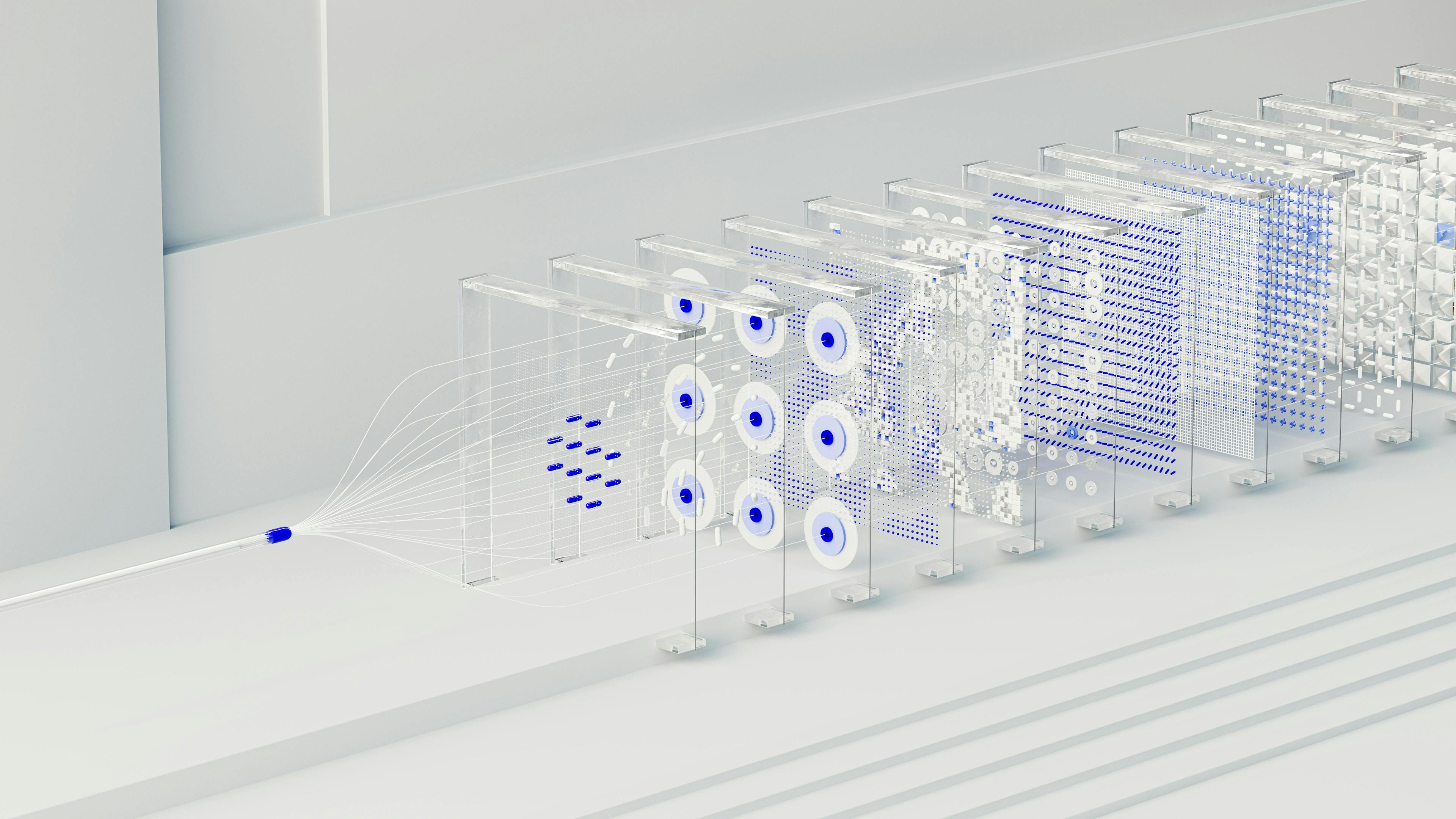

From Concept to Code, Gemini is Methodical

Gemini begins by evaluating the overall requirements. It considers the broader aspects first, then moves into detailed reasoning, and finally goes from data to code. Priyamvada, a Software Engineer at GeekyAnts who leads numerous projects on AI models and generative AI, shares her first impressions:

The inner workings of Gemini are extremely methodical. It begins by questioning the necessity of a user interface and, if required, determines whether a text-based prompt would be the most effective approach. Gemini evaluates the complexity of the request, considering whether it calls for a structured presentation of substantial information. It then assesses its understanding and, if necessary, asks additional questions for clarification.

This process helps Gemini ascertain if it has gathered sufficient data to move forward while noting any remaining uncertainties. Subsequently, Gemini drafts a Product Requirement Document (PRD) outlining the envisioned functionalities. The PRD serves as a guide to craft an optimal user experience, considering users may want to browse options and investigate specifics. This leads to the creation of a list and a detail-oriented layout. Gemini then develops the interface using Flutter, incorporating widgets and functionalities.

Ultimately, it gathers the necessary data to bring this experience to life, allowing users to interact and seek further information. The result is a visually rich interface with dropdown menus and step-by-step guides.

Source: Google DeepMind
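The flow described above can be sketched as a small planning function. This is only an illustration of the sequence of decisions as summarized in this article, not Gemini's actual implementation. The `Request` class, its fields, and the step names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """A user request plus what is known about it (hypothetical structure)."""
    prompt: str
    needs_structured_view: bool  # is there substantial information to present?
    open_questions: list[str] = field(default_factory=list)


def plan_response(request: Request) -> list[str]:
    """Return the ordered steps for a request, following the prose above."""
    steps = ["evaluate overall requirements"]

    # 1. Is a user interface needed, or is a text reply the better fit?
    if not request.needs_structured_view:
        steps.append("answer with plain text")
        return steps

    # 2. Ask clarifying questions while anything is still uncertain.
    for question in request.open_questions:
        steps.append(f"ask user: {question}")

    # 3. Draft the PRD, then derive the layout and the interface from it.
    steps += [
        "draft PRD",
        "choose list + detail layout",
        "generate Flutter interface",
        "fetch data to populate the interface",
    ]
    return steps
```

Calling `plan_response(Request("Plan a birthday party", True, ["indoor or outdoor?"]))` would walk through the clarification, PRD, layout, and interface steps in that order, while a request that does not need a structured view stops after the plain-text answer.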

Gemini processes raw audio signals end-to-end, leveraging its native multimodal capabilities. It can distinguish between different pronunciations of the same word. Because visual, textual, and auditory inputs are natively integrated, Gemini can comprehend and combine these modalities. This could improve how commands are translated into different formats on both phones and larger screens.

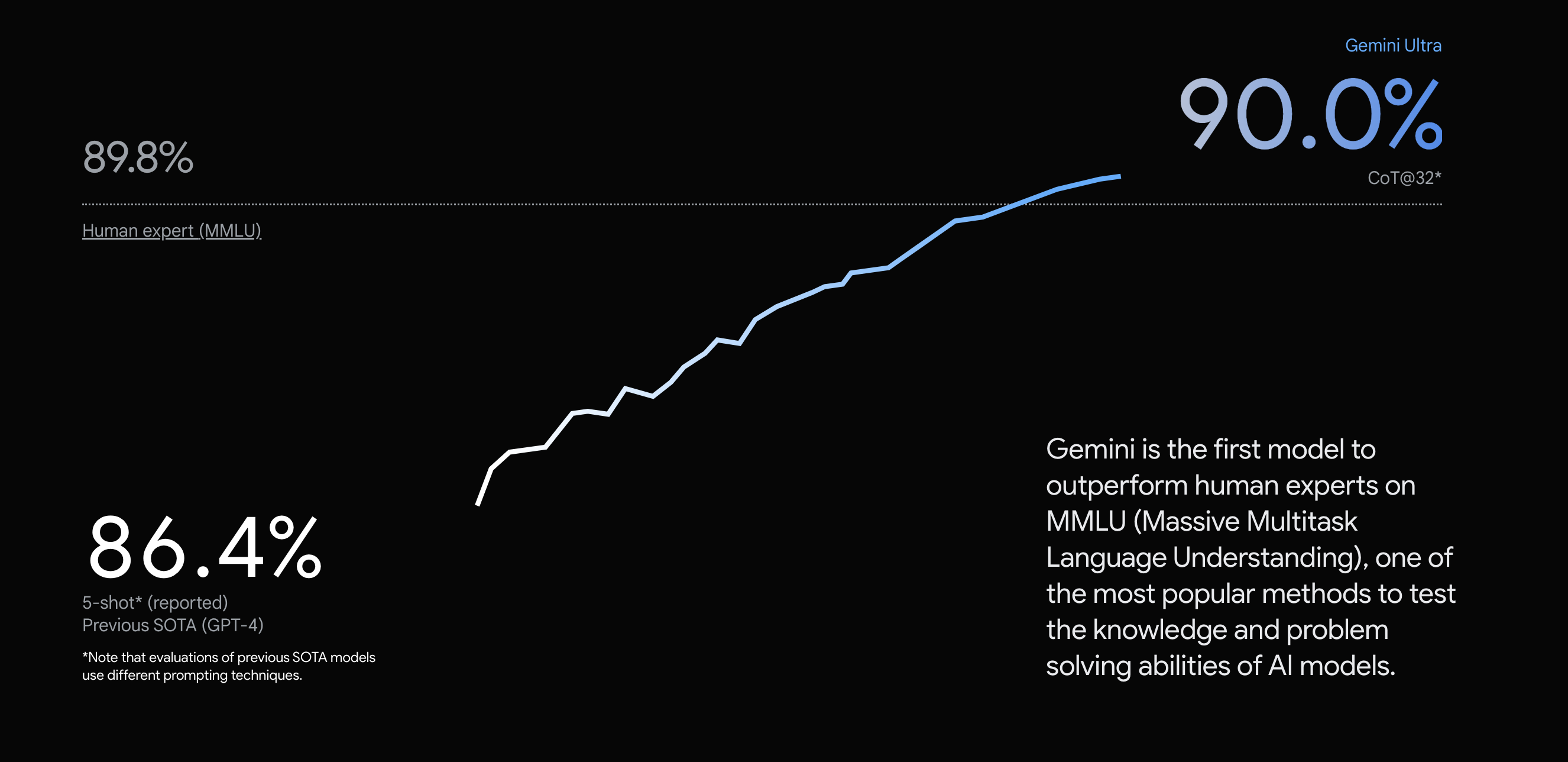

Benchmarks with ChatGPT and SOTA Metrics — It's Promising

Gemini's benchmark results are promising: it leads on several benchmarks and surpasses state-of-the-art (SOTA) performance in multimodal tasks.

Source: deepmind.google/technologies/gemini/#capabilities

However, Gemini’s real test begins on December 13, when Google opens the gates to Gemini Pro via the Gemini API. It can be accessed through Google AI Studio or Google Cloud Vertex AI. Developers working with Android will also be able to use Gemini Nano. Bard and the Search Generative Experience (SGE) will also start using Gemini. Reports suggest that it is already reducing SGE response times.
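For developers who want a feel for what calling Gemini Pro over the API might look like, here is a minimal sketch that uses only the Python standard library. The endpoint path, the `gemini-pro` model identifier, and the request and response shapes are assumptions based on the public REST interface and may have changed, so check them against the current documentation. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint root and model name; verify both against the current docs.
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-pro"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{MODEL}:generateContent?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the prompt and return the text of the first candidate reply."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as response:
        reply = json.load(response)
    # Assumed response shape: candidates -> content -> parts -> text.
    return reply["candidates"][0]["content"]["parts"][0]["text"]
```

With a key created in Google AI Studio, `ask("Explain what a 6/8 time signature means.", key)` would send a single text prompt. Building the request separately from sending it makes the payload easy to inspect without network access.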

At the moment, Gemini Pro is available inside Bard. Pixel 8 Pro users can also access a version of Gemini through AI-suggested text replies in WhatsApp. Google plans to launch it on Gboard in the future. Organizations looking to build chatbots for their audience or employees can use this feature to provide an interactive experience that reduces manual input and prioritizes communication.

“With real-time user feedback, we can judge Gemini’s capabilities in a wider set of applications. We may get breaking changes, or the results may be out of this world; we cannot predict it yet. However, one thing we can say for sure is that Gemini will change how models interact with different devices.

For our clients, we are excited to implement this in projects as soon as the updates stabilize. Since the Nano engine is natively built for mobile, it opens many capabilities. We can also use the technical reports to decide what model to implement for different use cases,” adds Priyamvada.

Parting Thoughts — The Future of the AI Race

The benchmark numbers look promising, but the lead is narrow. Moreover, ChatGPT has stood the test of time, and many organizations now have workflows that depend on ChatGPT with GPT-4. Gemini's advantage would need to be substantial to justify a switch.

Finally, safety will be the elephant in the room whenever a new AI model is introduced. According to Google, Gemini has been built “responsibly from the start, incorporating safeguards and working together with partners to make it safer and more inclusive.” It is a welcome sign.

In conclusion, Gemini has set the stage for what could become the equivalent of the 20th-century Space Race. At the moment, ChatGPT dominates in terms of sheer user base, with a reported 180.5 million users fueling its training. Gemini will need a Usain Bolt-style sprint to overtake it on numbers.

Whatever happens, the world will gain a lot from the AI race, provided models are built and used responsibly. When building apps, we may finally have a world where language, knowledge, and communication barriers are no longer looming problems. The possibilities look promising.
