A Comprehensive Guide to Efficient Logging with EFK and TD Agent

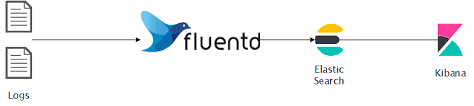

In the dynamic landscape of modern IT infrastructures, effective log management and analysis play a crucial role in maintaining systems' health, security, and performance. The EFK stack, consisting of Elasticsearch, Fluentd, and Kibana, has emerged as a powerful solution for log aggregation, storage, and visualization. When paired with the TD Agent, it becomes an even more potent tool for efficiently handling large volumes of log data.

What is EFK? [OS independent]

The EFK stack is a combination of three open-source tools:

- Elasticsearch: A distributed, RESTful search and analytics engine that serves as the storage backend for log data.

- Fluentd: An open-source data collector that unifies the data collection and consumption for better use and understanding. Fluentd collects, processes, and forwards log data to Elasticsearch.

- Kibana: A powerful visualization tool that works in conjunction with Elasticsearch to help users explore, analyze, and visualize data stored in Elasticsearch indices.

Together, these components provide a comprehensive solution for log management, offering scalability, flexibility, and ease of use.

What is td-agent? [Ubuntu 22.04]

td-agent, short for Treasure Data Agent, is the stable, packaged distribution of Fluentd maintained by Treasure Data. Like Fluentd itself, it is an open-source data collector for collecting, processing, and forwarding log data, designed to unify data collection and consumption for real-time analytics. Here are some key features and aspects of td-agent:

- Log Collection: td-agent is primarily used for collecting log data from various sources, such as application logs, system logs, and more. It supports a wide range of input sources.

- Data Processing: It allows filtering, parsing, and transforming log data. This ensures that the collected data is formatted and structured according to the requirements before being forwarded.

- Log Forwarding: The td-agent can forward log data to various output destinations, making it suitable for integrating with different storage systems or log analysis tools. Common output destinations include Elasticsearch, Amazon S3, MongoDB, and others.

- Fluentd Integration: td-agent uses the Fluentd logging daemon as its core. Fluentd provides a flexible and extensible architecture for log data handling. It supports a wide range of plugins, making it adaptable to various environments.

- Configuration: The configuration of td-agent is typically done through a configuration file (commonly named td-agent.conf). This file defines input sources, processing filters, and output destinations.

- Ease of Use: td-agent is designed to be easy to install and configure. It is suitable for both small-scale deployments and large, distributed systems.

- Community and Support: As an open-source project, td-agent benefits from an active community and ongoing development. It's well-documented, and users can find support through forums, documentation, and community channels.

When using td-agent, it is often part of a larger logging or monitoring solution. For example, in combination with Elasticsearch and Kibana, td-agent helps create an EFK (Elasticsearch, Fluentd, Kibana) stack for log management and analysis.

What problems were we facing before EFK?

- Manually logging in to the server to check application logs, container logs, nginx logs, etc.

- No centralized monitoring Dashboard.

- No search engine.

- No visualization tool.

- Security issues.

- Most team members have access to the server.

Prerequisites Installation Guide

Docker and Docker Compose

Docker is a containerization platform that allows you to package and distribute applications along with their dependencies. Docker Compose is a tool for defining and running multi-container Docker applications. Feel free to explore the docs.
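Before going further, it helps to confirm that both tools are actually on the PATH. The sketch below is one way to do that. The function name missing_tools is hypothetical, and the check only confirms that the commands exist, not that the Docker daemon is running.

```shell
#!/bin/sh
# Print the name of every command in the argument list that is not on PATH.
# missing_tools is a hypothetical helper name used only in this guide.
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# Check the two prerequisites of this guide.
# Assumption: Compose is installed as the standalone docker-compose binary;
# with the Compose v2 plugin, check "docker compose version" instead.
missing=$(missing_tools docker docker-compose)
if [ -n "$missing" ]; then
  # $missing is left unquoted on purpose so the names print on one line.
  echo "Missing prerequisites:" $missing
else
  echo "Docker and Docker Compose are installed"
fi
```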

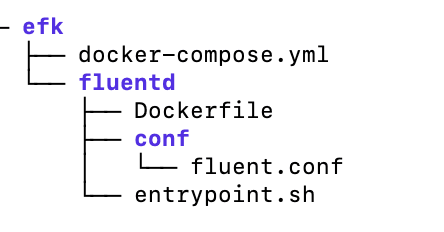

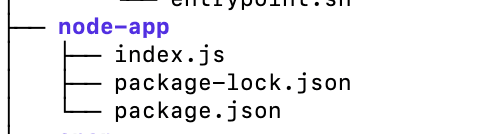

Setting Up EFK Stack on Ubuntu 22.04

Setting up the Elasticsearch, Fluentd, Kibana (EFK) stack using a Docker Compose file is a convenient way to deploy and manage the stack as containers.

Project Structure:

Step 1: Create Directory and Change Directory

Step 2: Create docker-compose.yml

Step 3: Create Directory and Change Directory

Step 4: Create Dockerfile

Step 5: Create entrypoint.sh

Step 6: Create Directory and Change Directory

Step 7: Create fluent.conf

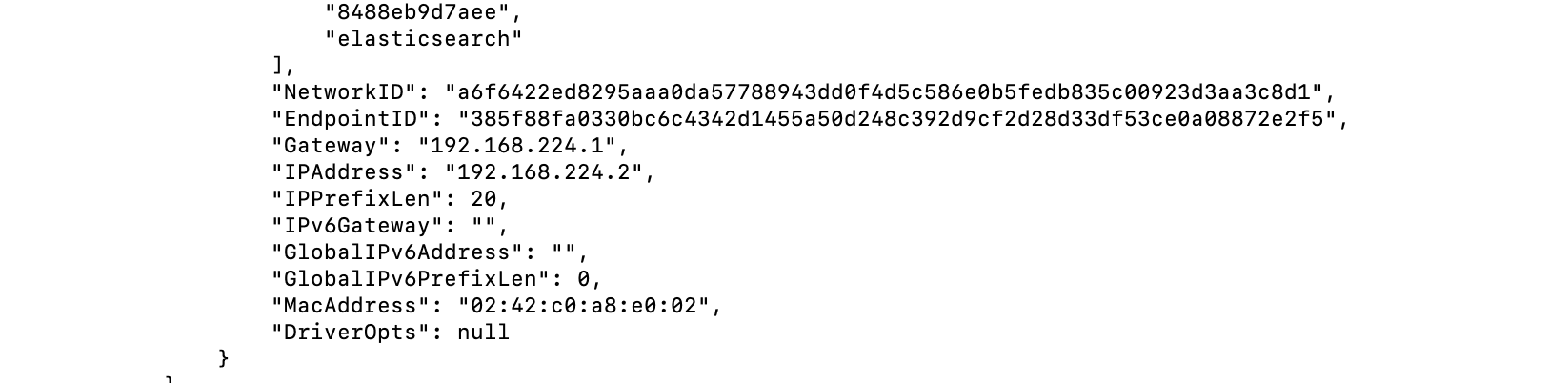

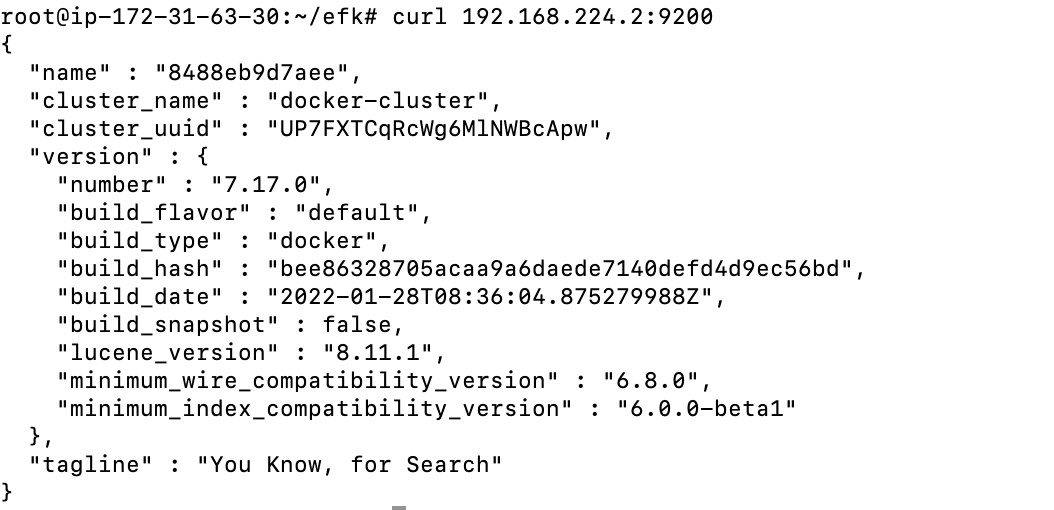
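The seven steps can be sketched as a single script. The file contents below follow the Docker Compose example in the Fluentd documentation rather than the exact files used for this article, so treat every specific as an assumption: the directory layout, the image tags, the plugin version, and the published ports. Step 5's entrypoint.sh is left out because its contents are not shown; the sketch relies on the base image's default entrypoint instead.

```shell
#!/bin/sh
set -eu

# Steps 1, 3 and 6: create the project tree under the current directory.
# Assumed layout: efk/, efk/fluentd/ and efk/fluentd/conf/.
mkdir -p efk/fluentd/conf

# Step 2: docker-compose.yml (image tags are assumptions; pin your own).
cat > efk/docker-compose.yml <<'EOF'
version: "3"
services:
  fluentd:
    build: ./fluentd
    volumes:
      - ./fluentd/conf:/fluentd/etc
    depends_on:
      - elasticsearch
    ports:
      - "24224:24224"
      - "24224:24224/udp"
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.1
    environment:
      - discovery.type=single-node
    ports:
      - "9200:9200"
  kibana:
    image: docker.elastic.co/kibana/kibana:7.13.1
    depends_on:
      - elasticsearch
    ports:
      - "5601:5601"
EOF

# Step 4: Dockerfile that adds the Elasticsearch output plugin to Fluentd.
cat > efk/fluentd/Dockerfile <<'EOF'
FROM fluent/fluentd:v1.12.0-debian-1.0
USER root
RUN gem install fluent-plugin-elasticsearch --no-document --version 5.0.3
USER fluent
EOF

# Step 7: fluent.conf that accepts forwarded events and writes them to
# Elasticsearch (fluentd-* indices) and to the container's stdout.
cat > efk/fluentd/conf/fluent.conf <<'EOF'
<source>
  @type forward
  port 24224
  bind 0.0.0.0
</source>

<match *.**>
  @type copy
  <store>
    @type elasticsearch
    host elasticsearch
    port 9200
    logstash_format true
    logstash_prefix fluentd
    logstash_dateformat %Y%m%d
    include_tag_key true
    tag_key @log_name
    flush_interval 1s
  </store>
  <store>
    @type stdout
  </store>
</match>
EOF
```

With logstash_prefix set to fluentd, the indices match the fluentd-* pattern created in Kibana later in this guide, and tag_key stores each event's Fluentd tag in a field named @log_name.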

Change directory to efk and start the containers with 'docker-compose up -d'.

Check the logs of the fluentd and kibana services with 'docker-compose logs fluentd kibana':

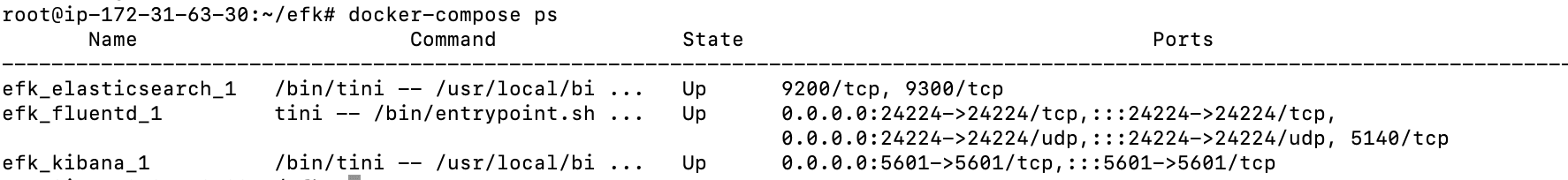

Check the running containers with 'docker ps'.
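Container status alone does not show whether the services are answering yet. As a rough sketch, the helper below polls an HTTP endpoint until it responds. The name wait_for_url is hypothetical, curl must be installed, and port 9200 for Elasticsearch is an assumption about the compose file (5601 is the Kibana port used in the dashboard steps).

```shell
#!/bin/sh
# Poll a URL until it answers or the attempts run out.
# wait_for_url is a hypothetical helper, not part of Docker or the EFK stack.
wait_for_url() {
  url=$1
  attempts=${2:-30}   # default: 30 attempts, 2 seconds apart
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -f: fail on HTTP errors, -s: quiet, -o /dev/null: discard the body
    if curl -fs -o /dev/null "$url"; then
      echo "up: $url"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "timeout: $url" >&2
  return 1
}
```

On the Docker host, this could be called as 'wait_for_url http://localhost:9200' followed by 'wait_for_url http://localhost:5601' before opening Kibana.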

On the Dashboard

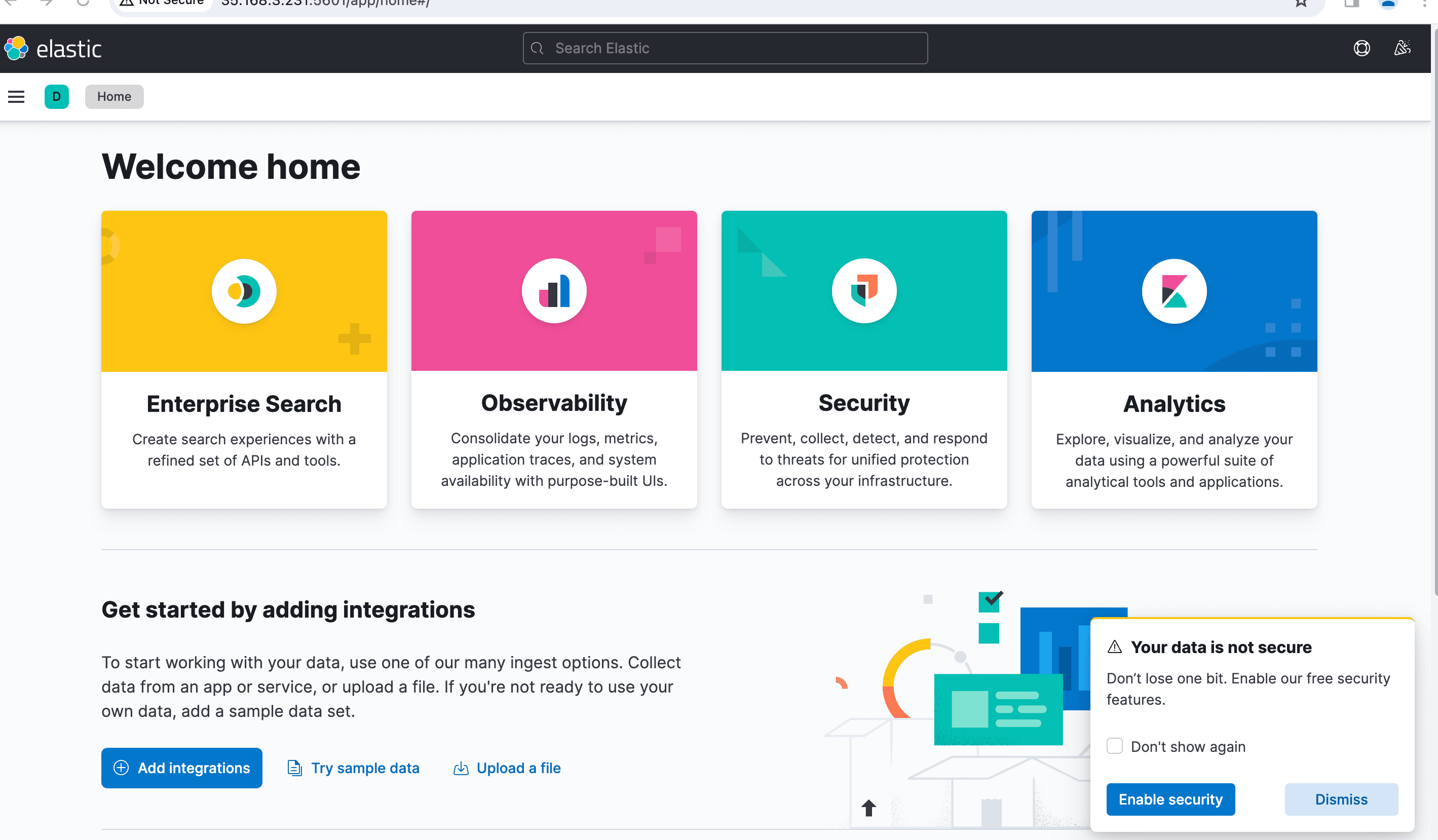

1. Open http://public-ip:5601 in your browser.

2. Next, click the Explore on My Own button on the welcome page below.

3. Click the Stack Management option to set up the Kibana index pattern in the Management section.

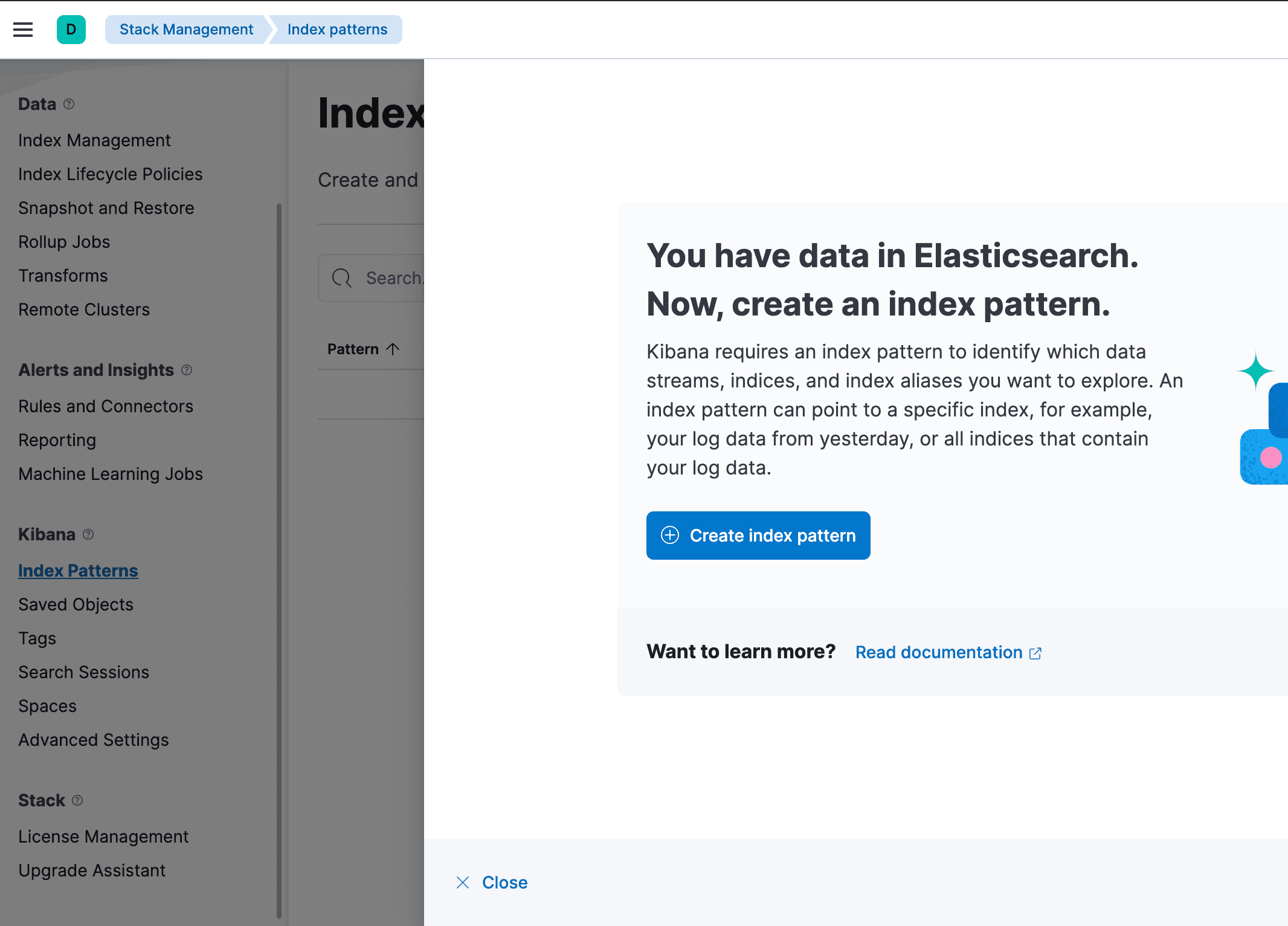

4. On the Kibana left menu section, click the menu Index Patterns and click the Create Index Pattern button to create a new index pattern.

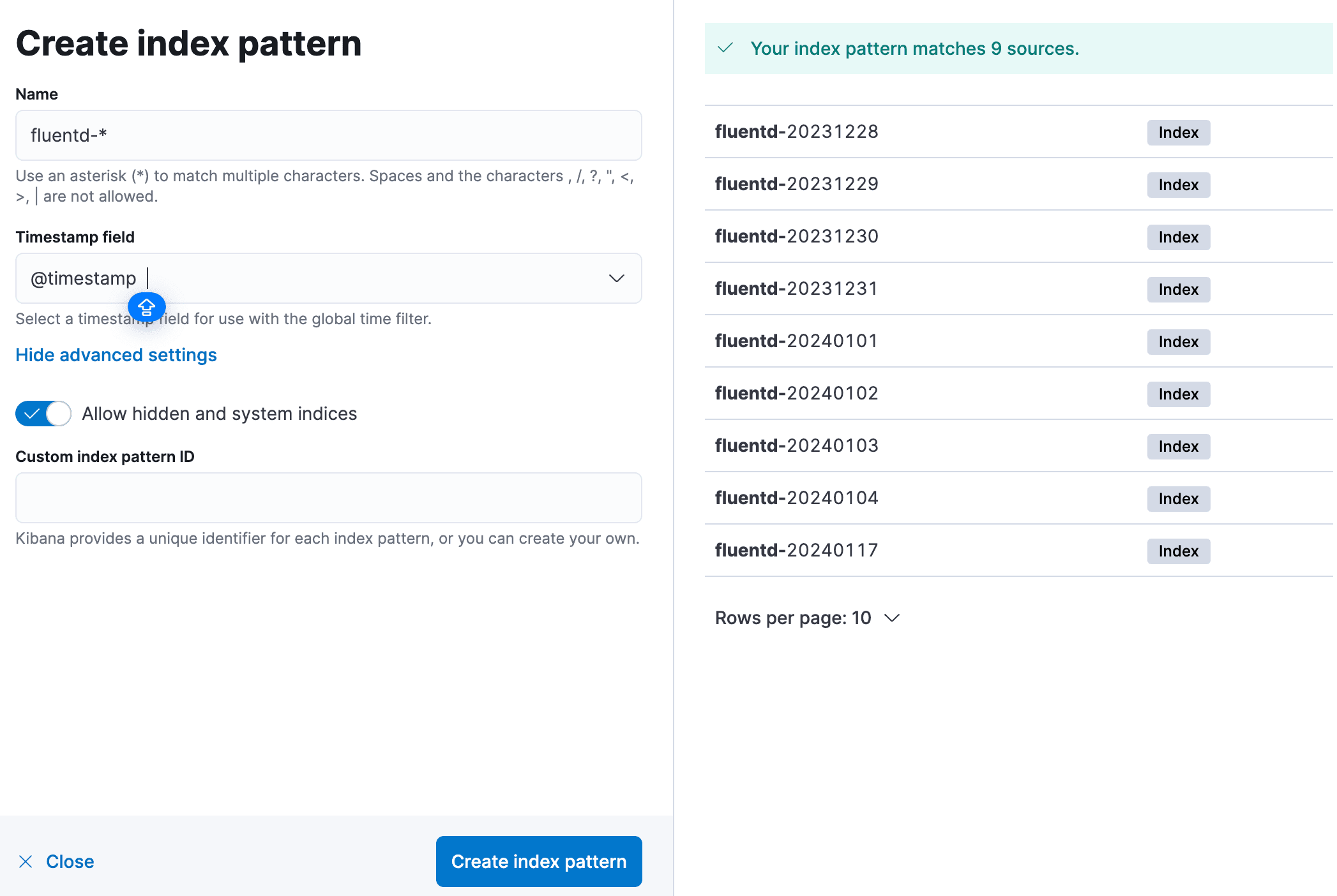

5. Now, input the index pattern Name as fluentd-*, set the Timestamp field to @timestamp, and click the Create index pattern button to confirm the index pattern settings.

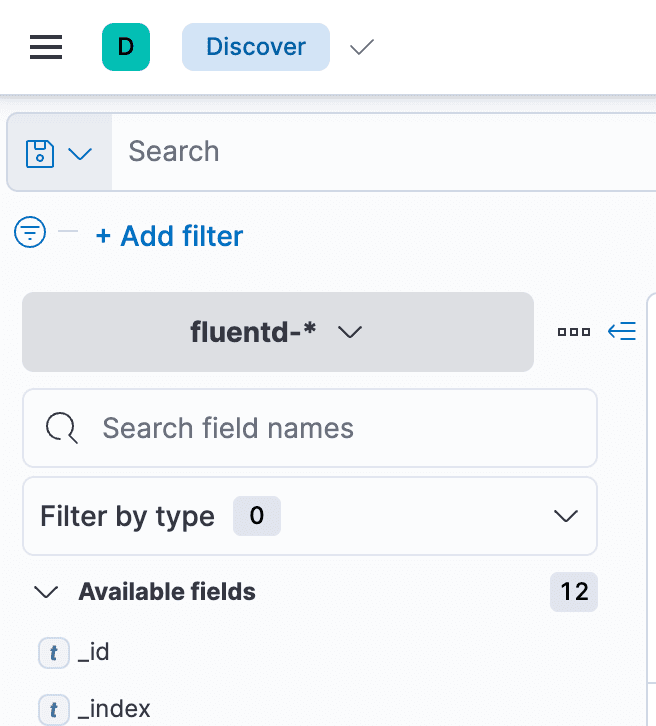

6. Lastly, click the top-left menu (ellipsis), then click the Discover menu to view the logs.

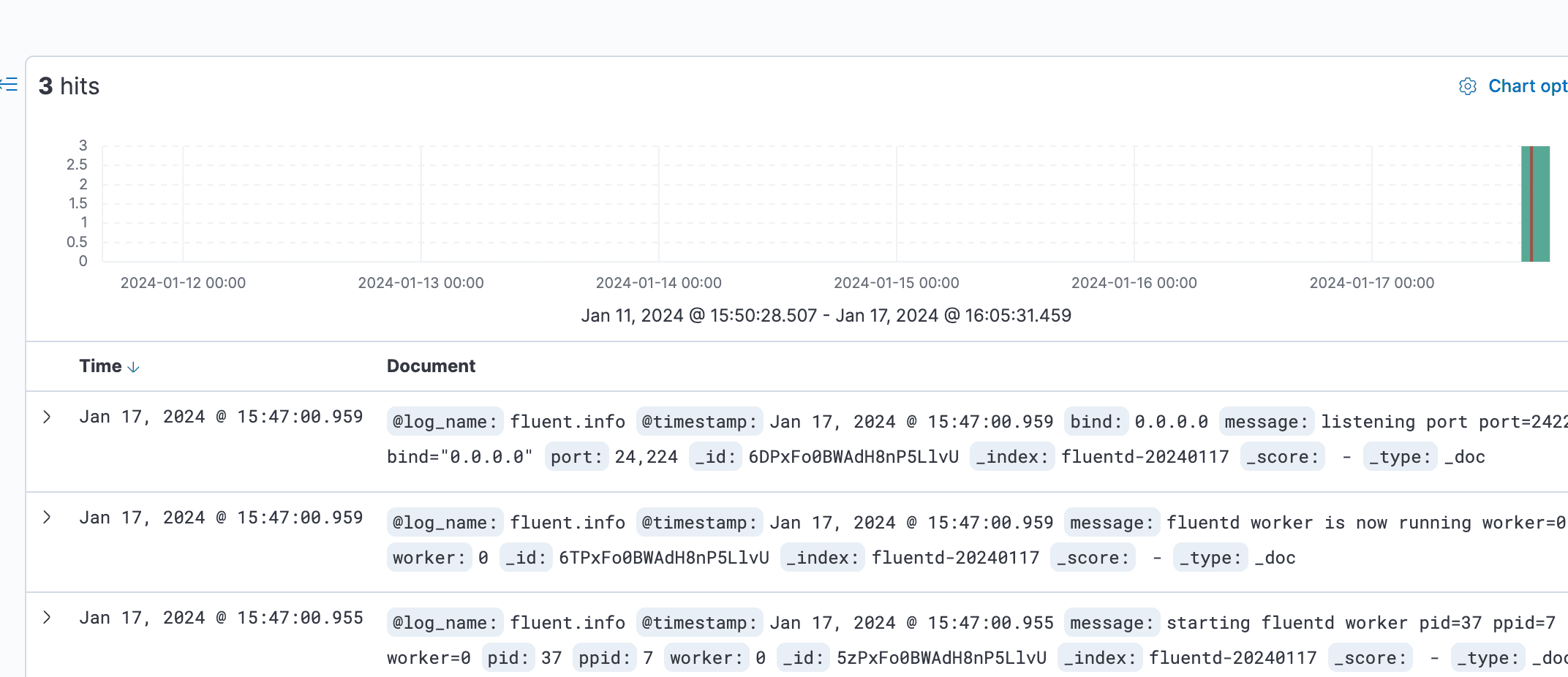

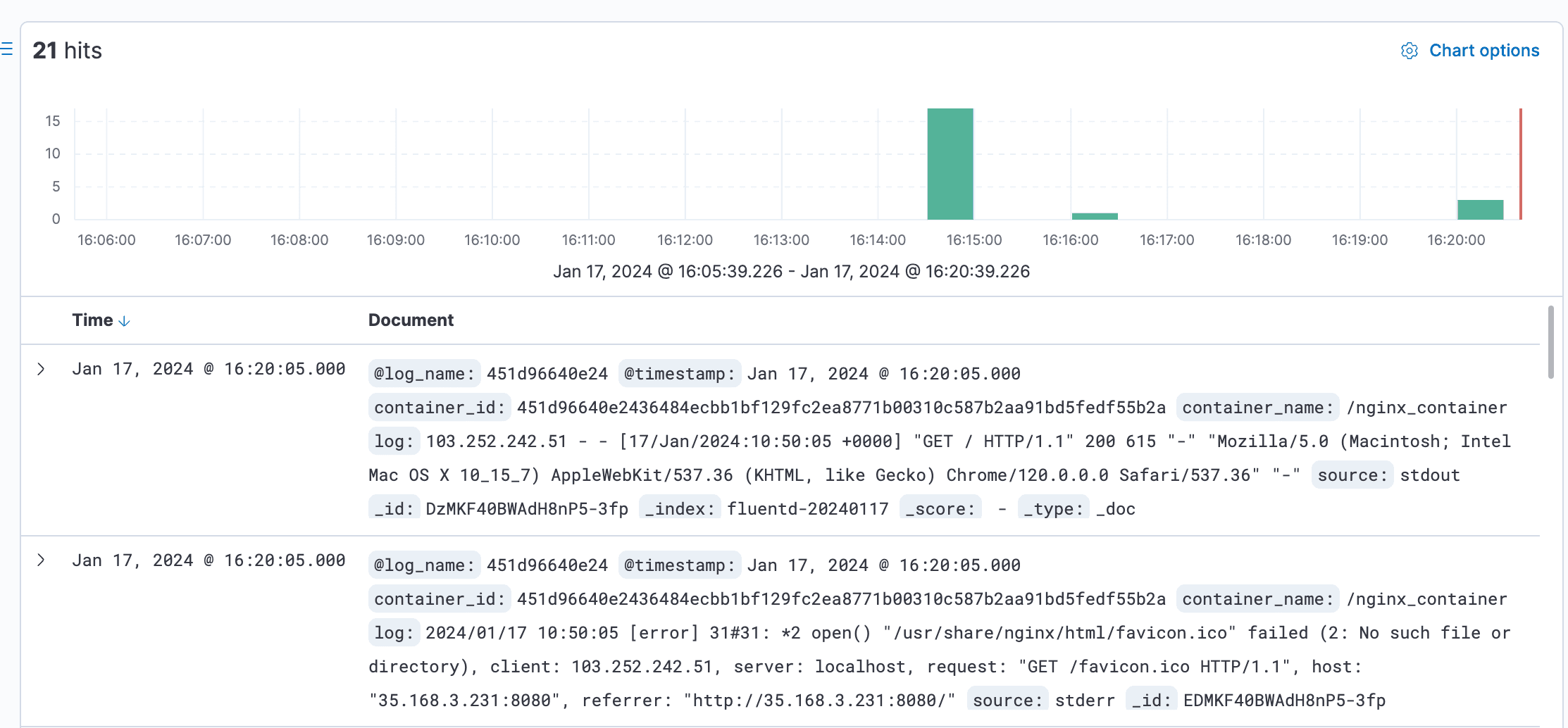

7. The screenshot shows the Kibana log monitoring and analysis dashboard. All listed logs are read from Elasticsearch, where they were shipped by Fluentd.

Run a Docker container by utilizing the Fluentd log driver

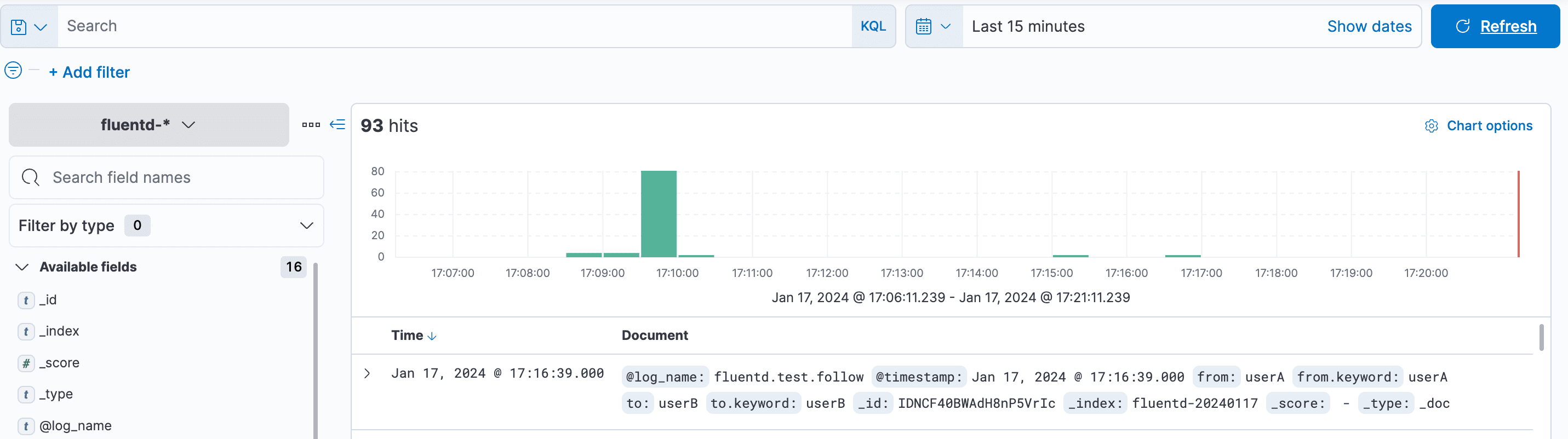
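For reference, a container can be pointed at Fluentd with Docker's fluentd logging driver. The sketch below wraps the command in a small function. The function name run_with_fluentd_logs and the default tag docker.demo are hypothetical, and the address localhost:24224 assumes Fluentd's forward port is published on the Docker host.

```shell
#!/bin/sh
# Start a container whose stdout/stderr are sent to Fluentd.
# Usage: run_with_fluentd_logs <image> [tag]
# Assumptions: Fluentd listens on localhost:24224; "docker.demo" is an
# arbitrary example tag.
run_with_fluentd_logs() {
  image=$1
  tag=${2:-docker.demo}
  docker run -d \
    --log-driver=fluentd \
    --log-opt fluentd-address=localhost:24224 \
    --log-opt tag="$tag" \
    "$image"
}
```

For example, 'run_with_fluentd_logs httpd httpd.access' starts an Apache container whose access log lines should then appear in Kibana under the tag httpd.access.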

Lastly, switch back to the Kibana dashboard, and click the Discover menu on the left side.

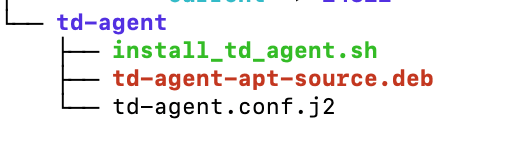

Setting Up td-agent on Ubuntu 22.04

Installing td-agent (Treasure Data Agent) with a script makes the setup repeatable and easy to automate. td-agent is commonly used for log forwarding and aggregation. This section walks through a script-based setup.

td-agent/install_td_agent.sh

td-agent/td-agent.conf.j2
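The contents of these two files are not reproduced here. As an illustration of what the template step might amount to, the sketch below renders a td-agent.conf that tails one log file and forwards it to the Fluentd container from the EFK section. Everything specific in it is an assumption: the function name render_td_agent_conf, the tailed path /var/log/myapp/app.log, the tag myapp.log, and the aggregator address passed as arguments.

```shell
#!/bin/sh
# Render a minimal td-agent.conf to stdout.
# Usage: render_td_agent_conf <aggregator-host> [port]
# The log path and tag below are hypothetical placeholders.
render_td_agent_conf() {
  host=$1
  port=${2:-24224}   # default Fluentd forward port
  cat <<EOF
<source>
  @type tail
  path /var/log/myapp/app.log
  pos_file /var/log/td-agent/myapp.pos
  tag myapp.log
  <parse>
    @type none
  </parse>
</source>

<match **>
  @type forward
  <server>
    host $host
    port $port
  </server>
</match>
EOF
}
```

On the host, the rendered output would typically be written to /etc/td-agent/td-agent.conf, after which the service is restarted with 'sudo systemctl restart td-agent'. The td-agent user also needs read access to the tailed file.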

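Remember to execute the script with the appropriate permissions: it must be executable, and it typically needs root privileges because it installs a system package.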

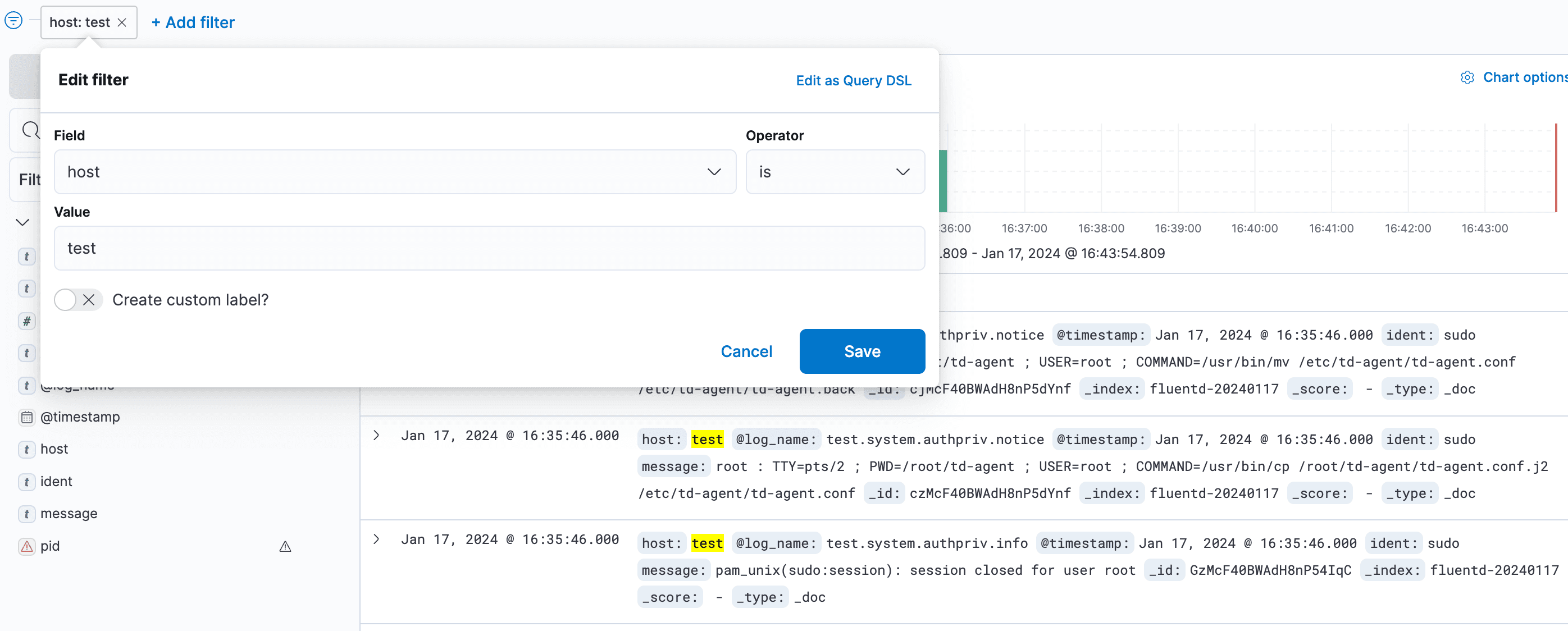

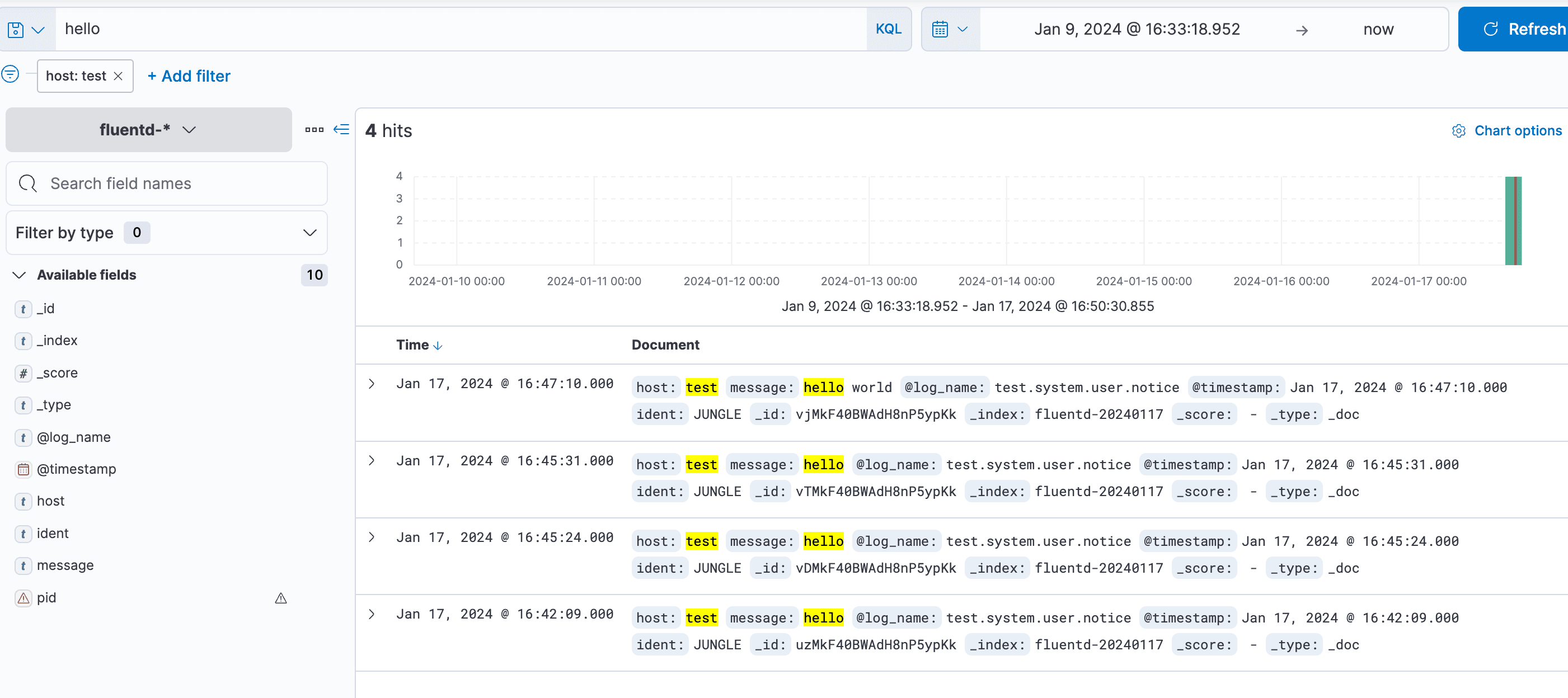

Check the logs using a filter on the Kibana dashboard

Testcase 1: Creating Log to Check Logging

Testcase 2: Application log with @fluent-org/logger

tag- log_name: fluentd.test.follow

Prerequisites: Basic knowledge of Node.js and npm, and a package.json that lists the application's dependencies.

Use npm to install the dependencies locally ('npm install').

index.js:
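The original listings are not included, so the following is a sketch modeled on the Express example from the @fluent-org/logger documentation. It writes both package.json and index.js into a demo directory. The directory name fluentd-node-demo, the dependency version ranges, and the Fluentd address localhost:24224 are assumptions. The tag prefix fluentd.test combined with the label follow produces the fluentd.test.follow tag named in this test case.

```shell
#!/bin/sh
set -eu
mkdir -p fluentd-node-demo

# Dependencies for the demo app (version ranges are assumptions).
cat > fluentd-node-demo/package.json <<'EOF'
{
  "name": "fluentd-node-demo",
  "version": "0.0.1",
  "private": true,
  "dependencies": {
    "express": "^4.18.0",
    "@fluent-org/logger": "^1.0.2"
  }
}
EOF

# Express app that emits one Fluentd event per request to "/".
cat > fluentd-node-demo/index.js <<'EOF'
const express = require('express');
const { FluentClient } = require('@fluent-org/logger');

const app = express();

// Events are tagged "<prefix>.<label>", here fluentd.test.follow.
const logger = new FluentClient('fluentd.test', {
  socket: { host: 'localhost', port: 24224, timeout: 3000 }
});

app.get('/', (req, res) => {
  // emit() returns a promise; log failures instead of crashing the app.
  logger.emit('follow', { from: 'userA', to: 'userB' }).catch(console.error);
  res.send('Hello World!');
});

const port = process.env.PORT || 3000;
app.listen(port, () => console.log('Listening on ' + port));
EOF
```

After running the script, 'npm install' and 'node index.js' inside fluentd-node-demo start the app on port 3000.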

Run the app and go to http://public-ip:3000/ in your browser to send the logs to Fluentd:

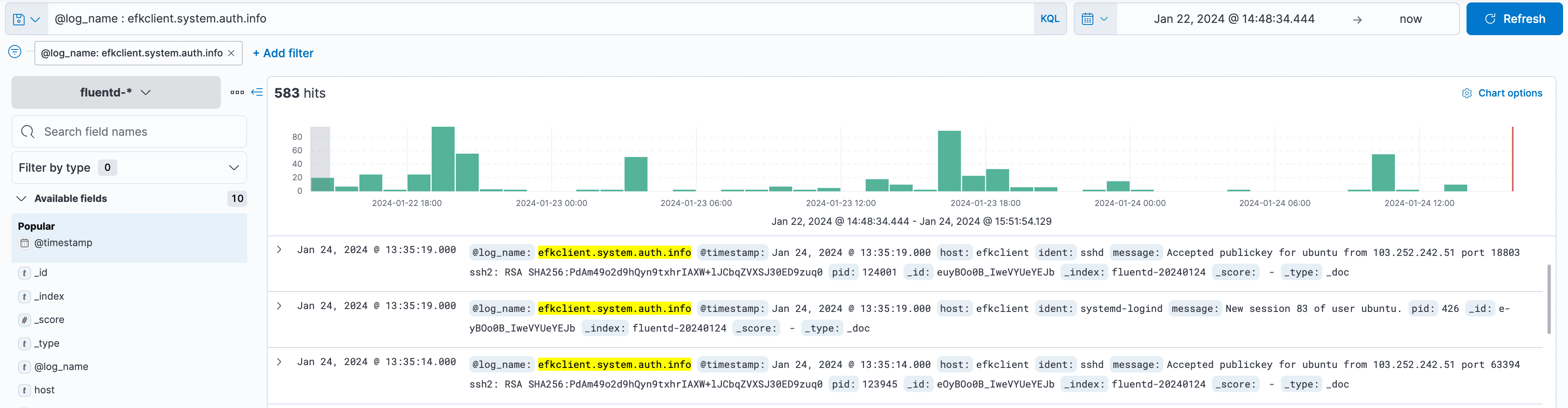

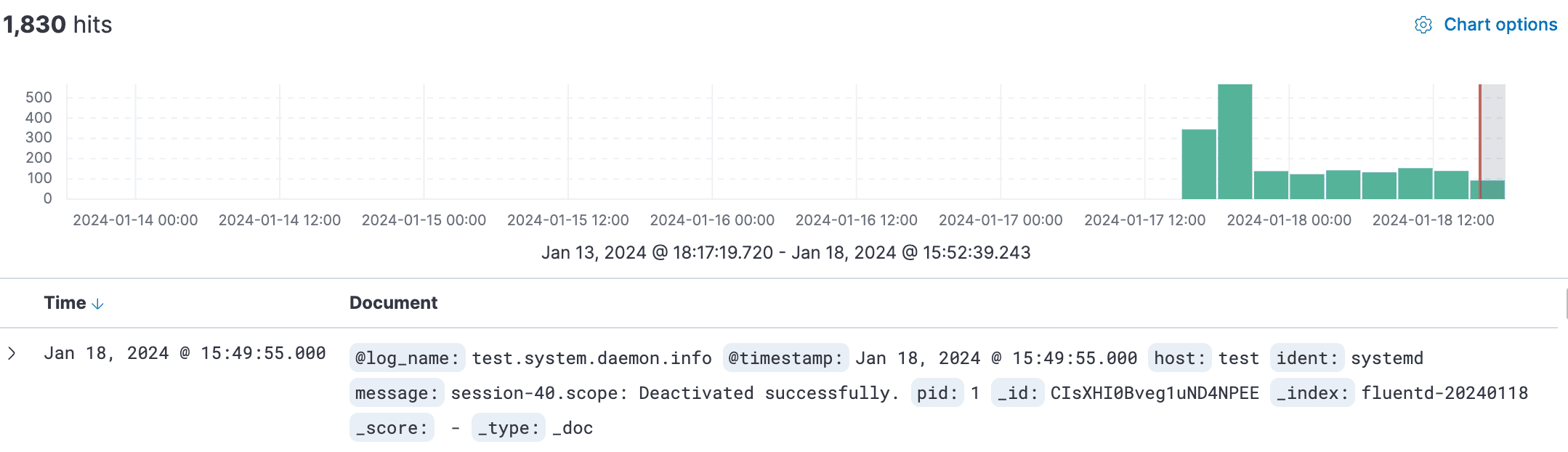

Testcase 3: auth-log

Add the following lines to fluent.conf. After adding these lines, remove the previous Docker images. Then, run 'docker-compose up' again in the ~/efk directory.

tag- log_name: hostname.system.auth.info
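The lines added for this test case are not reproduced here. Given the tag hostname.system.auth.info, one plausible reading is a syslog source with the tag prefix hostname.system, because Fluentd's syslog input appends the facility and priority to the configured tag. The sketch below appends such a source to fluent.conf. The config path, port 5140, and the literal prefix are assumptions. The port would also have to be published for the fluentd service in docker-compose.yml, and the host's syslog daemon configured to forward auth messages to it.

```shell
#!/bin/sh
set -eu
conf=efk/fluentd/conf/fluent.conf   # assumed location of the Fluentd config
mkdir -p "$(dirname "$conf")"

# Append a syslog listener. The syslog input emits events tagged
# <tag>.<facility>.<priority>, so the prefix "hostname.system" yields
# tags such as hostname.system.auth.info.
# Note: running this twice appends the block twice.
cat >> "$conf" <<'EOF'

<source>
  @type syslog
  port 5140
  bind 0.0.0.0
  tag hostname.system
</source>
EOF
```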

Log in to the server again to generate auth log entries:

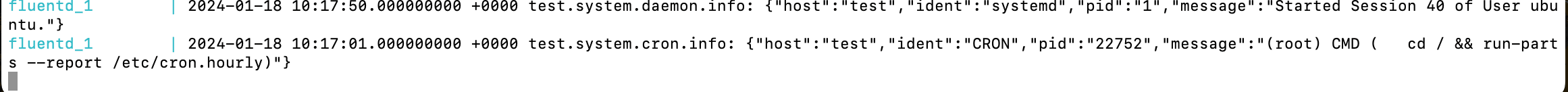

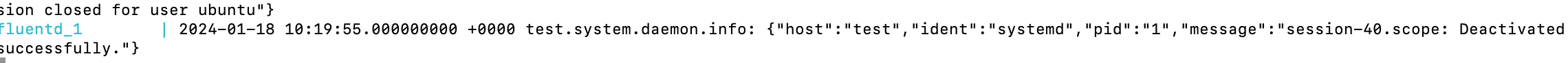

Below are fluentd Container Logs:

On Kibana Dashboard:

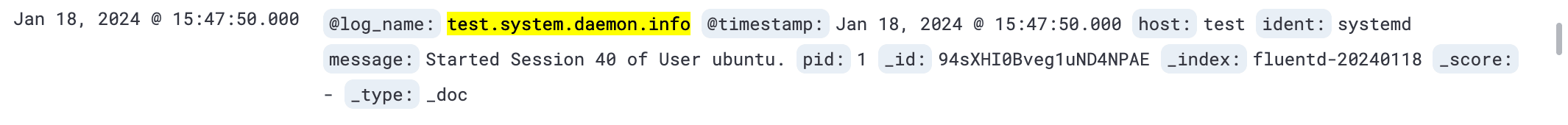

Testcase 4: Container-log

Add the following lines to fluent.conf. After adding these lines, remove the previous Docker images. Then, run 'docker-compose up' again in the ~/efk directory.

tag: container name:

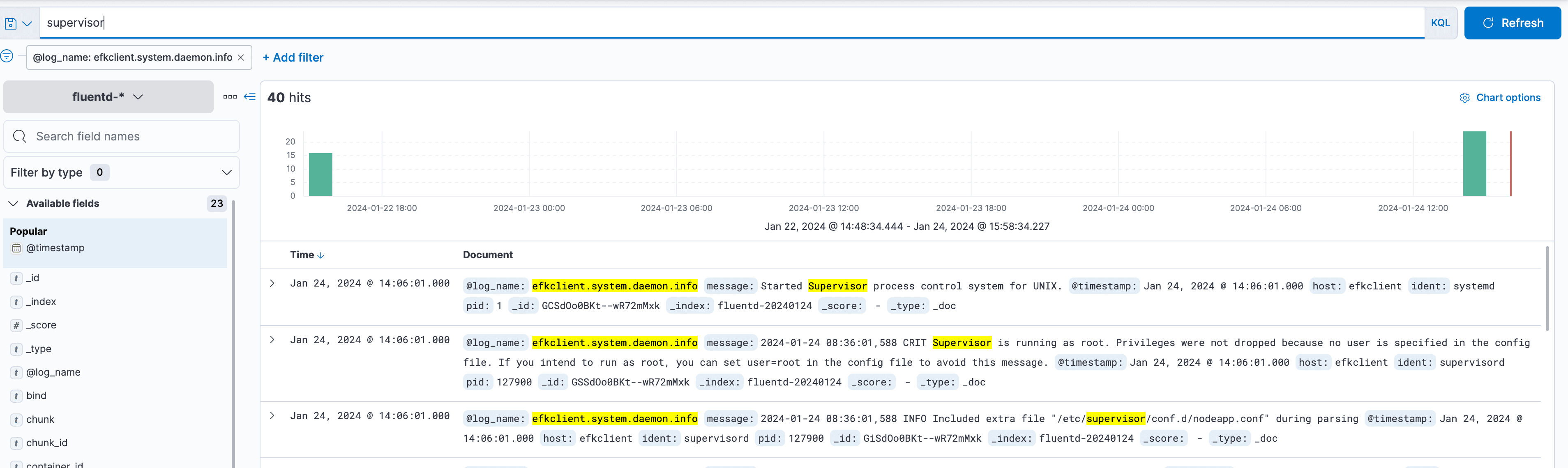

Testcase 5: Supervisor-log

Add the following lines to fluent.conf. After adding these lines, remove the previous Docker images. Then, run 'docker-compose up' again in the ~/efk directory.

tag- log_name: hostname.system.daemon.info

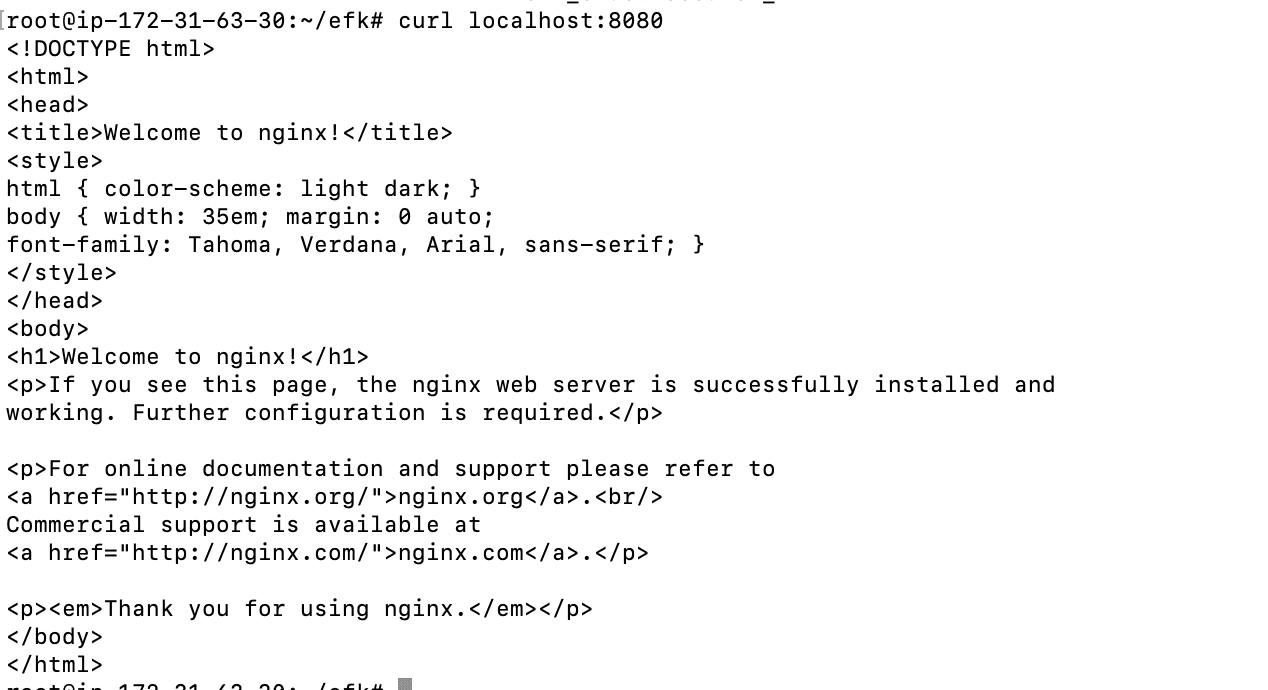

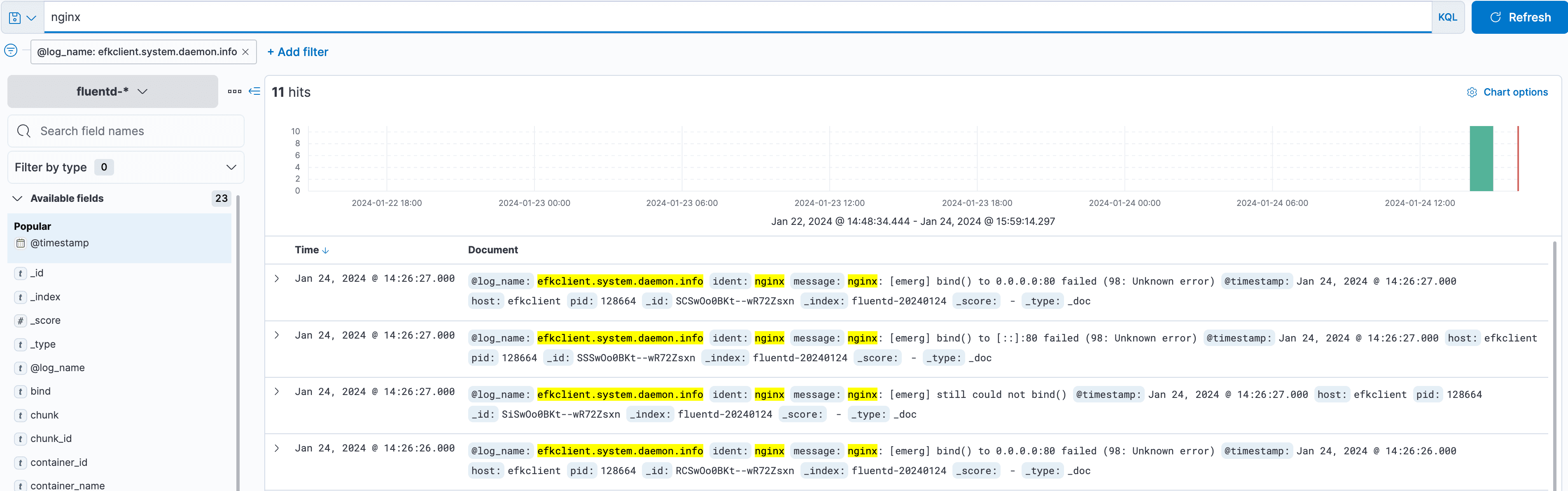

Testcase 6: nginx-log

Add the following lines to fluent.conf. After adding these lines, remove the previous Docker images. Then, run 'docker-compose up' again in the ~/efk directory.

tag- log_name: hostname.system.daemon.info

Conclusion

The EFK stack provides a comprehensive logging solution that can handle a large amount of data and provide real-time insights into the behavior of complex systems. Elasticsearch, Fluentd, and Kibana work together seamlessly to collect, process, and visualize logs, making it easy for developers and system administrators to monitor their applications' and infrastructure's health and performance. If you’re looking for a powerful logging solution, consider using the EFK stack.

Check out these additional resources:

Official docs: https://docs.fluentd.org/v/0.12/articles/docker-logging-efk-compose

Related walkthrough: https://blog.yarsalabs.com/efk-setup-for-logging-and-visualization/

OS support: Download Td-Agent and Download efk

Alternative solutions: ELK (Elasticsearch, Logstash, Kibana), Graylog, Splunk, Fluent Bit, and Grafana Loki
